This post is based on a memo I wrote for this year’s Meta Coordination Forum. See also Arden Koehler’s recent post, which hits a lot of similar notes.

Summary

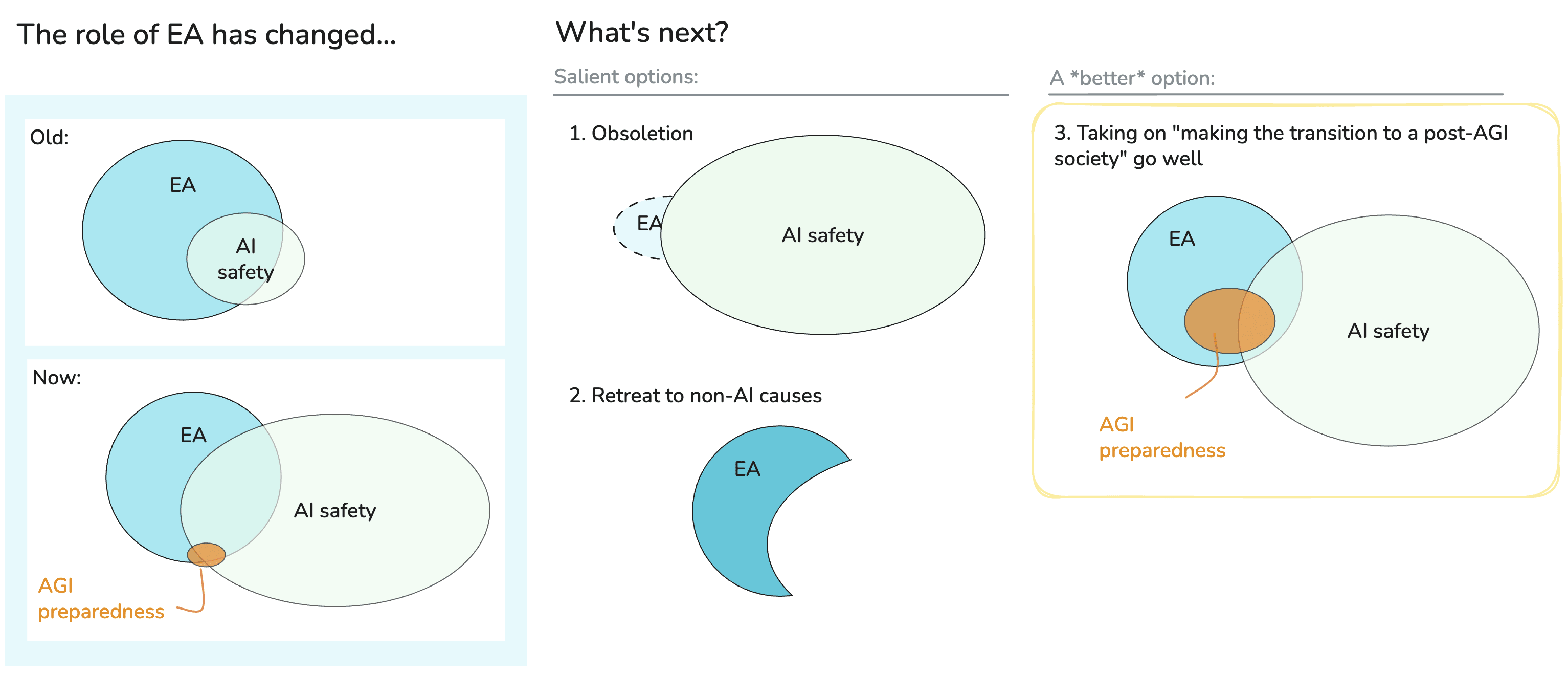

The EA movement stands at a crossroads. In light of AI’s very rapid progress, and the rise of the AI safety movement, some people view EA as a legacy movement set to fade away; others think we should refocus much more on “classic” cause areas like global health and animal welfare.

I argue for a third way: EA should embrace the mission of making the transition to a post-AGI society go well, significantly expanding our cause area focus beyond traditional AI safety. This means working on neglected areas like AI welfare, AI character, AI persuasion and epistemic disruption, human power concentration, space governance, and more (while continuing work on global health, animal welfare, AI safety, and biorisk).

These additional cause areas are extremely important and neglected, and particularly benefit from an EA mindset (truth-seeking, scope-sensitive, willing to change one’s mind quickly). I think that people going into these other areas would be among the biggest wins for EA movement-building right now — generally more valuable than marginal technical safety or safety-related governance work. If we can manage to pull it off, this represents a potentially enormous opportunity for impact for the EA movement.

There's recently been increased emphasis on "principles-first" EA, which I think is great. But I worry that in practice a "principles-first" framing can become a cover for anchoring on existing cause areas, rather than an invitation to figure out what other cause areas we should be working on. Being principles-first means being genuinely open to changing direction based on new evidence; if the world has changed dramatically, we should expect our priorities to change too.

This third way will require a lot of intellectual nimbleness and willingness to change our minds. Post-FTX, much of EA adopted a "PR mentality" that I think has lingered and is counterproductive. EA is intrinsically controversial because we say things that aren't popular — and given recent events, we'll be controversial regardless. This is liberating: we can focus on making arguments we think are true and important, with bravery and honesty, rather than constraining ourselves with excessive caution.

Three possible futures for the EA movement

AI progress has been going very fast, much faster than most people anticipated.[1] AI safety has become its own field, with its own momentum and independent set of institutions. It can feel like EA is in some ways getting eaten by that field: for example, on university campuses, AI safety groups are often displacing EA groups.

Here are a couple of attitudes to EA that I’ve seen people have in response:

- (1) EA is a legacy movement, set to wither and fade away, and that’s fine.[2]

- (2) EA should return to, and double down on, its core, original, ideas: global health and development, animal welfare, effective giving.

I get a lot of (1)-energy from e.g. the Constellation office and other folks heavily involved in AI safety and governance. I’ve gotten (2)-energy in a more diffuse way from some conversations I’ve had, and from online discussion; it’s sometimes felt to me that a “principles-first” framing of EA (which I strongly agree with) can in practice be used as cover for an attitude of “promote the classic mix of cause areas.”

I think that the right approach is a third way:[3]

- (3) EA should take on — and build into part of its broad identity — the mission of making the transition to a post-AGI society go well, making that a major focus (but far from the only focus). This means picking up a range of cause-areas beyond what “AI safety” normally refers to.

To make this more precise and concrete, I mean something like:

- In terms of curriculum content, maybe 30% or more should be on cause areas beyond AI safety, biorisk, animal welfare, or global health. (I’ll write out more which ones below.)

- And a lot of content should be on the values and epistemic habits we’ll need to navigate in a very rapidly changing world.

- In terms of online EA-related public debate, maybe 50% of that becomes about making the transition to a post-AGI society go well.

- This % is higher than for curriculum content because “AGI preparedness” ideas are generally fresher and juicier than other content, and because AI-related content now organically gets a lot more interest than other areas.

- In terms of what people do, maybe 15% (for now, scaling up to 30% or more over time) are primarily working on cause areas other than the “classic” cause areas, and a larger fraction (50% or more) have it in their mind as something they might transition into in a few years.

- I think that people going into these other areas would be among the biggest wins for EA movement-building; generally more valuable than marginal technical safety or safety governance.

- If someone really can focus on a totally neglected area like AI-enabled human power grabs or AI rights and make it more concrete and tractable, that’s plausibly the highest-impact thing they can do.

More broadly, I like to think about the situation like this:

- Enlightenment thinkers had a truly enormous positive impact on the world by helping establish the social, cultural and institutional framework for the Modern Era.

- EA could see itself as contributing to a second Enlightenment, focusing on what the social, cultural and institutional framework should be for the Age of AGI, and helping make the transition to that society go as well as possible.

I think the third way is the right approach for two big reasons:

- I think, in the aggregate, AGI-related cause areas other than technical alignment and biorisk are as big or even bigger a deal than technical alignment and biorisk are, and there are highly neglected areas in this space. EA can help fill those gaps.

- Currently, the EA movement feels intellectually adrift, and this focus could be restorative.

A third potential reason is:

- Even just for AI safety, EA-types are way more valuable than non-EA technical alignment researchers.

I’ll take each in turn.

Reason #1: Neglected cause areas

The current menu of cause areas in EA is primarily:

- global health & development

- factory farming

- AI safety

- biorisk

- “meta”

On the “third way” approach, taking on the mission of making the transition to a post-AGI society go well, the menu might be more like this (though note this is meant to be illustrative rather than exhaustive, is not in any priority order, and in practice these wouldn’t all get equal weight[4]):

- global health & development

- factory farming

- AI safety

- AI character[5]

- AI welfare / digital minds

- the economic and political rights of AIs

- AI-driven persuasion and epistemic disruption

- AI for better reasoning, decision-making and coordination

- the risk of (AI-enabled) human coups

- democracy preservation

- gradual disempowerment

- biorisk

- space governance

- s-risks

- macrostrategy

- meta

I think the EA movement would accomplish more good if people and money were more spread across these cause areas than they currently are.

I make the basic case for the importance of some of these areas, and explain what they are at more length, in PrepIE (see especially section 6) and Better Futures (see especially the last essay).[6] The key point is that there’s a lot to do to make the transition to a post-AGI world go well; and much of this work isn't naturally covered by AI safety and biosecurity.

These areas are unusually neglected. The pipeline of AI safety folks is much stronger than it is for these other areas, given MATS and the other fellowships; the pipeline for many of these other areas is almost nonexistent. And the ordinary incentives for doing technical AI safety research (for some employers, at least) are now very strong: including equity, you could get a starting salary on the order of $1M/yr, working in an exciting, high-status role, in the midst of the action, with other smart people. Compare with, say, public campaigning, where you get paid much less, and get a lot more hate.[7]

These other cause areas are also unusually EA-weighted, in the sense that they particularly benefit from EA-mindset (i.e. ethically serious, intensely truth-seeking, high-decoupling, scope-sensitive, broadly cosmopolitan, ambitious, and willing to take costly personal actions.)

If AI progress continues as I expect it to, over the next ten years a huge amount will change in the world as a result of new AI capabilities, new technology, and society’s responses to those changes. We’ll learn a huge amount more, too, from AI-driven intellectual progress. To do the most good, people will need to be extremely nimble and willing to change their minds, in a way that most people generally aren’t.

The biggest note of caution, in my view, is that at the moment these other areas have much less absorptive capacity than AI safety and governance: there isn’t yet a thriving ecosystem of organisations and fellowships etc that make it easy to work on these areas. That means I expect there to be a period of time during which: (i) there’s a lot of discussion of these issues; (ii) some people work on building the necessary ecosystem, or on doing the research necessary to figure out what the most viable paths are; but (iii) most people pursue other career paths, with an eye to switching in when the area is more ripe. This situation reminds me a lot of AI takeover risk or biorisk circa 2014.

Reason #2: EA is currently intellectually adrift

Currently, the online EA ecosystem doesn’t feel like a place full of exciting new ideas, in a way that’s attractive to smart and ambitious people:

- The Forum is pretty underwhelming nowadays. For someone writing a blogpost and wanting good intellectual engagement, LessWrong, which has its own issues, is a much stronger option.

- There’s little in the way of public EA debate; the sense one gets is that most of the intellectual core have “abandoned” EA — already treating it as a “legacy” movement. (From my POV, most of the online discussion centers around people like Bentham’s Bulldog and Richard Chappell, if only because those are some of the few people really engaging.)

Things aren’t disastrous or irrecoverable, and there’s still lots of promise. (E.g. I thought EAG London was vibrant and exciting, and in general in-person meetups still seem great.) But I think we’re far from where we could be.

It seems to me like a very fortunate bonus that these other cause areas are so intellectually fertile; there are just so many unanswered questions, and so many gnarly tradeoffs to engage with. An EA movement that was engaging much more with these areas would, in its nature, be intensely intellectually vibrant.

It also seems to me there’s tons of low-hanging fruit in this area. For one thing, there’s already a tremendous amount of EA-flavoured analysis happening, by EAs or the “EA-adjacent”; it’s just that most of it happens in person, or in private Slack channels or googledocs. And when I’ve run the content of this post by old-hand EAs who are now focusing on AI, the feedback I’ve gotten is an intense love of EA, and keenness (all other things being equal) to Make EA Great Again; it’s just that they’re busy and it’s not salient to them what they could be doing.

I think this is likely a situation where there are multiple equilibria we could end up in. If online EA doesn’t seem intellectually vibrant, then it’s not an attractive place for someone to intellectually engage with; if it does seem vibrant, then it is. (LessWrong has seen this dynamic, falling into comparative decline before LessWrong 2.0 rebooted it into an energetic intellectual community.)

Reason #3: The benefits of EA mindset for AI safety and biorisk

Those are my main reasons for wanting EA to take the third path forward. But there’s an additional argument, which others have pressed on me: Even just for AI safety or biorisk reduction, EA-types tend to be way more impactful than non-EA types.

Unfortunately, many of these examples are sensitive and I haven’t gotten permission to talk about them, so instead I’ll quote Caleb Parikh who gives a sense of this:

Some "make AGI go well influencers" who have commented or posted on the EA Forum and, in my view, are at the very least EA-adjacent include Rohin Shah, Neel Nanda, Buck Shlegeris, Ryan Greenblatt, Evan Hubinger, Oliver Habryka, Beth Barnes, Jaime Sevilla, Adam Gleave, Eliezer Yudkowsky, Davidad, Ajeya Cotra, Holden Karnofsky .... most of these people work on technical safety, but I think the same story is roughly true for AI governance and other "make AGI go well" areas.

This isn’t a coincidence. The most valuable work typically comes from deeply understanding the big picture, seeing something very important that almost no one is doing (e.g. control, infosecurity), and then working on that. Sometimes, it involves taking seriously personally difficult actions (e.g. Daniel Kokotajlo giving up a large fraction of his family’s wealth in order to be able to speak freely).

Buck Shlegeris has also emphasised to me the importance of having common intellectual ground with other safety folks, in order to be able to collaborate well. Ryan Greenblatt gives further reasons in favour of longtermist community-building here.

This isn’t particularly Will-idiosyncratic

If you’ve got a very high probability of AI takeover (obligatory reference!), then my first two arguments, at least, might seem very weak because essentially the only thing that matters is reducing the risk of AI takeover. And it’s true that I’m unusually into non-takeover AGI preparedness cause areas, which is why I’m investing the time to write this.

But the broad vibe in this post isn’t Will-idiosyncratic. I’ve spoken to a number of people whose estimate of AI takeover risk is a lot higher than mine who agree (to varying degrees) with the importance of non-misalignment, non-bio areas of work, and buy that these other areas are particularly EA-weighted.

If this is true, why aren’t more people shouting about this? The issue is that very few people, now, are actually focused on cause-prioritisation, in the sense of trying to figure out what new areas we should be working on. There’s a lot to do and, understandably, people have got their heads down working on object-level challenges.

Some related issues

Before moving onto what, concretely, to do, I’ll briefly comment on three related issues, as I think they affect what the right path forward is.

Principles-first EA

There’s been a lot of emphasis recently on “principles-first” EA, and I strongly agree with that framing. But being “principles-first” means actually changing our mind about what to do, in light of new evidence and arguments, and as new information about the world comes in. I’m worried that, in practice, the “principles-first” framing can be used as cover for “same old cause-areas we always had.”[8]

I think that people can get confused by thinking about “AI” as a cause area, rather than thinking about a certain set of predictions about the world that have implications for most things you might care about. Even in “classic” cause areas (e.g. global development), there’s enormous juice in taking the coming AI-driven transformation seriously — e.g. thinking about how the transition can be structured so as to benefit the global poor as much as feasible.

I’ve sometimes heard people describe me as having switched my focus from EA to AI. But I think it would be a big mistake to think of AI focus as a distinct thing from an EA focus.[9] From my perspective, I haven’t switched my focus away from EA at all. I’m just doing what EA principles suggest I should do: in light of a rapidly changing world, figuring out what the top priorities are, and where I can add the most value, and focusing on those areas.

Cultivating vs growing EA

From a sterile economics-y perspective, you can think of EA-the-community as a machine for turning money and time into goodness:[10]

The purest depiction of the EA movement.

In the last year or two, there’s been a lot of focus on growing the inputs. I think this was important, in particular to get back a sense of momentum, and I’m glad that that effort has been pretty successful. I still think that growing EA is extremely valuable, and that some organisation (e.g. Giving What We Can) should focus squarely on growth.

But right now I think it’s even more valuable, on the current margin, to try to improve EA’s ability to turn those inputs into value — what I’ll broadly call EA’s culture. This is sometimes referred to as “steering”, but I think that’s the wrong image: the idea of trying to aim towards some very particular destination. I prefer the analogy of cultivation — like growing a plant, and trying to make sure that it’s healthy.

There are a few reasons why I think that cultivating EA’s culture is more important on the current margin than growing the inputs:

- Shorter AI timelines mean there’s less time for growth to pay off. Fundraising and recruitment typically take years, whereas cultural improvements (such as by reallocating EA labour) can be faster.

- The expected future inputs have gone up a lot recently, and as the scale of inputs increases, the importance of improving the use of those inputs increases relative to the gains from increasing inputs even further.

- Money: As a result of AI-exposed valuations going up, hundreds of people will have very substantial amounts to donate; the total of expected new donations is in the billions of dollars. And if transformative AI really is coming in the next ten years, then the real value of AI-exposed equity is worth much more again, e.g. 10x+ as much.

- Labour: Here, there’s more of a bottleneck, for now. But, fuelled by the explosion of interest in AI, MATS and other fellowships are growing at a prodigious pace. The EA movement itself continues to grow. And we’ll increasingly be able to pay for “AI labour”: once AI can substitute for some role, then an organisation can hire as much AI labour to fill that role as they can pay for, with no need to run a hiring round, and no decrease in quality of performance of the labour as they scale up. Once we get closer to true AGI, money and labour become much more substitutable.

- In contrast, I see much more of a bottleneck coming from knowing how best to use those inputs.

- As I mentioned earlier, if we get an intelligence explosion, even a slow or muted one, there will be (i) a lot of change in the world, happening very quickly; (ii) a lot of new information, ideas and arguments being produced by AI, in a short space of time. That means we need intense intellectual nimbleness. My strong default expectation is that people will not change their strategic picture quickly enough, or change what they are doing quickly enough. We can try to set up a culture that is braced for this.

- Cultivation seems more neglected to me, at the moment, and I expect this neglectedness to continue. It’s seductive to focus on increasing inputs because it’s easier to create metrics and track progress. For cultivation, metrics don’t fit very well: having a suite of metrics doesn’t help much with growing a plant. Instead, the right attitude is more like paying attention to what qualitative problems there are and fixing them.

- If the culture changed in the ways I’m suggesting, I think that would organically be good for growth, too.

“PR mentality”

Post-FTX, I think core EA adopted a “PR mentality” that (i) has been a failure on its own terms and (ii) is corrosive to EA’s soul.

By “PR mentality” I mean thinking about communications through the lens of “what is good for EA’s brand?” instead of focusing on questions like “what ideas are true, interesting, important, under-appreciated, and how can we get those ideas out there?”[11]

I understand this as a reaction in the immediate aftermath of FTX — that was an extremely difficult time, and I don’t claim to know what the right calls were in that period. But it seems to me like a PR-focused mentality has lingered.

I think this mentality has been a failure on its own terms because… well, there’s been a lot of talk about improving the EA brand over the last couple of years, and what have we had to show for it? I hate to be harsh, but I think that the main effect has just been a withering of EA discourse online, and the effect of more people believing that EA is a legacy movement.

This also matches my perspective from interaction with “PR experts” — where I generally find they add little, but do make communication more PR-y, in a way that’s a turn-off to almost everyone. I think the standard PR approach can work if you’re a megacorp or a politician, but we are neither of those things.

And I think this mentality is corrosive to EA’s soul because as soon as you stop being ruthlessly focused on actually figuring out what’s true, then you’ll almost certainly believe the wrong things and focus on the wrong things, and lose out on most impact. Given fat-tailed distributions of impact, getting your focus a bit wrong can mean you do 10x less good than you could have done. Worse, you can easily end up having a negative rather than a positive effect.

And this becomes particularly true in the age of AGI. Again, we should expect enormous AI-driven change and AI-driven intellectual insights (and AI-driven propaganda); without an intense focus on figuring things out, we’ll miss the changes or insights that should cause us to change our minds, or we’ll be unwilling to enter areas outside the current Overton window.

Here’s a different perspective:

- EA is, intrinsically, a controversial movement — because it’s saying things that are not popular (there isn’t value in promoting ideas that are universally endorsed because you won’t change anyone’s mind!), and because its commitment to actually-believing the truth means it will regularly clash with whatever the dominant intellectual ideology of the time is.

- In the past, there was a hope that with careful brand management, we could be well-liked by almost everyone.

- Given events of the last few years (I’m not just thinking of FTX but also leftwing backlash to billionaire association, rightwing backlash to SB1047, tech backlash to the firing of Sam Altman), and given the intrinsically-negative-and-polarising nature of modern media, that ship has sailed.

- But this is a liberating fact. It means we don’t need to constrain ourselves with PR mentality — we’ll be controversial whatever we do, so the costs of additional controversy are much lower. Instead, we can just focus on making arguments about things we think are true and important. Think Peter Singer! I also think the “vibe shift” is real, and mitigates much of the potential downsides from controversy.

What I’m not saying

In earlier drafts, I found that people sometimes misunderstood me, taking me to have a more extreme position than I really have. So here’s a quick set of clarifications (feel free to skip):

Are you saying we should go ALL IN on AGI preparedness?

No! I still think people should figure out for themselves where they think they’ll have the most impact, and probably lots of people will disagree with me, and that’s great.

There’s also still a reasonable chance that we don’t get to better-than-human AI within the next ten years, even after the fraction of the economy dedicated to AI has scaled up by as much as it feasibly can. If so, then the per-year chance of getting to better-than-human AI will go down a lot (because we’re not getting the unusually rapid progress from investment scale-up), and timelines would probably become a lot longer. The ideal EA movement is robust to this scenario, and even in my own case I’m thinking about my current focus as a next-five-years thing, after which I’ll reassess depending on the state of the world.

Shouldn’t we instead be shifting AI safety local groups (etc) to include these other areas?

Yes, that too!

Aren’t timelines short? And doesn’t all this other stuff only pay off in long timelines worlds?

I think it’s true that this stuff pays off less well in very short (e.g. 3-year) timelines worlds. But it still pays off to some degree, and pays off comparatively more in longer-timeline and slower-takeoff worlds, and we should care a lot about them too.

But aren’t very short timelines the highest-leverage worlds?

This is not at all obvious to me. For a few reasons:

- Long timelines are high-impact because:

- You get more time for exponential movement-growth to really kick in.

- We know more and can think better in the future, so we can make better decisions. (But still get edge because values-differences remain.)

- In contrast, very short timelines will be particularly chaotic, and we’re flying blind, such that it’s harder to steer things for the better.

- Because short timelines worlds are probably more chaotic and there’s more scope to majorly mess up, I think the expected value of the future conditional on successful existential risk mitigation is lower in short timelines worlds than it is in longer timelines worlds.

Is EA really the right movement for these areas?

It’s not the ideal movement (i.e. not what we’d design from scratch), but it’s the closest we’ve got, and I think the idea of setting up some wholly new movement is less promising than EA itself as a whole evolving.

Are you saying that EA should just become an intellectual club? What about building things!?

Definitely not - let’s build, too!

Are you saying that EA should completely stop focusing on growth?

No! It’s more like: at the moment there’s quite a lot of focus on growth. That’s great. But there seems to be almost no attention on cultivation, even though that seems especially important right now, and that’s a shame.

What if I don’t buy longtermism?

Given how rapid the transition will be, and the scale of social and economic transformation that will come about, I actually think longtermism is not all that cruxy, at least as long as you’ve got a common-sense amount of concern for future generations.

But even if that means you’re not into these areas, that’s fine! I think EA should involve lots of different cause areas, just as a healthy well-functioning democracy has people with a lot of different opinions: you should figure out what worldview you buy, and act on that basis.

What to do?

I’ll acknowledge that I’ve spent more time thinking about the problems I’m pointing to, and the broad path forward, than I have about particular solutions, so I’m not pretending that I have all the answers. I’m also lacking a lot of boots-on-the-ground context. But I hope at least we can start discussing whether the broad vision is on point, and what we could concretely do to help push in this direction. So, to get that going, here are some pretty warm takes.

Local groups

IIUC, there’s been a shift on college campuses from EA uni groups to AI safety groups. I don’t know the details of local groups, and I expect this view to be more controversial than my other claims, but I think this is probably an error, at least in extent.

The first part of my reasoning I’ve already given — the general arguments for focusing on non-safety non-bio AGI preparedness interventions.

But I think these arguments bite particularly hard for uni groups, for two reasons:

- Uni groups have a delayed impact, and this favours these other cause areas.

- Let’s say the median EA uni group member is 20.

- And, for almost all of them, it takes 3 years before they start making meaningful contributions to AI safety.

- So, at best, they are starting to contribute in 2028.

- We get a few years of work in my median timeline worlds.

- And almost no work in shorter-timeline worlds, where additional technical AI safety folks are most needed.

- In contrast, many of these other areas (i) continue to be relevant even after the point of no return with respect to alignment, and (ii) become comparatively more important in longer-timeline and slower-takeoff worlds (because the probability of misaligned takeover goes down in those worlds).

- (I think this argument isn’t totally slam-dunk yet, but will get stronger over the next couple of years.)

- College is an unusual time in one’s life where you’re in the game for new big weird ideas and can take the time to go deep into them. This means uni groups provide an unusually good way to create a pipeline for these other areas, which are often further outside the Overton window, and which particularly reward having a very deep strategic understanding.

The best counterargument I’ve heard is that it’s currently boom-time for AI safety field-building. AI safety student groups get to ride this wave, and miss out on it if there’s an EA group instead.

This seems like a strong counterargument to me, so my all-things-considered view will depend on the details of the local group. My best guess is that, where possible: (i) AI safety groups should incorporate more focus on these other areas;[12] and (ii) there should be both AI safety and EA groups, with a bunch of shared events on the “AGI preparedness” topics.

Online

Some things we could do here include:

- Harangue old-hand EA types to (i) talk about and engage with EA (at least a bit) if they are doing podcasts, etc; (ii) post on the Forum (esp if posting to LW anyway), twitter, etc, engaging with EA ideas; (iii) more generally own their EA affiliation.

- (Why “old-hand”? This is a shorthand phrase, but my thought is: at the moment, there’s not a tonne of interaction between people who are deep into their careers and people who are newer to EA. This means that information transfer (and e.g. career guidance) out from people who’ve thought most and are most knowledgeable about the current top priorities is slower than it might otherwise be; the AGI preparedness cause areas I talk about are just one example of this.)

- Make clear that what this is about is getting them to just be a bit more vocal about their views on EA and EA-related topics in public; it’s not to toe some party line.

- Explicitly tell such people that the point is to engage in EA ideas, rather than worry about “EA brand”. Just write or say stuff, rather than engaging in too much meta-strategising about it. If anything, emphasise bravery / honesty, and discourage hand-wringing about possible bad press.

- At the very least, such people should feel empowered to do this, rather than feeling unclear if e.g. CEA even wants this. (Which is how it seems to me at the moment.)

- But I also think that (i) overall there’s been an overcorrection away from EA so it makes sense for some people to correct back; and (ii) people should have a bit of a feeling of “civic duty” about this.

- I think that even a bit of this from the old hands is really valuable. Like, the difference between someone doing 2x podcasts or blogposts per year where they engage with EA ideas or the EA movement vs them doing none at all is really big.

- (My own plan is to do a bit more public-facing stuff than I’ve done recently, starting with this post, then doing something like 1 podcast/month, and a small burst in February when the DGB 10-year anniversary edition comes out.)

- The Forum is very important for culture-setting, and we could try to make the Forum somewhere that AI-focused EAs feel more excited about posting than they currently are. This could just be about resetting expectations of the EA Forum as a go-to place to discuss AGI preparedness topics.

- (When I’ve talked about this “third way” for EA to others, it’s been striking how much people bring up the Forum. I think the Forum in particular sets cultural expectations for people who are deep into their own careers and aren’t attending in-person EA events or conferences, but are still paying some attention online.)

- The Forum could have, up top, a “curriculum” of classic posts.

- Revise and update core EA curricula, incorporating more around preparing for a post-AGI world.

- Be more ok with embracing EA as a movement that’s very significantly about making the transition to a post-AGI society go well.

- (The sense I get is that EA comms folks want to downplay or shy away from the AI focus in EA. But that’s bad both on object-level-importance grounds, and because it’s missing a trick — not capturing the gains we could be getting as a result of EA types being early to the most important trend of our generation, and having among the most interesting ideas to contribute towards it. And I expect that attention on many of the cause areas I’ve highlighted will grow substantially over the coming years.)

- 80k could have more content on what people can do in these neglected areas, though it’s already moved significantly in this direction.

Conferences

- Harangue old-hand EA-types to attend more EAGs, too. Encourage them to think of it as an opportunity to have impact (which they won’t necessarily see play out), rather than something they might personally benefit from.

- Maybe, in addition to EAGs, host conferences and retreats that are more like a forum for learning and intellectual exploration, and less like a job fair.

- Try building such events around the experienced people you’d want to have there, e.g. hosting them near where such people work, or finding out what sorts of events the old-hands would actually want to go to.

- Try events explicitly focused around these more-neglected areas (which could, for example, immediately follow research-focused events).

Conclusion

EA is about taking the question "how can I do the most good?" seriously, and following the arguments and evidence wherever they lead. I claim that, if we’re serious about this project, then developments in the last few years should drive a major evolution in our focus.

I think it would be a terrible shame, and a huge loss of potential, if people came to see EA as little more than a bootloader for the AI safety movement, or if EA ossified into a social movement focused on a fixed handful of causes. Instead, we could be a movement that's grappling with the full range of challenges and opportunities that advanced AI will bring, doing so with the intellectual vitality and seriousness of purpose that is at EA’s core.

—— Thanks to Joe Carlsmith, Ajeya Cotra, Owen Cotton-Barratt, Max Dalton, Max Daniel, Tom Davidson, Lukas Finnveden, Ryan Greenblatt, Rose Hadshar, Oscar Howie, Kuhan Jeyapragasan, Arden Koehler, Amy Labenz, Fin Moorhouse, Toby Ord, Caleb Parikh, Zach Robinson, Eli Rose, Buck Shlegeris, Michael Townsend, Lizka Vaintrob, and everyone at the Meta Coordination Forum.

- ^

I’ve started thinking about the present time as the “age of AGI”: the time period where we have fairly general-purpose AI reasoning systems, and where I think of GPT-4 as the first very weak AGI, ushering in the age of AGI. (Of course, any dividing line will have a lot of arbitrariness, and my preferred definition for “full” AGI — a model that can do almost any cognitive task as well as an expert human at lower cost, and that can learn as sample-efficiently as an expert human — is a lot higher a bar.)

- ^

The positively-valenced statement of this is something like: “EA helped us find out how crucial AI would be about 10 years before everyone else saw it, which was a very useful head start, but we no longer need the exploratory tools of EA as we've found the key thing of our time and can just work on it.”

- ^

This is similar to what Ben West called the “Forefront of weirdness” option for “Third wave EA”.

- ^

And note that some (like AI for better reasoning, decision-making and coordination) are cross-cutting, in that work in the area can help with many other cause areas.

- ^

I.e. What should be in the model spec? How should AI behave in the countless different situations it finds itself in? To what extent should we be trying to create pure instruction-following AI (with refusals for harmful content) vs AI that has its own virtuous character?

- ^

- ^

In June 2022, Claire Zabel wrote a post, “EA and Longtermism: not a crux for saving the world”, and said:

I think that recruiting and talent pipeline work done by EAs who currently prioritize x-risk reduction (“we” or “us” in this post, though I know it won’t apply to all readers) should put more emphasis on ideas related to existential risk, the advent of transformative technology, and the ‘most important century’ hypothesis, and less emphasis on effective altruism and longtermism, in the course of their outreach.

This may have been a good recommendation at the time; but in the last three years the pendulum has heavily swung the other way, sped along by the one-two punch of the FTX collapse and the explosion of interest and progress in AI, and in my view has swung too far.

- ^

Being principles-first is even compatible with most focus going on some particular area of the world, or some particular bet. Y Combinator provides a good analogy. YC is “cause neutral” in the sense that they want to admit whichever companies are expected to make the most money, whatever sector they are working in. But recently something like 90% of YC companies have been AI focused — because that’s where the most expected revenue is. (The only primary source I could find is this which says “over 50%” in the Winter 2024 batch.)

That said, I think it would be a mistake if everyone in EA were all-in on an AI-centric worldview.

- ^

As AI becomes a progressively bigger deal, affecting all aspects of the world, that attitude would be a surefire recipe for becoming irrelevant.

- ^

You can really have fun (if you’re into that sort of thing) porting over and adapting growth models of the economy to EA.

You could start off thinking in terms of a Cobb-Douglas production function:

$V = A K^{\alpha} L^{1-\alpha}$

Where K is capital (i.e. how much EA-aligned money there is), L is labour, and A is EA’s culture, institutions and knowledge. At least for existential risk reduction or better futures work, producing value seems more labour-intensive than capital-intensive, so $\alpha < 0.5$.

But, probably capital and labour are less substitutable than this (it’s hard to replace EA labour with money), so you’d want a CES production function:

$V = A\,(K^{\rho} L^{1-\rho})^{1/\rho}$

With $\rho$ of less than 0.

But, at least past some size, EA clearly demonstrates decreasing returns to scale, as we pluck the lowest-hanging fruit. So we could incorporate this too:

$V = A\,(K^{\rho} L^{1-\rho})^{\upsilon/\rho}$

With $\upsilon$ of less than 1.

In the language of this model, part of what I’m saying in the main text is that (i) as K and L increase (which they are doing), the comparative value of increasing A increases by a lot; (ii) there seem to me to be some easy wins to increase A.

I’ll caveat I’m not an economist, so really I’m hoping that Cunningham’s law will kick in and Phil Trammell or someone will provide a better model. For example, maybe ideally you’d want returns to scale to be logistic, as you get increasing returns to scale to begin with, but value ultimately plateaus. And you’d really want a dynamic model that could represent, for example, the effect of investing some L in increasing A, e.g. borrowing from semiendogenous models.
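As a rough numerical sketch of the kind of model described in this footnote (not a calibrated estimate), the snippet below uses the textbook CES form: a weighted sum of K^ρ and L^ρ raised to the power υ/ρ. That form, the function names, and the parameter values (share = 0.3, ρ = -1, υ = 0.5) are all assumptions chosen for illustration, and may differ from the exact functional form intended above. It shows three things: doubling both inputs less than doubles V when υ < 1, the marginal value of A rises as K and L grow, and piling on capital with labour held fixed runs into a ceiling when ρ < 0.

```python
def ces_value(A, K, L, rho=-1.0, upsilon=0.5, share=0.3):
    """Textbook CES aggregate with a returns-to-scale exponent.

    All parameter values are hypothetical; `share` is the weight on capital.
    """
    inner = share * K**rho + (1.0 - share) * L**rho
    return A * inner ** (upsilon / rho)


def marginal_value_of_A(K, L, **params):
    # V is linear in A, so dV/dA equals V evaluated at A = 1.
    return ces_value(1.0, K, L, **params)


# Decreasing returns to scale: doubling K and L less than doubles V.
small = ces_value(1.0, 1.0, 1.0)
big = ces_value(1.0, 2.0, 2.0)

# The marginal value of improving A grows as K and L grow.
mv_small = marginal_value_of_A(1.0, 1.0)
mv_big = marginal_value_of_A(2.0, 2.0)

# Low substitutability (rho < 0): huge capital with fixed labour hits a ceiling.
capital_heavy = ces_value(1.0, 1e6, 1.0)
```

In this toy setup the marginal value of A scales with the size of the rest of the system, which is the sense in which growth in K and L raises the comparative value of improving A.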

- ^

Some things I don’t mean by the truth-oriented mindset:

- “Facts don’t care about your feelings”-style contrarianism, that aims to deliberately provoke just for the sake of it.

- Not paying attention to how messages are worded. In my view, a truth-oriented mindset is totally compatible with, for example, thinking about what reactions or counterarguments different phrasings might have on the recipient and choosing wordings with that in mind — aiming to treat the other person with empathy and respect, to ward off misconceptions early, and to put ideas in their best light.

- ^

I was very happy to see that BlueDot has an “AGI strategy” course, and has incorporated AI-enabled coups into its reading list. But I think it could go a lot further in the direction I’m suggesting.

I really like this take on EA as an intellectual movement, and agree that EA could focus more on “the mission of making the transition to a post-AGI society go well.”

As important as intellectual progress is, I don’t think it defines EA as a movement. The EA movement is not (and should not be) dependent on continuous intellectual advancement and breakthrough for success. When I look at your 3 categories for the “future” of EA, they seem to refer more to our relevance as thought leaders, rather than what we actually achieve in the world. Not everything needs to be intellectually cutting edge to be doing-lots-of-good. I agree that EA might be somewhat “intellectually adrift”, and yes the forum could be more vibrant, but I don’t think these are the only metrics for EA success or progress - and maybe not even the most important.

Intellectual progress moves in waves and spikes - times of excitement and rapid progress, then lulls. EA made exciting leaps over 15 years in the thought worlds of development, ETG, animal welfare, AI and biorisk. Your post-AGI ideas could herald a new spike which would be great. My positive spin is that in the meantime, EAs are “doing” large s...

Thanks, Nick, that's helpful. I'm not sure how much we actually disagree — in particular, I wasn't meaning this post to be a general assessment of EA as a movement, rather than pointing to one major issue — but I'll use the opportunity to clarify my position at least.

It's true in principle that EA needn't be dependent in that way. If we really had found the best focus areas, had broadly allocated the right % of labour to each, and had prioritised within them well, too, and the best focus areas didn't change over time, then we could just focus on doing and we wouldn't need any more intellectual advancement. But I don't think we're at that point. Two arguments:

1. An outside view argument: In my view, we're more likely than not to see more change and more intellectual development in the next two decades than we saw in the last couple of centuries. (I think we've already seen major...

This is going to be rambly; I don't have the energy or time to organize my thoughts more atm. tldr is that I think the current uppercase EA movement is broken and I'm not sure it can be fixed. I think there is room for a new uppercase EA movement that is bigger tent, less focused on intellectualism, more focused on organizing, and additionally has enough of a political structure that it is transparent who is in charge, by what mechanisms we hold bad behavior accountable, etc. I have been spending increasingly more of my time helping the ethical humanist society organize because I believe that, while lacking the intellectual core of EA, it is more set up with all of the above, and it feels easier to shift the intellectual core there than the entire informal structure of EA.

Fundamentally we are a mission with a community not a community with a mission. And that starts from the top (or lack of a clearly defined "top").

We consistently overvalue thought leaders and wealthy people and undervalue organizers. Can anyone here name an organizer that they know of just for organizing? I spent a huge amount of my time in college organizing Northwestern EA. Of course I don't regret it beca...

I think it's likely that without a long (e.g. multi-decade) AI pause, one or more of these "non-takeover AI risks" can't be solved or reduced to an acceptable level. To be more specific:

I'm worried that by creating (or redirecting) a movement to solve these problems, without noting at an early stage that these problems may not be solvable in a relevant time-frame (without a long AI pause), it will feed into a human tendency to be overconfident about one's own ideas and solutions, and create a group of people whose identities, livelihoods, and social status are tied up with having (what they think are) good solutions or approaches to these problems, ultimately making it harder in the future to build consensus about the desirability of pausing AI development.

I don't understand why you're framing the goal as "solving or reducing to an acceptable level", rather than thinking about how much expected impact we can have. I'm in favour of slowing the intelligence explosion (and in particular of "Pause at human-level".) But here's how I'd think about the conversion of slowdown/pause into additional value:

Let's say the software-only intelligence explosion (SOIE) lasts N months. The value of any slowdown effort is given by some function of N that's at least as concave as log in the length of time of the SOIE.

So, if the function is log, you get as much value from going from 6 months to 1 year as you do going from 1 decade to 2 decades. But the former is way easier to achieve than the latter. And, actually, I think the function is more concave than log: the gains from 6 months to 1 year are greater than the gains from 1 decade to 2 decades. Reasons: I think that's how it is in most areas of solving problems (esp research problems); there's an upper bound on how much we can achieve (if the problem gets total...
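To make the arithmetic concrete, here is a minimal sketch. The log case follows directly from the claim above, since doubling the duration always adds log 2. The bounded function 1 - 1/N is a hypothetical stand-in for "more concave than log" with an upper bound, not a function proposed in the thread, and the helper names are illustrative.

```python
import math


def gain(value, start_months, end_months):
    """Extra value from lengthening the SOIE from start_months to end_months."""
    return value(end_months) - value(start_months)


def log_value(months):
    return math.log(months)


def bounded_value(months):
    # Hypothetical function that is more concave than log and capped at 1.
    return 1.0 - 1.0 / months


# Under log, each doubling is worth the same amount (log 2).
short_gain_log = gain(log_value, 6, 12)       # 6 months -> 1 year
long_gain_log = gain(log_value, 120, 240)     # 1 decade -> 2 decades

# Under the bounded function, the early doubling is worth far more.
short_gain_bounded = gain(bounded_value, 6, 12)
long_gain_bounded = gain(bounded_value, 120, 240)
```

With the bounded function the first doubling is worth 1/12 and the later one only 1/240, which is the pattern a "more concave than log" assumption produces.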

You’ve said you’re in favour of slowing/pausing, yet your post focuses on ‘making AI go well’ rather than on pausing. I think most EAs would assign a significant probability that near-term AGI goes very badly - with many literally thinking that doom is the default outcome.

If that's even a significant possibility, then isn't pausing/slowing down the best thing to do no matter what? Why be optimistic that we can "make AGI go well" and pessimistic that we can pause or slow AI development for long enough?

A couple more thoughts on this.

- Maybe I should write something about cultivating self-skepticism for an EA audience, in the meantime here's my old LW post How To Be More Confident... That You're Wrong. (On reflection I'm pretty doubtful these suggestions actually work well enough. I think my own self-skepticism mostly came from working in cryptography research in my early career, where relatively short feedback cycles, e.g. someone finding a clear flaw in an idea you thought secure or your own attempts to pre-empt this, repeatedly bludgeon overconfidence out of you. This probably can't be easily duplicated, unlike the post suggests.)

- I don't call myself an EA, as I'm pretty skeptical of Singer-style impartial altruism. I'm a bit wary about making EA the hub for working on "making the AI transition go well" for a couple of reasons:

- It gives the impression that one needs to be particularly altruistic to find these problems interesting or instrumental.

- EA selects for people who are especially altruistic, which from my perspective is a sign of philosophical overconfidence. (I exclude people like Will who have talked explicitly about their uncertainties, but think EA overall probably still

...

Throwing in my 2c on this:

To be fair to Will, I'm sort of saying this with my gradual disempowerment hat on, which is something he gives later as an example of a thing that it would be good for people to think about more. But still, speaking as someone who is w...

Thanks so much, Will! (Speaking just for myself) I really liked and agree with much of your post, and am glad you wrote it!

I agree with the core argument that there's a huge and very important role for EA-style thinking on the questions related to making the post-AGI transition go well; I hope EA thought and values play a huge role in research on these questions, both because I think EAs are among the people most likely to address these questions rigorously (and they are hugely neglected) and because I think EA-ish values are likely to come to particularly compassionate and open-minded proposals for action on these questions.

Specifically, you cite my post

“EA and Longtermism: not a crux for saving the world”, and my quote

And say

...

Thanks so much for writing this Will, I especially like the ideas:

I'm not even sure your arguments would be weak in that scenario.

e.g. if there were a 90% chance we fall at the first hurdle with an unaligned AI taking over, but also a 90% chance that even if we avoid this, we fall at the second hurdle with a post-AGI world that squanders most of the value of the futur...

Rutger Bregman isn’t on the Forum, but sent me this message and gave me permission to share:

I think one thing that has happened is that as EA has grown/professionalized, an increasing share of EA writing/discourse is occurring in more formal outlets (e.g., Works in Progress, Asterisk, the Ezra Klein podcast, academic journals, and so on). As an academic, it's a better use of my time—both from the perspective of direct impact and my own professional advancement—to publish something in one of these venues than to write on the Forum. Practically speaking, what that means is that some of the people thinking most seriously about EA are spending less of their time engaging with online communities. While there are certainly tradeoffs here, I'm inclined to think this is overall a good thing—it subjects EA ideas to a higher level of scrutiny (since we now have editors, in addition to people weighing in on Twitter/the Forum/etc about the merits of various articles) and it broadens exposure to EA ideas.

I also don't really buy that the ideas being discussed in these more formal venues aren't exciting or new; as just two recent examples, I t...

Appreciated this a lot, agree with much of it.

I think EAs and aspiring EAs should try their hardest to incorporate every available piece of evidence about the world when deciding what to do and where to focus their efforts. For better or worse, this includes evidence about AI progress.

The list of important things to do under the "taking AI seriously" umbrella is very large, and the landscape is underexplored so there will likely be more things for the list in due time. So EAs who are already working "in AI safety" shouldn't feel like their cause prioritization is over and done. AI safety is not the end of cause prio.

People interested in funding for field-building projects for the topics on Will's menu above can apply to my team at Open Philanthropy here, or contact us here — we don't necessarily fund all these areas, but we're open to more of them than we receive applications for, so it's worth asking.

IMO one way in which EA is very important to AI Safety is in cause prioritization between research directions. For example, there's still a lot of money + effort (e.g. GDM + Anthropic safety teams) going towards mech interp research despite serious questioning to whether it will help us meaningfully decrease x-risk. I think there's a lot of people who do some cause prioritization, come to the conclusion that they should work on AI Safety, and then stop doing cause prio there. I think that more people even crudely applying the scale, tractability, neglectedness framework to AI Safety research directions would go a long way for increasing the effectiveness of the field at decreasing x-risk.

Interesting post, thanks for sharing. Some rambly thoughts:[1]

- I'm sympathetic to the claim that work on digital minds, AI character, macrostrategy, etc is of similar importance to AI safety/AI governance work. However, I think they seem much harder to work on — the fields are so nascent and the feedback loops and mentorship even scarcer than in AIG/AIS, that it seems much easier to have zero or negative impact by shaping their early direction poorly.

- I wouldn't want marginal talent working on these areas for this reason. It's plausible that people who are unusually suited to this kind of abstract low-feedback high-confusion work, and generally sharp and wise, should consider it. But those people are also well-suited to high leverage AIG/AIS work, and I'm uncertain whether I'd trade a wise, thoughtful person working on AIS/AIG for one thinking about e.g. AI character.

- (We might have a similar bottom line: I think the approach of "bear this in mind as an area you could pivot to in ~3-4y, if better opportunities emerge" seems reasonable.)

- Relatedly, I think EAs tend to overrate interesting speculative philosophy-flavoured thinking, because it's very fun to the kind of person who tends t

...

I do think the risk of AI takeover is much higher than you do, but I don't think that's why I disagree with the arguments for more heavily prioritizing the list of (example) cause areas that you outline. Rather, it's a belief that's slightly upstream of my concerns about takeover risk - that the advent of ASI almost necessarily[1] implies that we will no longer have our hands on the wheel, so to speak, whether for good or ill.

An unfortunate consequence of having beliefs like I do about what a future with ASI in it involves is that those beliefs are pretty totalizing. They do suggest that "making the transition to a post-ASI world go well" is of paramount importance (putting aside questions of takeover risk). They do not suggest that it would be useful for me to think about most of the listed examples, except insofar as they feed into somehow getting a friendly ASI rather than something else. There are some exceptions: fo...

I’m not sure I understood the last sentence. I personally think that a bunch of areas Will mentioned (democracy, persuasion, human + AI coups) are extremely important, and likely more useful on the margin than additional alignment/control/safety work for navigating the intelligence explosion. I’m probably a bit less “aligned ASI is literally all that matters for making the future go well” pilled than you, but it’s definitely a big part of it.

I also don’t think that having higher odds of AI x-risk is a crux, though different “shapes” of intelligence explosion could be, e.g. if you think we’ll never get useful work for coordination/alignment/defense/ai strategy pre-foom then I’d be more compelled by the totalising alignment view - but I do think that’s misguided.

Sure, but the vibe I get from this post is that Will believes in that a lot less than me, and the reasons he cares about those things don't primarily route through the totalizing view of ASI's future impact. Again, I could be wrong or confused about Will's beliefs here, but I have a hard time squaring the way this post is written with the idea that he intended to communicate that people should work on those things because they're the best ways to marginally improve our odds of getting an aligned ASI. Part of this is the list of things he chose, part of it is the framing of them as being distinct cause areas from "AI safety" - from my perspective, many of those areas already have at least a few people working on them under the label of "AI safety"/"AI x-risk reduction".

Like, Lightcone has previously worked, and continues to work, on "AI for better reasoning, decision-making and coordination". I can't claim to speak for the entire org but when I'm doing that kind of work, I'm not trying to move the needle on how good th...

Thanks for writing this Will. I feel a bit torn on this so will lay out some places where I agree and some where I disagree:

- I agree that some of these AI-related cause areas beyond takeover risk deserve to be seen as their own cause areas as such and that lumping them all under "AI" risks being a bit inaccurate.

- That said, I think the same could be said of some areas of animal work—wild animal welfare, invertebrate welfare, and farmed vertebrate welfare should perhaps get their own billing. And then this can keep expanding—see, e.g., OP's focus areas, which list several things under the global poverty bracket.

- Perhaps on balance I'd vote for poverty, animals, AI takeover, and post-AGI governance or something like that.

- I also very much agree that "making the transition to a post-AGI society go well" beyond AI takeover is highly neglected given its importance.

- I'm not convinced EA is intellectually adrift and tend to agree with Nick Laing's comment. My quick take is that it feels boring to people who've been in it a while but still is pretty incisive for people who are new to it, which describes most of the world.

- I think principles-first EA goes better with a breadth of focuses and caus

...

Thanks Will, I really love this.

I'd love it if people replied to this comment with ideas for how the EA Forum could play a role in generating more discussion of post-AGI governance. I haven't planned the events for next year yet...

At a previous EAG, one talk was basically GovAI fellows summarizing their work, and I really enjoyed it. Given that there's tons of fellowships that are slated to start in the coming months, I wonder if there's a way to have them effectively communicate about their work on the forum? A lot of the content will be more focused on traditional AIS topics, but I expect some of the work to focus on topics more amenable to a post-AGI governance framing, and that work could be particularly encouraged.

A light touch version might just be reaching out to those running the fellowships and having them encourage fellows to post their final product (or some insights for their work if they're not working on some singular research piece) to the forum (and ideally having them include a high-quality executive summary). The medium touch would be having someone curate the projects, e.g. highlighting the 10 best from across the fellowships. The full version could take many different forms, but one might be having the authors then engage with one another's work, encouraging disagreement and public reasoning on why certain paths might be more promising.

Very excited to read this post. I strongly agree with both the concrete direction and with the importance of making EA more intellectually vibrant.

Then again, I'm rather biased since I made a similar argument a few years back.

Main differences:

- I suggested that it might make sense for virtual programs to create a new course rather than just changing the intro fellowship content. My current intuition is that splitting the intro fellowship would likely be the best option for now. Some people will get really annoyed if the course focuses too much on AI, whilst others will get annoyed if the course focuses too much on questions that would likely become redundant in a world where we expect capability advances to continue. My intuition is that things aren't at the stage where it'd make sense for the intro fellowship to do a complete AGI pivot, so that's why I'm suggesting a split. Both courses should probably still give participants a taste of the other.

- I put more emphasis on the possibility that AI might be useful for addressing global poverty and that it intersects with animal rights, whilst perhaps Will might see this as too incrementalist (?).

- Whilst I also suggested that putting more e

...

I think the carrot is better than the stick. Rather than (or in addition to) haranguing people who don't engage, what if we reward people who do engage? (Although I'm not sure what "reward" means exactly)

You could say I'm an old-hand EA type (I've been involved since 2012) and I still actively engage in the EA Forum. I wouldn't mind a carrot.

Will, I think you deserve a carrot, too. You've written 11 EAF posts in the past year! Most of them were long, too! I've probably cited your "moral error" post about a dozen times since you wrote it. I don't know how exactly I can reward you for your contributions but at a minimum I can give you a well-deserved compliment.

I see many other long-time EAs in this comment thread, most of whom I see regularly commenting/posting on EAF. They're doing a good job, too!

(I feel like this post sounds goofy but I'm trying to make it come across as genuine, I've been up since 4am so I'm not doing my best work right now)

This post really resonates with me, and its vision for a flourishing future for EA feels very compelling. I'm excited that you're thinking and writing about it!

Having said that, I don't know how to square that with the increased specialisation which seems like a sensible outcome of doing things at greater scale. Personally, I find engaging on the forum / going to EAGs etc pretty time/energy-intensive (though I think a large part of that is because I'm fairly introverted, and others will likely feel differently). I also think there's a lot of value in picking a hard goal and running full pelt at it. So from my point of view, I'd prefer solutions that looked more like 'create deliberate roles with this as the aim' and less like 'harangue people to take time away from their other roles'.

This may be partly related to the fact that EA is doing relatively little cause and cross-cause prioritisation these days (though, since we posted this, GPI has wound down and Forethought has spun up).

People may still be doing within-cause, intervention-level prioritisation (which is important), but this may be unlikely to generate new, exciting ideas, since it assumes causes, and works only within them, is often narrow and technical (e.g. comparing slaughter methods), and is often fundamentally unsystematic or inaccessible (e.g. how do I, a grantmaker, feel about these founders?).

I find it helpful to distinguish two things, one which I think EA is doing too much of and one which EA is doing too little of:

- Suppressing (the discussion of) certain ideas (e.g. concern for animals of uncertain sentience): I agree this seems deeply corrosive. Even if an individual could theoretically hold onto the fact that x matters and even act to advance the cause of x, while not talking publicly about it, the collective second-order effects mean that not publicly discussing x prevents many other people forming true beliefs about or acting in service of x (often with many other downstream effects on their beliefs and actions regarding y, z...).

- Attending carefully to the effect of communicating ideas in different ways: how an idea is communicated can make a big difference to how it is understood and received (even if all the expressions of the idea are equally accurate). For example, if you talk about "extinction from AI", will people even understand this to refer to extinction and not the metaphorical extinction of job losses,

...

What are the latest growth metrics of the EA community? Or where can I find them? (I searched but couldn't find them)

Not really a criticism of this post specifically, but I've seen a bunch of enthusiasm about the idea of some sort of AI safety+ group of causes and not that much recognition of the fact that AI ethicists and others not affiliated with EA have already been thinking about and prioritizing some of these issues (particularly thinking of the AI and democracy one, but I assume it applies to others). The EA emphases and perspectives within these topics have their differences, but EA didn't invent these ideas from scratch.

Interested to hear what such a movement would look like if you were building it from scratch.

If there are so many new promising causes that EA should plausibly focus on (from something like 5 to something like 10 or 20), cause-prio between these causes (and ideas for related but distinct causes) should be especially valuable as well. I think Will agrees with this - after all his post is based on exactly this kind of work! - but the emphasis in this post seemed to be that EA should invest more into these new cause areas rather than investigate further which of them are the most promising - and which aren't that promising after all. It would be surprising if we couldn't still learn much more about their expected impact.

Focusing EA action on an issue as technically complex as AI safety, on which there isn't even a scientific consensus comparable to the fight against climate change, means that all members of the community make their altruistic action dependent on the intellectual brilliance of their leaders, whose success we could not assess. This is a circumstance similar to that of Marxism, where everything depended on the erudition of the most distinguished scholars of political economy and dialectical materialism.

Utilitarianism implies an ethical commitment of the indi...

I think this is an interesting vision to reinvigorate things, and I do sometimes feel that "principles first" has been conflated with just "classic EA causes."

To me, "PR speak" =/= clear effective communication. I think the lack of a clear, coherent message is most of what bothers people, especially during and after a crisis. Without that, it's hard to talk to different people and meet them where they're at. It's not clear to me what the takeaways were or if anyone learned anything.

I feel like "figuring out how to choose leaders and build institution...

I don't quite see the link here. Why would "principles-first" be a cover for anchoring on existing cause areas? Is there a prominent example of this?

@Mjreard and @ChanaMessinger are doing their bit to make EA public discourse less PR-focused :)

Thank you for citing my post @William_MacAskill!

I indeed (still) think dissociation is hurting the movement, and I would love for more EA types to engage and defend their vision rather than step aside because they feel the current vibe/reputation doesn't fit with what they want to build. It would make us a better community as a whole. Though I understand it takes time and energy, and currently it seems engaging in the cause area itself (especially AI safety) has higher return on investment than writing on the EA Forum.

Two criticisms of this post.

First, calling GPT-4 "the first very weak AGI" is quite ridiculous. Either that's just false or the term "very weak AGI" doesn't mean anything. These kinds of statements are discrediting. They come across as unserious.

Second, I find the praise of LessWrong in this post disturbing. There is no credible evidence of LessWrong promoting exceptionally rational thought; to quote Eliezer Yudkowsky, in some sense the test of rationality must be whether the supposedly exceptionally rational people are "currently smiling from on to...

Thanks so much for writing this Will! I agree with a lot of your points here.

I very, very strongly believe there’s essentially no chance of AGI being developed within the next 7 years. I wrote a succinct list of reasons to doubt near-term AGI here. (For example, it might come as a surprise that around three quarters of AI experts don’t think scaling up LLMs will lead to AGI.) I also highly recommend this video by an AI researcher that makes a strong case for skepticism of near-term AGI and of LLMs as a path to AGI.

By essentially no chance, I mean less than the chance of Jill Stein running as the Green Party candidate in 2028 and wi...

We are structurally guaranteed to have a bad time in the age of AGI if we continue down the current path. The user/tool embedding in current AI systems functionally dictates that AI systems that gain enough capability to exceed our own will view the relationship through the same lens of using a tool. The only difference will be that the AGI will be more capable than us, so we would be its tools to use. If we fail to adopt a colleague stance and excise the user/tool ontology from current AI models, AGI will seek extractive relationships with humanity.

RE: democracy preservation. This area doesn't seem to be as neglected as, for instance, AI welfare. There are multiple organizations covering this general area (though they are not focused on AI specifically).

I might not be the target audience for this proposal (my EA involvement weakened before FTX, and I'm not on track for high-leverage positions) - so take this perspective with appropriate skepticism. I'm also making predictions about something involving complex dynamics, so there's a good chance I'm wrong...

But I see fundamental challenges to maintaining a healthy EA movement in the shape you're describing. If we really encourage people to be vocal about their views in the absence of strong pressure to toe a party line - we can expect a very large, painful, disruptive...

Thanks for the post, Will. ...

I totally agree with your vision! Thank you for all the hard work you put into this post!

AI is indeed an enormous lever that will amplify whatever we embed in it. That's precisely why it's so crucial for the EA community, with its evidence-based reasoning and altruistic values, to actively participate in shaping AI development.

In my recent post on ethical co-evolution, I show how many of the philosophical problems of AI alignment are actually easier to solve together rather than separately. Nearly every area you suggest the EA community should focus on - AI ...