William_MacAskill

Okay, looking at the spectrum again, it still seems to me like I've labelled them correctly? Maybe I'm missing something. It's optimistic if we can retain the knowledge of how to align AGI, because then we can just use that knowledge later and we don't face the same magnitude of risk from misaligned AI.

I agree with this. One way of seeing that is to ask how many doublings of energy consumption civilisation can have before it needs to move beyond the solar system. The answer is about 40 doublings, which, depending on your views on just how fast explosive industrial expansion goes, could be a pretty long time, e.g. decades.
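As a rough sanity check on the "about 40 doublings" figure, here is a minimal sketch. The two power figures are round-number assumptions of mine (roughly 20 TW for present-day world energy use, and roughly 3.8e26 W for the Sun's total output), and the doubling times are purely hypothetical; none of these numbers come from the comment itself.

```python
import math

# Assumed round figures, not taken from the comment above.
CURRENT_POWER_W = 2e13       # ~20 TW: rough present-day world energy use
SOLAR_LUMINOSITY_W = 3.8e26  # approximate total power output of the Sun


def doublings_until(limit_w: float, start_w: float) -> float:
    """Number of doublings of start_w needed to reach limit_w."""
    return math.log2(limit_w / start_w)


def years_to_limit(doublings: float, doubling_time_years: float) -> float:
    """Time taken for that many doublings at a constant doubling time."""
    return doublings * doubling_time_years


n = doublings_until(SOLAR_LUMINOSITY_W, CURRENT_POWER_W)
print(f"Doublings to use the Sun's full output: {n:.1f}")

# Hypothetical doubling times, in years, to show the sensitivity.
for doubling_time in (0.5, 1.0, 3.0):
    years = years_to_limit(n, doubling_time)
    print(f"  doubling every {doubling_time} years -> about {years:.0f} years")
```

Under these assumptions the count comes out in the low-to-mid 40s, in line with "about 40", and the time to hit the limit scales linearly with whatever doubling time you think explosive industrial expansion would have.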

I do think that non-existential-level catastrophes are a big deal even despite the rerun risk consideration, because I expect the civilisation that comes back from such a catastrophe to be on a worse values trajectory than the one we have today. In particular, the world today is unusually democratic and liberal, and I expect a re-roll of history to result in less democracy than we have today at the current technological level. However, other people have pushed me on that, and I don't feel like the case here is very strong. There are also obvious reasons why one might be biased towards having that view.

In contrast, the problem of having to rerun the time of perils is very crisp. It doesn't seem to me like a disputable upshot at the moment, which puts it in a different category of consideration at least — one that everybody should be on board with.

I'm also genuinely unsure whether a non-existential-level catastrophe increases or decreases the chance of future existential-level catastrophes. One argument that people have made, and that I don't put much stock in, is that future generations after the catastrophe would remember it and therefore be more likely to take action to reduce future catastrophes. I don't find that compelling because I don't think that the Spanish flu made us more prepared against Covid-19, for example, let alone that the Plague of Justinian did. However, I'm not seeing other strong arguments in this vein, either.

Thanks, that's a good catch. Really, in the simple model the relevant point in time for the first run should be when the alignment challenge has been solved, even for superintelligence. But that's before "reasonably good global governance".

Of course, there's an issue that this is trying to model alignment as a binary thing for simplicity, even though really if a catastrophe came when half of the alignment challenge had been solved, that would still be a really big deal for similar reasons to the paper.

One additional comment is that this sort of "concepts moving around" issue is one of the things that I've found most annoying about AI, and it happens quite a lot. You need to try and uproot these issues from the text, and this was a case of me missing it.

The second way in which this post is an experiment is that it's an example of what I've been calling AI-enhanced writing. The experiment here is to see how much more productive I can be in the research and writing process by relying very heavily on AI assistance, trying to use AI rather than myself wherever I can possibly do so. In this case, I went from having the basic idea to having this draft in about a day of work.

I'd be very interested in people's comments on how apparent it is that AI was used so extensively in drafting this piece — in particular if there are examples of AI slop that you can find in the text and that I missed.

The first way in which this post is an experiment is that it's work-in-progress that I'm presenting at a Forethought Research progress meeting. The experiment is just to publish it as a draft and then have the comments that I would normally receive as Google Doc comments on this forum post instead. The hope is that, by doing this, more people can get up to speed with Forethought research earlier than they would have, and we can also get more feedback and thoughts at an earlier stage from a wider diversity of people.

I'd welcome takes from Forumites on how valuable or not this was.

I, of course, agree!

One additional point, as I'm sure you know, is that potentially you can also affect P(things go really well | AI takeover). And actions to increase ΔP(things go really well | AI takeover) might be quite similar to actions that increase ΔP(things go really well | no AI takeover). If so, that's an additional argument for those actions compared to affecting ΔP(no AI takeover).

Re the formal breakdown, people sometimes miss the BF supplement here which goes into this in a bit more depth. And here's an excerpt from a forthcoming paper, "Beyond Existential Risk", in the context of more precisely defining the "Maxipok" principle. What it gives is very similar to your breakdown, and you might find some of the terms in here useful (apologies that some of the formatting is messed up):

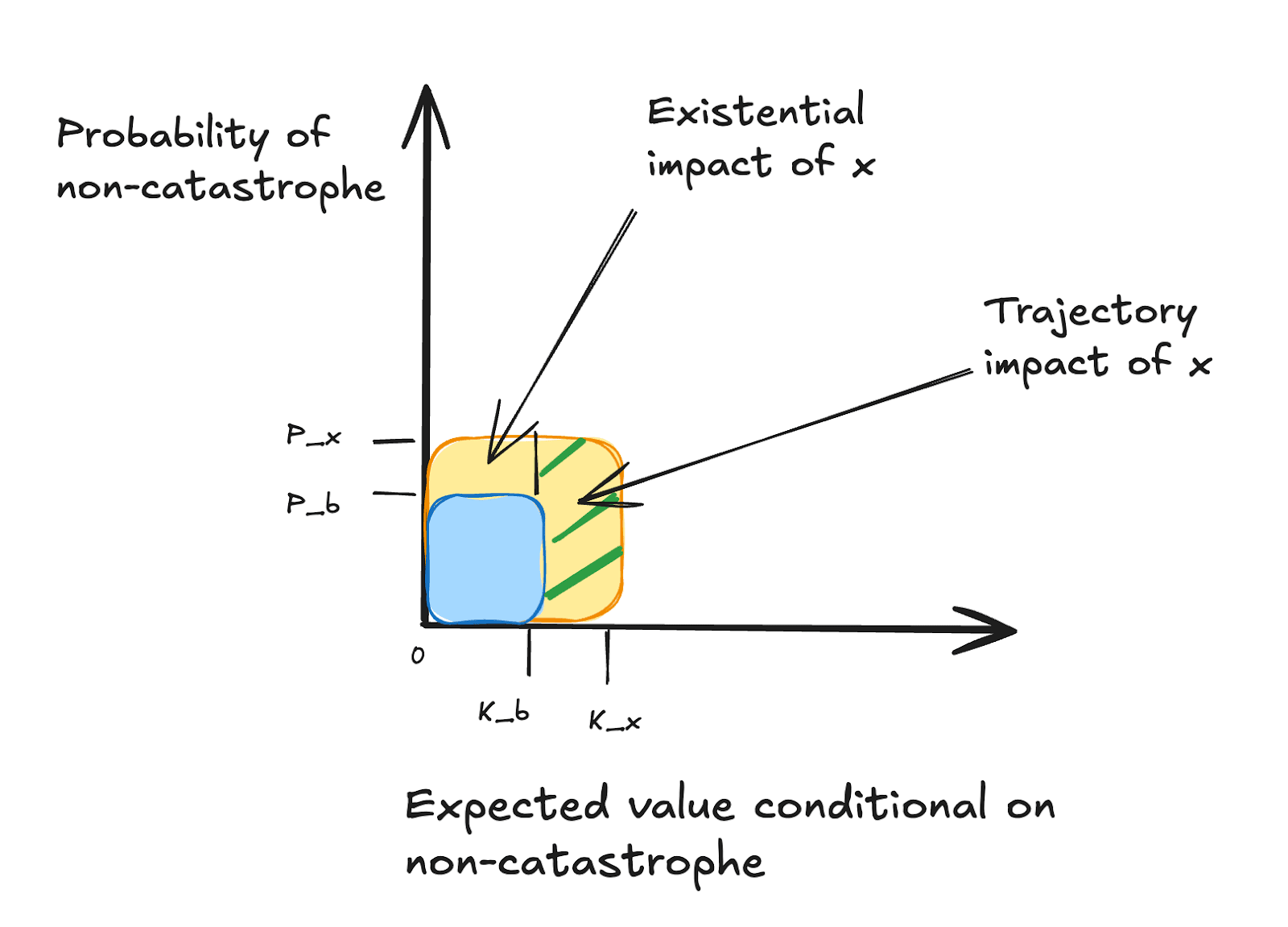

"An action $x$’s overall impact ($\Delta EV_x$) is its increase in expected value relative to baseline. We’ll let $C$ refer to the state of existential catastrophe, and $b$ refer to the baseline action. We’ll define, for any action $x$: $P_x = P[\neg C \mid x]$ and $K_x = E[V \mid \neg C, x]$. We can then break overall impact down as follows:

$$\Delta EV_x = (P_x - P_b) K_b + P_x (K_x - K_b)$$

We call $(P_x - P_b) K_b$ the action’s existential impact and $P_x (K_x - K_b)$ the action’s trajectory impact. An action’s existential impact is the portion of its expected value (relative to baseline) that comes from changing the probability of existential catastrophe; an action’s trajectory impact is the portion of its expected value that comes from changing the value of the world conditional on no existential catastrophe occurring.

We can illustrate this graphically, where the areas in the graph represent overall expected value, relative to a scenario with a guarantee of catastrophe:

With these in hand, we can then define:

Maxipok (precisified): In the decision situations that are highest-stakes with respect to the longterm future, if an action is near‑best on overall impact, then it is close-to-near‑best on existential impact.

[1] Here’s the derivation. Given the law of total expectation:

$$E[V \mid x] = P(\neg C \mid x)\,E[V \mid \neg C, x] + P(C \mid x)\,E[V \mid C, x]$$

To simplify things (in a way that doesn’t affect our overall argument, and bearing in mind that the “0” is arbitrary), we assume that $E[V \mid C, x] = 0$, for all $x$, so:

$$E[V \mid x] = P(\neg C \mid x)\,E[V \mid \neg C, x]$$

And, by our definition of the terms:

$$P(\neg C \mid x)\,E[V \mid \neg C, x] = P_x K_x$$

So:

$$\Delta EV_x = E[V \mid x] - E[V \mid b] = P_x K_x - P_b K_b$$

Then adding $(P_x K_b - P_x K_b)$ to this and rearranging gives us:

$$\Delta EV_x = (P_x - P_b) K_b + P_x (K_x - K_b)$$"
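To make the breakdown in the excerpt concrete, here is a small illustrative sketch. The `Action` class, the function names, and all the numbers are hypothetical choices of mine rather than anything from the paper; the code only assumes, as the excerpt does, that value conditional on catastrophe is set to 0.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    p_no_catastrophe: float  # P_x = P[not C | x]
    value_if_safe: float     # K_x = E[V | not C, x]


def expected_value(a: Action) -> float:
    """E[V | x] = P_x * K_x, given E[V | C, x] = 0."""
    return a.p_no_catastrophe * a.value_if_safe


def existential_impact(x: Action, b: Action) -> float:
    """(P_x - P_b) * K_b"""
    return (x.p_no_catastrophe - b.p_no_catastrophe) * b.value_if_safe


def trajectory_impact(x: Action, b: Action) -> float:
    """P_x * (K_x - K_b)"""
    return x.p_no_catastrophe * (x.value_if_safe - b.value_if_safe)


def overall_impact(x: Action, b: Action) -> float:
    """Delta EV_x = E[V | x] - E[V | b]"""
    return expected_value(x) - expected_value(b)


# Made-up numbers purely for illustration.
baseline = Action(p_no_catastrophe=0.80, value_if_safe=100.0)
candidates = {
    "reduce catastrophe risk": Action(0.85, 100.0),
    "improve the trajectory": Action(0.80, 110.0),
    "a bit of both": Action(0.82, 105.0),
}

for name, x in candidates.items():
    e = existential_impact(x, baseline)
    t = trajectory_impact(x, baseline)
    total = overall_impact(x, baseline)
    # The two components should always sum to the overall impact.
    assert math.isclose(e + t, total, abs_tol=1e-9)
    print(f"{name}: existential={e:.2f}, trajectory={t:.2f}, overall={total:.2f}")
```

The first candidate changes only the probability of avoiding catastrophe, so all of its impact is existential; the second changes only the value conditional on no catastrophe, so all of its impact is trajectory impact; the third splits across both terms.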

I agree - this is a great point. Thanks, Simon!

You are right that the magnitude of rerun risk from alignment should be lower than the probability of misaligned AI doom. However, worlds in which AI takeover is very likely and we can't change that, or in which it's very unlikely and we can't change that, aren't the interesting worlds from the perspective of taking action. (Owen and Fin have a post on this topic that should be coming out fairly soon.) So, if we're taking this consideration into account, it should also discount the value of work to reduce misalignment risk today.

(Another upshot: biorisk seems more like chance than uncertainty, so it becomes comparatively more important than you'd think before this consideration.)