Quick takes

EAs are trying to win the "attention arms race" by not playing. I think this could be a mistake.

- The founding ideas and culture of EA were created and “grew up” in the early 2010s, when online content consumption looked very different.

- We’ve overall underreacted to shifts in the landscape of where people get ideas and how they engage with them.

- As a result, we’ve fallen behind, and should consider making a push to bring our messaging and content delivery mechanisms in line with 2020s consumption.

- Also, EA culture is dispositionally calm, rational, and dry.

- Th

Hey y'all,

My TikTok algorithm recently presented me with this video about effective altruism, with over 100k likes and (TikTok claims) almost 1 million views. This isn't a ridiculous amount, but it's a pretty broad audience to reach with one video, and it's not a particularly kind framing of EA. As far as criticisms go, it's not the worst: it starts with Peter Singer's thought experiment and takes the moral imperative seriously as a concept, but it also frames several EA and EA-adjacent activities negatively, saying EA, quote, "is spending millions on hos...

5% of Americans identify as being on the far left

However, I would strongly wager that the majority of this sample does not believe in the three ideological points you outlined around authoritarianism, terrorist attacks, and Stalin & Mao (I think it is also quite unlikely that the people viewing the TikTok in question would believe these things either). Those latter beliefs are extremely fringe.

The Ezra Klein Show (one of my favourite podcasts) just released an episode with GiveWell CEO Elie Hassenfeld!

Idea for someone with a bit of free time:

While I don't have the bandwidth for this atm, someone should make a public (or private for, say, policy/reputation reasons) list of people working in (one or multiple of) the very neglected cause areas — e.g., digital minds (this is a good start), insect welfare, space governance, AI-enabled coups, and even AI safety (more for the second reason than others). Optional but nice-to-have(s): notes on what they’re working on, time contributed, background, sub-area, and the rough rate of growth in the field (you pr...
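If someone does pick this up, here is a minimal sketch of how such a list could be structured so the optional fields stay comparable across entries. Everything here is hypothetical: the `Contributor` record, the even FTE split for people in several areas, and reading "rate of growth" as a compound annual growth rate between two headcount snapshots are all assumptions, not part of the original suggestion.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Contributor:
    """One entry in a hypothetical directory of people in neglected cause areas."""
    name: str
    cause_areas: list[str]      # e.g. ["digital minds", "insect welfare"]
    sub_area: str = ""
    working_on: str = ""        # free-text notes on current work
    fte: float = 1.0            # time contributed, as a fraction of full-time
    background: str = ""


def fte_by_cause_area(people: list[Contributor]) -> dict[str, float]:
    """Total full-time equivalents per cause area.

    Assumption: someone listed under several areas has their FTE split evenly.
    """
    totals: dict[str, float] = defaultdict(float)
    for person in people:
        if not person.cause_areas:
            continue
        share = person.fte / len(person.cause_areas)
        for area in person.cause_areas:
            totals[area] += share
    return dict(totals)


def annual_growth_rate(fte_then: float, fte_now: float, years: float) -> float:
    """Compound annual growth rate of a field's FTE between two snapshots."""
    return (fte_now / fte_then) ** (1 / years) - 1
```

For example, a field that went from 10 to 40 FTE over two years would show `annual_growth_rate(10, 40, 2)` of 1.0, i.e. roughly doubling each year.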

I’ve seen a few people in the LessWrong community congratulate the community on predicting or preparing for covid-19 earlier than others, but I haven’t actually seen the evidence that the LessWrong community was particularly early on covid or gave particularly wise advice on what to do about it. I looked into this, and as far as I can tell, this self-congratulatory narrative is a complete myth.

Many people were worried about and preparing for covid in early 2020 before everything finally snowballed in the second week of March 2020. I remember it personally....

Following up a bit on this, @parconley. The second post in Zvi's covid-19 series is from 6pm Eastern on March 13, 2020. Let's remember where this is in the timeline. From my quick take above:

...On March 8, 2020, Italy put a quarter of its population under lockdown, then put the whole country on lockdown on March 10. On March 11, the World Health Organization declared covid-19 a global pandemic. (The same day, the NBA suspended the season and Tom Hanks publicly disclosed he had covid.) On March 12, Ohio closed its schools statewide. The U.S. declared a nationa

Reading Will's post about the future of EA (here) I think that there is an option also to "hang around and see what happens". It seems valuable to have multiple similar communities. For a while I was more involved in EA, then more in rationalism. I can imagine being more involved in EA again.

A better Earth would build a second Suez Canal, to ensure that we don't suffer trillions in damage if the first one gets stuck. Likewise, having two "think carefully about things" movements seems fine.

It hasn't always felt like this "two is better than one" feeling...

Well, the evidence is there if you're ever curious. You asked for it, and I gave it.

David Thorstad, who writes the Reflective Altruism blog, is a professional academic philosopher and, until recently, was a researcher at the Global Priorities Institute at Oxford. He was an editor of the recent Essays on Longtermism anthology published by Oxford University Press, which includes an essay co-authored by Will MacAskill, as well as essays by a few other people well-known in the effective altruism community and the LessWrong community. He has a number of publish...

Hi, does anyone from the US want to donation-swap with me to a German tax-deductible organization? I want to donate $2410 to the Berkeley Genomics Project via Manifund.

It's quite easy; I actually already did it with Printful + Shopify. I stalled out because (1) I realized it's much more confusing to deal with all the copyright stuff and stepping on toes (I don't want to be competing with EA itself or EA orgs, and didn't feel like coordinating with a bunch of people), and (2) you kind of get raked using an easy, fully automated stack. Not a big deal, but with shipping, hoodies end up being like 35-40 and T-shirts almost 20. I felt like given the size of EA we should probably just buy a heat press or embroidery machine since w...

A quick OpenAI o1-preview BOTEC for additional emissions from a sort of Leopold scenario (~2030), assuming energy is mostly provided by natural gas, since I was kinda curious. Not much time was spent on this, and I took the results at face value. I (of course?) buy that emissions don't matter in the short term, in a world where R&D is increasingly automated and scaled.

Phib: Say an additional 20% of US electricity was added to our power usage (e.g. for AI) over the next 6 years, and it was mostly natural gas. Also, that AI inference is used at an increasing rate, sa...
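The core arithmetic of a BOTEC like this can be sketched in a few lines, so the inputs are explicit and easy to swap. This is not the o1-preview output from the exchange above: the ~4,000 TWh/year US generation figure and the ~0.4 kg CO2/kWh gas emission factor are rough placeholder assumptions, and the linear six-year ramp is one hypothetical reading of "over the next 6 years".

```python
# Back-of-envelope: extra CO2 if US electricity demand grows by an extra
# fraction (e.g. for AI) and that increment is met by natural gas.
# Default inputs are rough placeholder assumptions, not figures from the post.

US_ANNUAL_GENERATION_TWH = 4000.0  # assumed: rough US annual generation
GAS_KG_CO2_PER_KWH = 0.4           # assumed: rough emission factor for gas power


def annual_extra_mt_co2(extra_fraction: float,
                        base_twh: float = US_ANNUAL_GENERATION_TWH,
                        kg_per_kwh: float = GAS_KG_CO2_PER_KWH) -> float:
    """Extra megatonnes of CO2 per year once the full increment is online."""
    extra_kwh = extra_fraction * base_twh * 1e9  # 1 TWh = 1e9 kWh
    return extra_kwh * kg_per_kwh / 1e9          # 1 Mt = 1e9 kg


def cumulative_mt_co2_linear_ramp(extra_fraction: float, years: int, **kw) -> float:
    """Cumulative Mt CO2 if the increment ramps up linearly,
    reaching the full extra_fraction in the final year."""
    full = annual_extra_mt_co2(extra_fraction, **kw)
    return sum(full * year / years for year in range(1, years + 1))


if __name__ == "__main__":
    print(f"{annual_extra_mt_co2(0.20):.0f} Mt CO2/yr at full ramp")
    print(f"{cumulative_mt_co2_linear_ramp(0.20, 6):.0f} Mt CO2 over 6 years")
```

With these placeholder inputs, the sketch gives about 320 Mt CO2 per year once the full 20% is online and about 1,120 Mt cumulatively over the six-year ramp; the result scales linearly with both assumed inputs.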

I live in Australia, and am interested in donating to the fundraising efforts of MIRI and Lightcone Infrastructure, to the tune of $2,000 USD for MIRI and $1,000 USD for Lightcone. Neither of these is tax-advantaged for me. Lightcone is tax-advantaged in the US, and MIRI is tax-advantaged in a few countries according to their website.

Anyone want to make a trade, where I donate the money to a tax-advantaged charity in Australia that you would otherwise donate to, and you make these donations? As I understand it, anything in Effective Altruism Austral...

Londoners!

@Gemma 🔸 is hosting a co-writing session this Sunday, for people who would like to write "Why I Donate" posts. The plan is to work in pomodoros and publish something during the session.

A semi-regular reminder that anybody who wants to join EA (or EA adjacent) online book clubs, I'm your guy.

Copying from a previous post:

...I run some online book clubs, some of which are explicitly EA and some of which are EA-adjacent: one on China as it relates to EA, one on professional development for EAs, and one on animal rights/welfare/advocacy. I don't like self-promoting, but I figure I should post this at least once on the EA Forum so that people can find it if they search for "book club" or "reading group." Details, including links for joining each

In Development, a global development-focused magazine founded by Lauren Gilbert, has just opened their first call for pitches. They are looking for 2,000-4,000-word stories about things happening in the developing world, and they're especially excited about pitches from people living in low- and middle-income countries. They pay $2,000 USD per article, and submissions close Jan 12. More info here

The mental health EA cause space should explore more experimental, scalable interventions, such as promoting anti-inflammatory diets at school/college cafeterias to reduce depression in young people, or using lighting design to reduce seasonal depression. What I've seen of this cause area so far seems focused on psychotherapy in low-income countries. I feel like we're missing some more out-of-the-box interventions here. Does anyone know of any relevant work along these lines?

A few points:

- There is still a lot of progress to be made in low-income country psychotherapy, which I think many EAs find counterintuitive. StrongMinds and Friendship Bench could both be about 5× cheaper, and have found ways to get substantially cheaper every year for the past half decade or so. At Kaya Guides, we’re exploring further improvements and should share more soon.

- Plausibly, you could double cost-effectiveness again if it were possible to replace human counsellors with AI in a way that maintained retention (the jury is still out here).

- The Hap

What are some resources for someone doing their own GPR (global priorities research) project that is longer than the couple of months recommended in this 80k article but shorter than a lifetime's worth of work as a GP researcher?

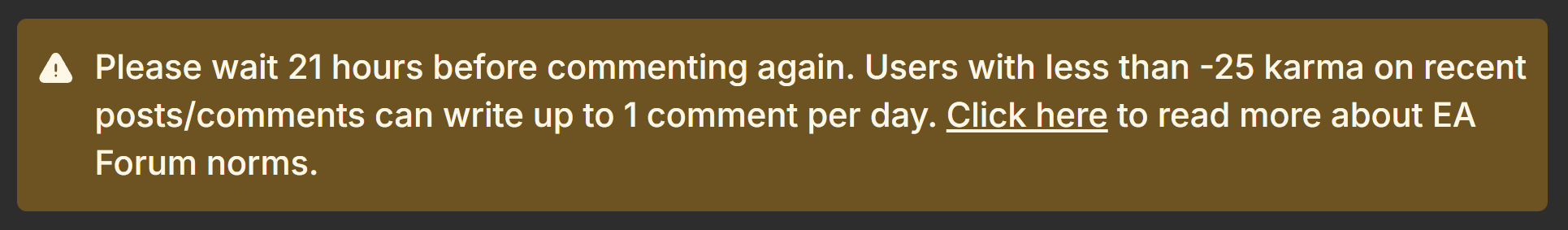

Rate limiting on the EA Forum is too strict. Given that people karma downvote because of disagreement, rather than because of quality or civility — or they judge quality and/or civility largely on the basis of what they agree or disagree with — there is a huge disincentive against expressing unpopular or controversial opinions (relative to the views of active EA Forum users, not necessarily relative to the general public or relevant expert communities) on certain topics.

This is a message I saw recently:

You aren't just rate limited for 24 hours once you fal...

- You're shooting the messenger. I'm not advocating for downvoting posts that smell of "the outgroup", just saying that this happens in most communities that are centered around an ideological or even methodological framework. It's a way you can be downvoted while still being correct, especially by the LEAST thoughtful 25% of EA Forum voters.

- Please read the quote from Claude more carefully. MacAskill is not an "anti-utilitarian" who thinks consequentialism is "fundamentally misguided", he's the moral uncertainty guy. The moral parliament usually recommends actions similar to consequentialism with side constraints in practice.

I probably won't engage more with this conversation.

Here are some quick takes on what you can do if you want to contribute to AI safety or governance (they may generalise, but no guarantees). Paraphrased from a longer talk I gave, transcript here.

- First, there’s still tons of alpha left in having good takes.

- (Matt Reardon originally said this to me and I was like, “what, no way”, but now I think he was right and this is still true – thanks Matt!)

- You might be surprised, because there are many people doing AI safety and governance work, but I think there’s still plenty of demand for good takes, and

EA Connect 2025: Personal Takeaways

Background

I'm Ondřej Kubů, a postdoctoral researcher in mathematical physics at ICMAT Madrid, working on integrable Hamiltonian systems. I've engaged with EA ideas since around 2020—initially through reading and podcasts, then ACX meetups, and from 2023 more regularly with Prague EA (now EA Madrid after moving here). I took the GWWC 10% pledge during the event.

My EA focus is longtermist, primarily AI risk. My mathematical background has led me to take seriously arguments that alignment of superintelligent AI may face fund...