TLDR

Longtermists usually focus on lowering existential risk, and in practice typically prioritise extinction risk. I argue that

- resource entropy (due to picking the low hanging fruit) between civilisations is inevitable, and might dramatically reduce p(flourishing) after a non-extinction catastrophe

- thinking about p(flourishing) requires longtermists to be more explicitly ambitious - not to settle for a civilisation that never leaves Earth

- this greater ambition ironically makes the case for moving longtermist cause priorities closer to the rest of society’s - though perhaps also closer to effective accelerationism

Quick warning: approximately half of this essay is in footnotes. You can skip them all without losing the core argument, but don't believe the forum's reading time estimate - or your lying scrollbar - if you're a fellow footnote-completionist.

Longtermists think too short-term

… about happy futures

The most ambitious form of the longtermist project is a grand vision of a future of human descendants living in some form around billions of stars, or more.[1] The longtermist movement is nominally concerned with maximising the probability of reaching that state, as well as the quality of life in it, but has generally emphasised the heuristic of minimising short term extinction risk. For example, Derek Parfit, the godfather of modern longtermism, famously claimed that the difference between 99% elimination of humanity and complete extinction would be ‘very much greater’ than the difference between 0% and 99% loss of life.[2]

In this essay I argue that longtermists should shift substantial focus away from extinction-level catastrophes, particularly though not exclusively towards sub-extinction catastrophes. To motivate this shift, let's start by clarifying what ‘success’ looks like by longtermist lights. Echoing Parfit, if we imagine three scenarios:

1. Extinction within the next few decades

2. Surviving the circa-600-million years until the Earth becomes uninhabitable and then going extinct

3. Becoming an interstellar species for much of the time until the universe becomes uninhabitable

… then the difference in value between 3 and 2 is very much greater than the difference between 2 and 1.[3]

Following this thought experiment, for this essay I define flourishing and success as follows

Flourishing: Becoming an interstellar species for much of the time until the universe becomes uninhabitable - or ‘becoming stably interstellar’, for short[4]

Success: Flourishing directly from a given state, without any civilisational catastrophe

… and about the path through less happy futures[5]

This focus means that when considering non-extinction global catastrophes we should look not only at prospects for ‘recovery’ but at the whole counterfactual difference in probability of eventual flourishing.[6] That is, rather than thinking about p(doom), a popular proxy measure, we should focus on the actual goal. So to assess the counterfactual value of some catastrophe, we need to consider the difference in p(flourishing):

$$\Delta p(\text{flourishing}) = p(\text{flourishing} \mid \text{no catastrophe}) - p(\text{flourishing} \mid \text{catastrophe})$$

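Alternatively, since from a longtermist perspective the cost of extinction can be approximately represented as the loss of our entire chance of flourishing, it can help to represent the cost of counterfactual catastrophes in somewhat DALY-analogous units of extinction:[7]

$$\text{cost in extinctions} = \frac{p(\text{flourishing} \mid \text{no catastrophe}) - p(\text{flourishing} \mid \text{catastrophe})}{p(\text{flourishing} \mid \text{no catastrophe})}$$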

Unfortunately, very little literature that examines prospects after non-extinction catastrophes has considered the question beyond the probability of eventual ‘recovery’. Recovery is rarely defined and certainly has no standard definition,[8] so I’ll propose and use the following definition:

Recovery: A civilisation regaining a level of technology up to but not beyond the technology to cause its own civilisational collapse

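Using this definition (and assuming that recovery is a prerequisite for flourishing), if we break down p(flourishing | catastrophe) as

$$p(\text{flourishing} \mid \text{catastrophe}) = p(\text{recovery} \mid \text{catastrophe}) \times p(\text{flourishing} \mid \text{recovery})$$

… then while there is a reasonably large literature relevant to p(recovery | catastrophe), very little literature - if any - has focused on p(flourishing | recovery).[9]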

I can only speculate as to why this disparity exists. My best guess is that people tend to assume that once we’ve recovered technological civilisation, we’ve both done the hard part and given an existence proof: since it can be done once, it can be done as many times as necessary. On these assumptions, if recovery is sufficiently likely, the probability of eventual success is largely unaltered after even major global catastrophes. But I believe this would be a recklessly optimistic view, and that this factor might be where most of the difference in probability of success lies.

The parable of the apple tree

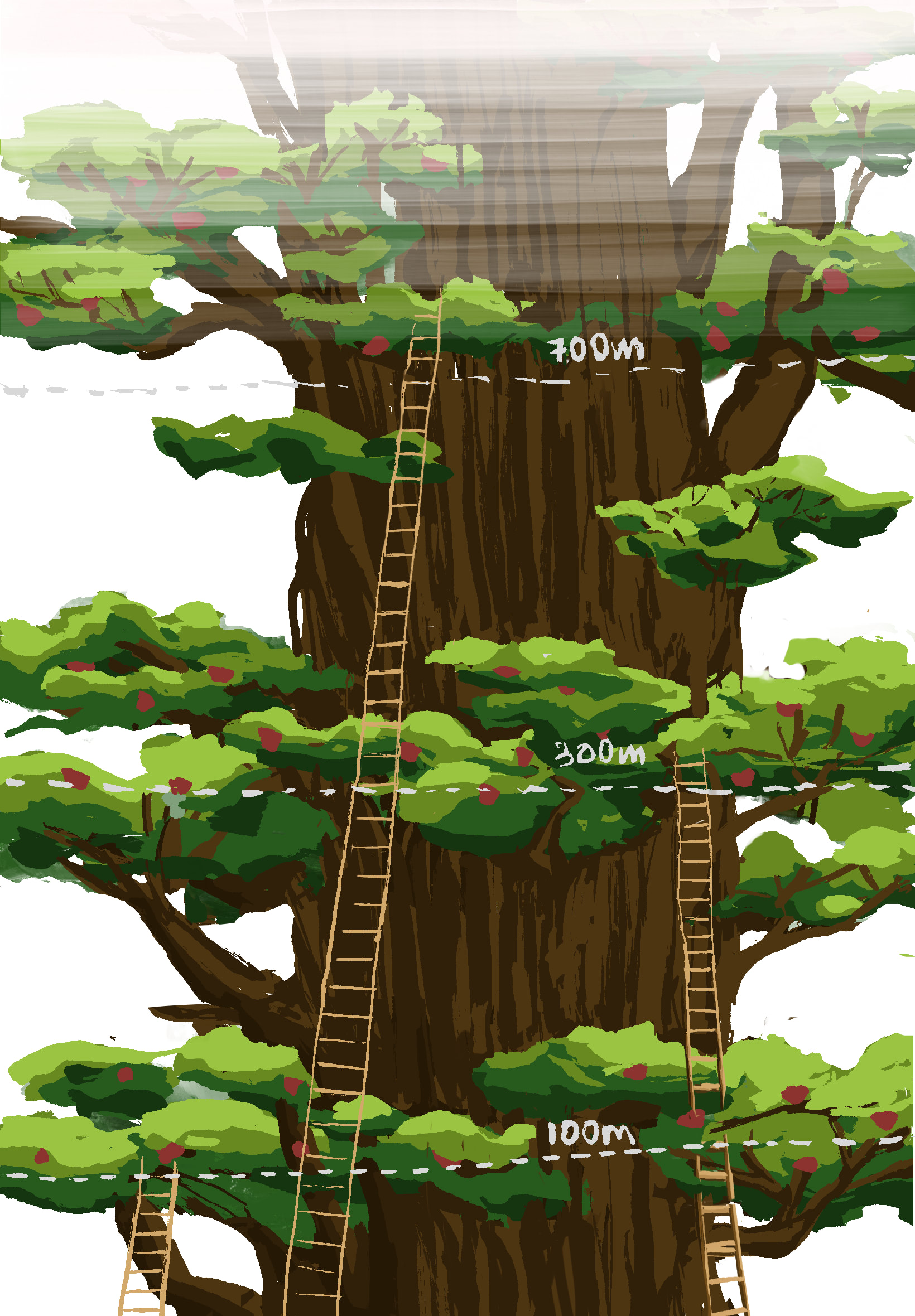

Illustration by Siao Si Looi

We can illustrate this by taking the common metaphor of low-hanging fruit more literally than usual, to give a parable for and toy model of our own civilisation.

Picture a civilisation comparable to ours in most respects, except that all its calories come from an unfathomably tall apple tree (with exceptionally calorific apples). Ascent is always possible, but new ladder technology makes it easier and less costly, with each new generation of ladder being taller than the last.

In this civilisation, it’s possible that this dynamic could go on indefinitely - perhaps even accelerating if the ladders ever reach the otherwise inaccessible forest canopy. But it’s clear that for any given length of ladder, the cost of ascending to a given branch is increasing. In particular, if this civilisation were ever to (literally) fall, and hence restart its ladder development, getting sufficient apples to feed a given population size would be far more expensive - with the difference greater each time it happened.

A simple equation for p(success)

To think about this quantitatively, let’s start by breaking down what ‘flourishing’ might entail. Since it’s defined as being interstellar, it almost certainly necessitates passing two milestones, in order:

1. Time of perils

Humanity gains the technology to cause its own civilisational collapse: one could define this as the possession of nuclear weapons, bioengineered pandemics, weaponised tool AI, asteroid redirection and possibly other weaponry;

2. Interstellar proliferation

Reaching some technological state far more advanced than that of the start of the time of perils, which enables the colonisation of other stars

By definition, reaching interstellar proliferation requires not deploying any civilisation-collapsing technologies while passing through the time of perils.[10]

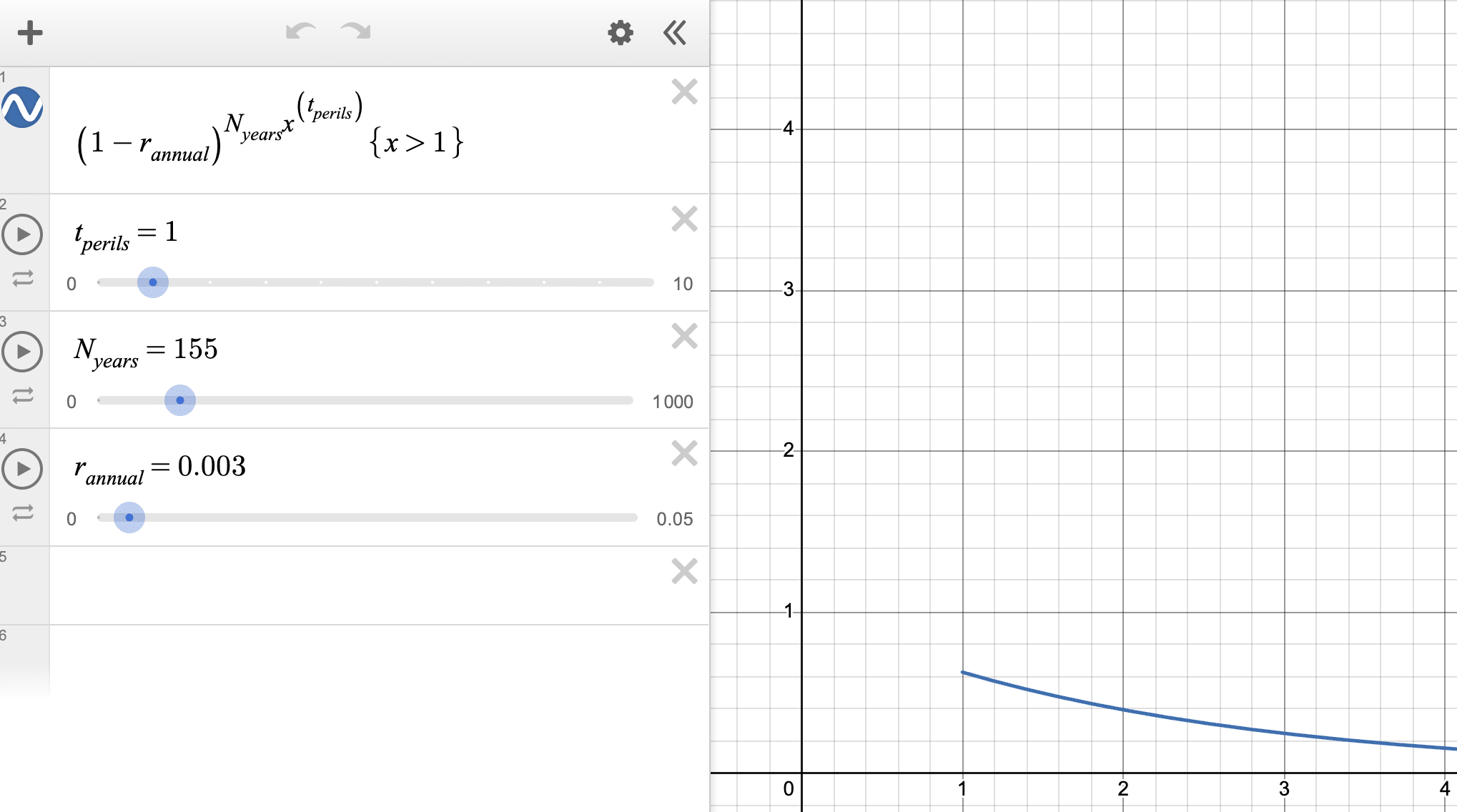

Since we have various data and estimates on discrete annual events, we might model the probability of directly reaching interstellar proliferation from our current time of perils as

$$p(\text{success}) = (1 - r_{\text{annual}})^{N_{\text{years}}}$$

where

- r_annual is an approximately constant (or at least usefully averageable) annual risk of deploying civilisation-collapsing technology; and

- N_years is the expected number of years it would take to reach interstellar proliferation from our time of perils[11]

… though while in some ways I treat these values as ‘constant’, on this model we would treat the effect of any relevant decision we might make as a counterfactual change to one of the values.

At this point we can plug in some actual estimates of these values to assess our prospects.

Disclaimer: none of the value estimates I make in this essay are financial advice, and hence I’ve relegated my working to footnotes, but I think it’s useful to give and explain my range of guesses for illustration purposes. Later in the essay I’ll link to an interactive graph where you can test the implications of your own best guesses.

We entered our current time of perils around 1945, with the detonation of the first nuclear bombs, and have so far survived 80 years without blowing ourselves up, so we can subtract those 80 from the top row of the following table to calculate its values:

| | 120-year perils[12] | 155-year perils[13] | 360-year perils[14] |
| --- | --- | --- | --- |
| 0.1% annual collapse risk[15] | 96% | 93% | 76% |
| 0.3% annual collapse risk[15] | 89% | 80% | 43% |
| 1.6% annual collapse risk[16] | 52% | 30% | 1.1% |

Table 1: Current p(success) on varying initial assumptions of r_annual and N_years
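For readers who want to check or vary these figures, here is a minimal Python sketch of the model described above, assuming a constant annual risk compounded over the years remaining in the time of perils. The function and variable names are my own illustrative choices, not part of any existing tool.

```python
# Sketch of the survival model: p(success) = (1 - r_annual) ** n_years.
# All names here are illustrative assumptions, not taken from any library.

YEARS_ALREADY_SURVIVED = 80  # 1945 to the time of writing, per the essay


def p_success(r_annual: float, n_years: float) -> float:
    """Probability of surviving n_years at a constant annual collapse risk."""
    return (1.0 - r_annual) ** n_years


annual_risks = [0.001, 0.003, 0.016]  # 0.1%, 0.3%, 1.6% per year
perils_lengths = [120, 155, 360]      # total length of the time of perils, in years

# Only the years still ahead of us count towards the current civilisation's risk.
table = {
    (r, n): p_success(r, n - YEARS_ALREADY_SURVIVED)
    for r in annual_risks
    for n in perils_lengths
}

for r in annual_risks:
    row = "  ".join(f"{table[(r, n)]:6.1%}" for n in perils_lengths)
    print(f"{r:.1%} annual risk: {row}")
```

Swapping in your own values for `annual_risks` and `perils_lengths` gives the equivalent grid for your own best guesses.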

Fruit-picking (or, scrumping from future generations)

Let’s now consider the challenges faced by post-collapse civilisations in their own times of perils.

The claim that many key resources are depleting is unlikely to be new to any longtermist - though it’s rarely framed as a major factor in longtermist priorities.[17] But, per the parable above, our civilisation progressively picks the lowest hanging apples from the tree, as will each civilisation after ours, and we should expect this process to make life exponentially harder for each future generation to successfully clear its own time of perils.

Some of these ‘apples’ are literally consumed in the process, such as fossil fuels, fissile materials, and beneficial aspects of the environment such as biodiversity, climate, and perhaps topsoil. Others, like phosphorus, copper, and freshwater can’t literally be destroyed or consumed.[18] But the general phenomenon is one of moving our pool of resources to ever higher entropy states.

Typical examples of such entropy include dispersion over the sea or land. In The Knowledge, Lewis Dartnell wryly describes modern agriculture as ‘an efficient pipeline for stripping nutrients from the land and flushing them into the ocean’. Meanwhile, metals corrode at a rate that means absent external input (i.e. consumption of a different set of resources), within perhaps 500 years much of the metal we’ve ever refined will have corroded into thinly spread particles.[19] On top of that, metal recycling loses material fast enough that most of any given feedstock would probably be irretrievable within a couple of centuries of industrial reuse.[20]

Other factors, like metal ships sinking, environmental change in formerly urban areas and perhaps nuclear war might accelerate the process, potentially eclipsing the importance of specific corrosion rates. For this essay, my argument is just that it’s a factor that longtermists shouldn’t ignore.

And the problem exacerbates itself. The slower pace of technological development caused by increased resource scarcity causes each civilisation to stay in the high-consumption time of perils longer, thus increasing the per-civilisation resource consumption.

For our purposes, we can operationalise the effect of resource attrition as multiplying the N_years it would take to get from one level of technology to the next by some value, N_friction, using our best guesses about our current rate as a basis. For simplicity, we might treat N_friction as a per-collapse constant. Then we can update our flourishing equation to be, for the kth civilisation

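kth civilisation’s p(success):

$$p(\text{success})_k = (1 - r_{\text{annual}})^{N_{\text{years}} \times N_{\text{friction}}^{\,k-1}}$$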

To go back to our apple tree model, we might imagine the first civilisation plucking all the apples within 100 metres of the ground. If N_friction is 2, the next civilisation would pluck all the apples within 300 metres of the ground, the next 700 metres - and so on, for any future civilisations.[21]
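The friction model can be sketched in a few lines of Python. This is a hedged illustration, assuming that the kth civilisation's time of perils is stretched by N_friction raised to the power k - 1, and that a post-collapse civilisation has to get through the whole of its time of perils rather than just the remainder. The function names are hypothetical.

```python
# Sketch of the per-civilisation model with resource friction.
# Assumption: civilisation k needs n_years * n_friction ** (k - 1) years
# to get through its time of perils. Names are illustrative only.


def p_success_kth(r_annual: float, n_years: float, n_friction: float, k: int) -> float:
    """p(success) for the kth civilisation, with civilisation 1 facing no friction."""
    effective_years = n_years * n_friction ** (k - 1)
    return (1.0 - r_annual) ** effective_years


def apples_cleared_up_to(first_height_m: float, n_friction: float, k: int) -> float:
    """Cumulative height of tree stripped once k civilisations have come and gone."""
    return sum(first_height_m * n_friction ** i for i in range(k))


# Apple-tree parable with N_friction = 2: each civilisation strips twice as much
# tree as the last, so the cleared height accumulates.
heights = [apples_cleared_up_to(100, 2, k) for k in (1, 2, 3)]
print(heights)

# Civilisation 2 with a sqrt(2) friction multiplier, 0.1% annual risk, 120-year perils.
second_civ = p_success_kth(0.001, 120, 2 ** 0.5, 2)
print(f"{second_civ:.0%}")
```

The design choice worth noticing is that friction sits in the exponent: a constant multiplier per collapse compounds with each further collapse, which is why the odds fall off so steeply.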

You can test the implications of your own opinions on this interactive graph:

The x-axis is different values of k_friction, and the y-axis is the probability of getting through the t_perils-th time of perils

As ever, I don’t want to get attached to specific estimates of the value of N_friction, and I think it’s extremely hard to put a sensible estimate on it even to within orders of magnitude - but it's important to try. So Tables 2-4 give prospects for various civilisations, comparing N_friction values in powers of 2 for comparability, and with supporting narratives for N_friction in footnotes. These narratives are roughly inspired by the difference in energy return on investments (EROI) from various sources - though I think a reasonable N_friction estimate could be substantially higher than even table 4's.

| | 120 years | 155 years | 360 years |
| --- | --- | --- | --- |
| 0.1% annual collapse risk | 84% | 80% | 60% |
| 0.3% annual collapse risk | 60% | 52% | 22% |
| 1.6% annual collapse risk | 6% | 3% | Effectively 0 |

Table 2: Civilisation 2’s prospects given a $\sqrt{2}$x (i.e. a 1.4x) entropy burden[22]

| | 120 years | 155 years | 360 years |
| --- | --- | --- | --- |
| 0.1% annual collapse risk | 62% | 54% | 24% |
| 0.3% annual collapse risk | 24% | 16% | 1% |
| 1.6% annual collapse risk | Effectively 0 | Effectively 0 | Effectively 0 |

Table 3: Civilisation 2’s prospects given a 4x entropy burden[23] or, equivalently,

Civilisation 5’s prospects given a 1.4x entropy burden (since $(\sqrt{2})^4 = 4$)

| | 120 years | 155 years | 360 years |
| --- | --- | --- | --- |
| 0.1% annual collapse risk | 15% | 8% | 0.3% |
| 0.3% annual collapse risk | 0.3% | Effectively 0 | Effectively 0 |
| 1.6% annual collapse risk | Effectively 0 | Effectively 0 | Effectively 0 |

Table 4: Civilisation 2’s prospects given a 16x entropy burden[24] or, equivalently,

Civilisation 3’s prospects given a 4x entropy burden or

Civilisation 9’s prospects given a 1.4x entropy burden (since $(\sqrt{2})^8 = 16$)

In summary

- A single civilisational collapse could be almost as bad as extinction: taking the top-left cell of Tables 1 and 4, which seem optimistic except for the high friction estimate, collapse would cut p(success) from 96% to 15%, equivalent (if we treat recovery as certain) to about 0.84 extinctions.

- On more modest friction assumptions but slightly more pessimistic risk/duration estimates, we might again have at most one more realistic chance at flourishing (and that with much reduced odds of success).

- Substantially higher values of N_friction rapidly drive the cost of even relatively ‘mild’ civilisational collapses to levels virtually indistinguishable from the cost of extinction.

Also the relative (i.e. unit-of-extinction-) cost of a civilisational collapse is higher the higher one’s annual risk estimates and the longer one’s base timelines are - since they respectively increase the absolute friction penalty and increase the expected duration through which we’ll have to pay it.

Given the wild uncertainty around this value, more research into its factors is urgently needed by the longtermist community.

Counter and counter-counterarguments

The imminent threat of AGI makes all this irrelevant

If you think that

1. AGI will arrive in not much more than a decade; and

2. If it goes wrong it will drive us extinct; and

3. If it goes well it will solve all our other problems

Then this essay is irrelevant. But the less confident one is about each of these claims, the stronger the case for prioritising other catastrophic risks:

- If AGI is significantly more than a decade away and higher annual risk estimates of other catastrophes are plausible, then we might still have a greater expected loss of value from those catastrophes - even if 2. and 3. are true

- If AGI will merely collapse our society, by (say) triggering a nuclear launch or shutting down communications, then we might still have greater expected value by reducing our susceptibility to or improving our recoverability from nuclear war - even if 1. and 3. are true

- If a world with benign AGI looks less like a stable utopia and more like, perhaps, an acceleration of business as usual with many of the same risks, then even if 1. and 2. are true, we need to have a continued plan for dealing with those accelerated risks

Reclaimed technology makes tech progress easy for future civilisations

Luisa Rodriguez and Carl Shulman have informally suggested that future civilisations might have it as easy as us, or even more so, due to all the resources we’ve strewn around on the ground. I think this is a factor but its effect size is small:

- It gives a windfall that might increase the base rate of each subsequent civilisation’s development, but can’t outweigh the exponential decay of fruit-picking. Each civilisation will still tend to have a higher cost-per-resource than the last

- I expect the effect of any such windfall to be substantially smaller than the range across outcomes given by high uncertainties around almost every factor in this picture

- It’s very sensitive to the duration between civilisations. As seen above, if the gap is more than a few centuries or more than one civilisational fall and rise, most of the windfall will be lost. This means longtermists should still care, at the very least, about the forms the nonextinction collapse of civilisation would be likely to take[25]

r_annual and N_friction might be too low for this to matter

This might be true, but we shouldn’t assume it without substantially more justification than I’ve seen anyone give.

Remarks

If my argument is correct, then it affects longtermist prioritisation in various ways. On this model, we will never strictly undergo an existential catastrophe by the classic definition - we always retain our potential for a flourishing future. But across multiple civilisational collapses, and arguably even after a single one, the probability of achieving that future will diminish so much as to be effectively 0, even given arbitrarily many chances to rebuild. This way of thinking means nothing and everything is an existential risk.

All else being equal, technological acceleration is desirable under this view, potentially even at the cost of increased catastrophic risk, for two reasons:

- It reduces the N_years term in our risk equation, and this can outweigh a decrease to the value of our base term

- Even if our current civilisation’s p(success) remains constant (or shrinks!), resolving things one way or the other more quickly means fewer resources are consumed, which reduces the expected N_friction value

(though obviously acceleration that doesn’t increase risk is strongly preferable)
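To make the first of these reasons concrete, here is a small Python sketch of the trade-off between speed and risk. The specific numbers (75 years remaining at 0.3% annual risk, versus 40 years at 0.5%) are hypothetical values picked purely for illustration, and the variable names are my own.

```python
# Sketch: can a shorter time of perils outweigh a higher annual risk?
# The scenario values below are illustrative assumptions, not estimates.


def p_success(r_annual: float, n_years: float) -> float:
    """Probability of surviving n_years at a constant annual collapse risk."""
    return (1.0 - r_annual) ** n_years


baseline = p_success(0.003, 75)     # slower path at lower annual risk
accelerated = p_success(0.005, 40)  # faster path at higher annual risk

# Annual risk at which the 40-year path merely matches the baseline:
# solve (1 - r) ** 40 == baseline for r.
breakeven_risk = 1.0 - baseline ** (1.0 / 40)

print(f"baseline:    {baseline:.1%}")
print(f"accelerated: {accelerated:.1%}")
print(f"break-even annual risk for the 40-year path: {breakeven_risk:.2%}")
```

On these made-up numbers the accelerated path comes out ahead, and would continue to do so for any annual risk below the break-even value. Different inputs can easily reverse the result, which is the sense in which acceleration is only desirable all else being equal.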

While all else is rarely equal, this provides a longtermist argument for global health and development, complementary to Alexandrie and Eden’s tentative call for longtermism-driven bednet donation. Quite aside from the immediate suffering involved, an early death squanders the resources used to create and sustain that person, increasing the resource entropy of our current era - i.e. increasing N_years in the current time of perils.

This model also suggests that there might be substantial value in conserving resources that can be reused across multiple civilisations - i.e. looking for ways to reduce N_friction. One well-evidenced way to do that is to limit atmospheric CO2 in collapse-resilient ways; another option might be to look for better ways to deal with the resources in sewage than pumping them into the ocean.

But by far the strongest and surely least controversial consequence of this model is that longtermists should seek to reduce non-extinction catastrophic risk almost as urgently as they do extinction risks - and arguably more so, if less extreme catastrophes seem more likely.

Acknowledgements

Thanks to Peter Hozák, Jonathan Ng, Abelard Podgorski and Siao Si Looi for discussions and input on the structure and substance of the essay - and in the last case for the beautiful illustration as well. Any remaining catastrophes are my own.

[1]

Greaves and MacAskill's Case for Strong Longtermism considers scenarios with up to future lives (a Milky Way colonised with digital life). Nick Bostrom in Superintelligence gives an upper estimate of lives (colonising the Virgo Supercluster), which Newberry in ‘How many lives does the future hold?’ calls ‘conservative’, confusingly then offering as his own highest estimate. Perhaps even these estimates will look exceptionally conservative if it turns out to be possible to mine black holes for up to years, the potential period for which they might persist.

[2]

This is reflected in other prominent longtermist writings, such as Kelsey Piper's Human extinction would be a uniquely awful tragedy (emphasis mine), 80k's Longtermism: a call to protect future generations ('we think the primary upshot is that it makes it more important to urgently address extinction risks in the present'), and implicitly in Toby Ord's quantification of existential risks in The Precipice:

| Existential catastrophe via | Chance within next 100 years |
| --- | --- |
| Asteroid or comet impact | ~ 1 in 1,000,000 |
| Supervolcanic eruption | ~ 1 in 10,000 |
| Stellar explosion | ~ 1 in 1,000,000,000 |
| Total natural risk | ~ 1 in 10,000 |
| Nuclear war | ~ 1 in 1,000 |
| Climate change | ~ 1 in 1,000 |
| Other environmental damage | ~ 1 in 1,000 |
| “Naturally” arising pandemics | ~ 1 in 10,000 |
| Engineered pandemics | ~ 1 in 30 |
| Unaligned artificial intelligence | ~ 1 in 10 |
| Unforeseen anthropogenic risks | ~ 1 in 30 |
| Other anthropogenic risks | ~ 1 in 50 |
| Total anthropogenic risk | ~ 1 in 6 |
| Total existential risk | ~ 1 in 6 |

Specifically, he rates 'existential' risks from sources like nuclear war or severe climate change as orders of magnitude lower than from unaligned AI and engineered pandemics. Since he presumably doesn't believe (and doesn't indicate in the discussion that he believes) that nuclear catastrophe or severe climate change are orders of magnitude less likely than AI or engineered pandemic catastrophes, the implication seems to be that he views extinction risk as the dominant factor in existential risk.

[3]

Using the estimates cited in footnote 1, surviving until Earth becomes uninhabitable would allow between and lives (Greaves & MacAskill's estimates), so let’s split the log difference and say that would yield times as much human value as there will be in the currently living humans’ lifetimes.

But it would only yield about a th as much value as colonising the solar system (combination of Greaves & MacAskill's estimates), at least a th as much as colonising the accessible universe (Newberry's estimate), or a th as much as flourishing in a Black Hole era.

[4]

Ideally we might have a more fluid definition that permits degrees of success, but that’s unnecessarily complex for current purposes.

[5]

This and the ‘A simple equation ...’ section draw on but heavily simplify my Courting Virgo sequence. If you find yourself shouting ‘that’s far too simplistic!’ at the assumptions I make here, then I agree, and encourage you to check the sequence out - though I probably went too far in the other direction there.

[6]

This discussion intentionally disregards the quality of future life, treating it as a per-person constant. One might expect quality of life to be net worse after a non-extinction catastrophe, which would add further support to most of my conclusions.

[7]

This has the side benefit of making it easier to quantitatively compare s-risks, since they can potentially be equivalent to multiple extinctions (although on sufficiently pessimistic views, extinction might be positive and s-risks worth negative extinctions).

[8]

For a review of the myriad uses of the word ‘recovery’, see ‘Recovery’ from catastrophe is a vague goal, along with that section's associated footnote.

[9]

I certainly haven’t exhaustively searched the field, but to give some sense of the ratio I’ve found to date:

- in Essays on Longtermism alone there are at least two essays relating to recovery (and not to subsequent success); What We Owe the Future dedicates a full chapter to recovery from collapse but doesn’t discuss more advanced states than 'reindustrialising'

- I once put together this reading list on collapse, and to my admittedly fading memory nothing in the ‘read’ section gave p(flourishing | recovery) more than a passing mention. The most explicit mention of the scenario I remember was in Adaptation to and Recovery from Global Catastrophe, which notes the issue but doesn’t discuss its probability or nature.

[10]

This argument is agnostic as to whether there might be additional requirements for stability, such as attainment of societal wisdom or establishment of a benign universal government.

[11]

The actual path from our current time of perils to flourishing is unlikely to be smooth, even in the best case - we might experience multiple ‘small’ catastrophes that delay our getting there by some number of years. But for simplicity here, we’ll consider those small catastrophes to already be factored into our estimate of the expected number of years.

[12]

Lower estimate taken from the highly scientific method of ‘double Elon Musk’s estimate’ of the time to develop a Mars colony. While interplanetary colonies are far from being an interstellar civilisation they would provide substantial defence against at least nuclear war and bioengineered pandemics, and are likely a necessary if not sufficient step in eventually outlasting Earth’s natural lifetime.

If that seems much too optimistic to treat as ‘flourishing’, being more pessimistic will strengthen the urgency of my arguments.

[13]

The 155-year estimate is taken from Metaculus’s current estimate of the chance of having a self-sustaining Mars colony by 2100 as 40%, albeit having sat at around 60% for most of the poll’s life.

[14]

Upper estimate taken from the highly scientific method of ‘approx date given by the most conservative two of five EAs I polled (one offline)’.

[15]

Shulman and Thornley’s essay How Much Should Governments Pay to Prevent Catastrophes? cites estimates of annual probability of nuclear war between 0.02% and 0.38%.

The 7.6% chance of a pathogenic catastrophe they cite works out to a 0.1% risk per year, comparable to the nuclear estimate - although the cited outcome of causing ‘the human population to drop by at least 10%’ might be too modest to collapse civilisation, so perhaps it should be lower.

The closest thing they cite to an aggregate figure for AI catastrophe amounts to 0.07% per year, again close to 0.1% - but those numbers relate to ‘existential risk’ which is hard to disambiguate into ‘extinction’ and ‘non-extinction’. Ord’s and Carlsmith’s estimates are approximately an order of magnitude lower, and possibly lower still if we interpret ‘existential catastrophe’ as potentially including non-extinction civilisational collapse.

So if we sum the somewhat optimistic and pessimistic takes of these estimates we get roughly 0.1% and 0.3% annual risk respectively.

[16]

On an empirical view of history, one might conclude that Shulman and Thornley’s cited estimates (in footnote 15) are substantially too optimistic. The Wikipedia list of nuclear close calls is a frankly terrifying collection of clusterfucks - such as President Nixon drunkenly ordering a nuclear strike against North Korea and having to be overruled by his Secretary of State. Even if we dismiss the lesser-known examples on that list as too small-scale or having too many safeguards, we know that:

- During the Cuban Missile Crisis, Kennedy estimated the risk of nuclear war as ‘between 1 in 3 and even’

- Also during the Cuban Missile Crisis, but unbeknownst at the time to Kennedy, Vasily Arkhipov was the only one of his submarine’s three commanding officers to oppose a nuclear launch - fortunately his submarine’s command structure was unusual in requiring launch approval from all three of them

- Stanislav Petrov refused to report an apparent nuclear strike from the US. He might have been unique in his outfit in being disposed not to do so: ‘Petrov said at the time he was never sure that the alarm was erroneous. He felt that his civilian training helped him make the right decision. He said that his colleagues were all professional soldiers with purely military training and, following instructions, would have reported a missile launch if they had been on his shift.’ (Wikipedia)

Let’s naively assume that a) Kennedy was correct, b) the ratio of refusal on Arkhipov’s submarine was representative, such that each officer on the submarine had a 1/3 chance of refusing, and c) someone in Petrov’s position had a 50-50 chance of sending the alert, and his superiors had a 50-50 chance of then counterlaunching. As seen in the above bullets, each of these assumptions seems optimistic in its own way. Yet if we multiply them out we get $\frac{2}{3} \times \left(1 - \left(\frac{2}{3}\right)^{3}\right) \times \left(1 - \frac{1}{2} \times \frac{1}{2}\right) \approx 0.35$, or only a 35% chance of not having had a nuclear war. If we distribute that over the last 80 years, that works out as a 1.3% chance of war per year. I adjust this figure up to what seems to me a still-conservative-for-upper-estimate 1.6% to account for a) the uncertainties in the above bullets, b) all the other near misses, c) the fact that nuclear risks are generally thought to be increasing from when the predictions Shulman and Thornley cite were made, and d) all the other possible catastrophes.
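The arithmetic behind the 35% and 1.3% figures can be checked with a short Python sketch. It assumes the lower end of Kennedy's range (a 1 in 3 chance of war), treats the three near misses as independent, and spreads the result evenly over 80 years. The variable names are illustrative.

```python
# Sketch of the near-miss arithmetic, under the naive assumptions stated above.
# Assumption: Kennedy's lower bound (1 in 3) is used for the Cuban Missile Crisis.

p_survive_cuba = 1 - 1 / 3

# Launch needed all three officers to approve; each refuses with probability 1/3,
# so a launch is averted unless all three approve.
p_survive_submarine = 1 - (1 - 1 / 3) ** 3

# War needs both the alert being sent (50%) and a counterlaunch (50%).
p_survive_petrov = 1 - 0.5 * 0.5

p_no_war = p_survive_cuba * p_survive_submarine * p_survive_petrov

# Constant annual risk that would give the same survival odds over 80 years.
years = 80
annual_risk = 1 - p_no_war ** (1 / years)

print(f"p(no nuclear war so far): {p_no_war:.0%}")
print(f"implied annual risk:      {annual_risk:.1%}")
```

Using the upper end of Kennedy's range (an even chance of war) instead would lower the survival figure and raise the implied annual risk.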

For a more detailed take on annual nuclear war probabilities, which estimates between 1% and 2% per year, see Barrett, Baum and Hostetler's Analyzing and Reducing the Risks of Inadvertent Nuclear War Between the United States and Russia.

[17]

The phenomenon is often remarked on as a problem for our current society, as well as extensively discussed in the collapse literature I mentioned above. But following the general pattern of the collapse literature, it is discussed almost entirely in terms of its significance to recovery, rather than its importance to flourishing thereafter.

For example, in What We Owe the Future’s extended footnotes, MacAskill writes ‘My credence on recovery from such a catastrophe, with current natural resources, is 95 percent or more; if we’ve used up the easily accessible fossil fuels, that credence drops to below 90 percent,’ and then moves to discussing trajectory change.

Again, I surmise that this rests on a more specific form of the above assumption: that in the current time of perils we have various other forms of energy generation available to us. On this view, even though those other forms tend to pay off more slowly, by the time we regain sufficient technology to build these, we’ve basically done the hard work, and therefore from there it’s just a question of time until we can produce any given amount of any key resource. I'm not going to disagree with the latter claim, but cf. rest of essay...

[18]

At least not at scale without far more advanced technology than ours.

[19]

It’s surprisingly hard to find practical information on long-term corrosion rates across metals under ‘natural’ conditions. This table lists various corrosion rates of various alloys under many industrial conditions, but it’s hard to say how other metals would compare to these (although it seems zinc-coated steel would corrode 1-3 orders of magnitude faster), or to say which of these conditions best approximates a) the median state of the median refined metal in the world today, or b) the median refined metal in the decades or possibly centuries following civilisational collapse.

The 500 year figure comes from The World Without Us by Alan Weisman: ‘After 500 years, what is left depends on where in the world you lived. If the climate was temperate, a forest stands in place of a suburb; minus a few hills, it’s begun to resemble what it was before developers, or the farmers they expropriated, first saw it. Amid the trees, half-concealed by a spreading understory, lie aluminum dishwasher parts and stainless steel cookware, their plastic handles splitting but still solid.’

[20]

As with corrosion it’s hard to get concrete data on real entropy rates, but a 2015 report on recycling estimates that ‘about 8% of the material entering the furnace is lost to the steel production process, ending up either as recaptured contaminants, air emissions, or within slag’ (without specifying the proportions of each).

Unlike burn off and evaporation, metal in slag can be reclaimed, but this tends to be more energy-intensive than the original recycling process, since by definition the concentration of metal in slag is lower than the original feedstock.

A 2004 report on the metal lifecycle estimated that between 9 and 50% is ‘lost’ for different alloys, though notes that most of this is probably as reusable slag.

To get my ‘within a couple of centuries’ remark, I imagine that refined metal is the sole or primary source of metals for an industrialising civilisation, and assume 5% is lost per metal generation (i.e. per recycling pass), so that about half of it would be used up within 13 generations. If a large proportion of that civilisation's original metal stock comes from recycling, I imagine they would recycle their own technology substantially more often than we do - perhaps the average refined metal atom in such a world would be recycled once every decade. Then within about 130 years roughly half of the original feedstock would be gone, and most of it within a couple of centuries.
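As a rough check on these figures, the following Python sketch computes the ‘half-life’ of a metal stock under the stated assumptions of a 5% loss per recycling pass and one pass per decade. Both rates are the illustrative guesses from the paragraph above rather than measured values, and the names are my own.

```python
import math

# Sketch of metal attrition under repeated recycling.
# Both rates are the paragraph's illustrative guesses, not measured data.
LOSS_PER_CYCLE = 0.05  # fraction of feedstock lost on each recycling pass
YEARS_PER_CYCLE = 10   # assumed average interval between passes


def fraction_remaining(cycles: float) -> float:
    """Share of the original feedstock still in circulation after some cycles."""
    return (1 - LOSS_PER_CYCLE) ** cycles


# Number of cycles after which exactly half the original stock is left.
half_life_cycles = math.log(0.5) / math.log(1 - LOSS_PER_CYCLE)
half_life_years = half_life_cycles * YEARS_PER_CYCLE

remaining_after_13 = fraction_remaining(13)
remaining_after_200_years = fraction_remaining(200 / YEARS_PER_CYCLE)

print(f"half-life: {half_life_cycles:.1f} cycles, about {half_life_years:.0f} years")
print(f"left after 13 cycles:  {remaining_after_13:.0%}")
print(f"left after 200 years:  {remaining_after_200_years:.0%}")
```

Because the loss compounds, the result is sensitive to both inputs: a higher loss rate or a shorter recycling interval shortens the half-life considerably.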

As ever, these numbers are meant to be illustrative of the principle. Between successive civilisations, exact attrition rates are less important to resource entropy than whether the severity of civilisational collapse was such that we fell back a few decades or a few centuries (or more). Looking forward more than one civilisation, we can probably assume that virtually all of the scrap from our current civilisation has become inaccessible.

For a formal expression of the entropic nature of material resource reuse, see Gutowski and Dahmus, Mixing Entropy and Product Recycling.

- ^

This means that N_friction isn’t really independent of N_years and r_annual, since the longer we expect each civilisation to last before collapsing, the more time it will spend fruitpicking. But since the relationship is very unclear, we can treat it as an independent variable for estimation purposes.

- ^

A world where either the EROI estimates are too pessimistic, or subsequent civilisations can perhaps either rely primarily on nuclear power, or streamline their economy to spend substantially less energy on nonproductive pursuits, or get much better EROI when their economy is optimised around renewables from the start, or get a substantial boost from wreckage lying around from the previous civilisation, or where mineral resources are extremely fungible.

More abstractly, one could think of this as a world where future civilisations are primarily limited by one main factor, which isn't too severe, and in the time it takes to deal with that factor, lesser problems have been solved.

- ^

A world with no major efficiency gains over the EROI estimates, but perhaps a handful of fossil resources remain accessible and nuclear makes a respectable contribution, or civilisation manages to reindustrialise in highly insolated areas (those with substantial input from sunlight).

Alternatively, one could think of this as a world where future civilisations are limited by a handful of individually surmountable factors that multiplicatively cause substantial friction. E.g. the difficulties of generating industrial levels of energy without fossil fuels are exacerbated by the difficulties of feeding enough of a population with depleted fertilisers.

- ^

A world where the majority of energy has to come from solar with no efficiency gains, or where climate change has substantially reduced the liveable areas (perhaps to less insolated areas), or where the friction from scarcity of various key minerals further multiplies the friction from increased cost of energy, or where increased burden of disease or environmental damage substantially degrades human ability.

Alternatively, one could think of this as a world where future civilisations are multiplicatively limited by a handful of substantive or many moderate sources of friction. E.g. the difficulties in footnote 23 are further multiplied by meaningfully depleted uranium reserves, by many endemic infectious diseases, further by a depleted set of practical crops in a hotter and less biodiverse world ... etc

A vision of a still-worse world comes from Toby Ord in The Precipice, arguing against even runaway climate change as an existential risk:

With sufficient warming there would be parts of the world whose temperature and humidity combine to exceed the level where humans could survive without air conditioning. [However] even with an extreme 20°C of warming there would be many coastal areas (and some elevated regions) that would have no days above the temperature/humidity threshold.

I’m unsure how optimistic Ord is about flourishing after such dire straits, but personally I find it hard to credit humanity with a realistic chance of proceeding from anywhere near this state to interstellar proliferation.

- ^

I haven’t seen any estimates, even under optimal assumptions, of how cheap to gather this windfall might be. One of the advantages of mining is that you’re extracting all the ore from a small number of sources, so you can have a dedicated transport infrastructure. Having to roam across hundreds of miles to reclaim scrap might substantially reduce its utility.

Some thoughts:

Thanks for the extensive reply! Thoughts in order:

I think this is fair, though if we're not fixing that issue then it seems problematic for any pro-longtermism view, since it implies the ideal outcome is probably destroying the biosphere. Fwiw I also find it hard to imagine humans populating the universe with anything resembling 'wild animals', given the level of control we'd have in such scenarios, and our incentives to exert it. That's not to say we couldn't wind up with something much worse though (planetwide factory farms, or some digital fear-driven economy adjacent to Hanson's Age of Em).

It's whatever cost in expected future value extinction today would incur. The cost can be negative if wild-animal-suffering proliferates, and some trajectory changes could have a negative cost of more than 1 UoE if they make the potential future more than twice as good, and vice versa (a positive cost of more than 1 UoE if they make the future expectation negative from positive).

But in most cases I think its use is to describe non-extinction catastrophes as having a cost C such that 0 < C < 1 UoE.

Good point. I might write a v2 of this essay at some stage, and I'll try and think of a way to fix that if so.

I'm not sure I follow your confusion here, unless it's a restatement of what you wrote in the previous bullet. The latter statement, if I understand it accurately, is closer to my primary thesis. The first statement could be true if

a) Recovery is hard; or

b) Developing technology beyond 'recovery' is hard

I don't have a strong view on a), except that it worries me that so many people who've looked into it think it could be very hard, yet x-riskers still seem to write it off as trivial on long timelines without much argument.

b) is roughly a subset of my thesis, though one could believe the main source of friction increase would come when society runs out of technological information from previous civilisations.

I'm not sure if I'm clearing anything up here...

So would I, though modelling it sensibly is extremely hard. My previous sequence's model was too simple to capture this question, despite being probably too complicated for what most people would consider practical use. To answer comparative value loss, you need to look at at least:

My old model didn't have much to say on any beyond the first three of these considerations.

Though if we return to the much simpler model and handwave a bit, if we suppose that annual non-extinction catastrophic risk is between 1 and 2%, then 10-20 year risk is between 20 and 35%. If we also suppose that chances of flourishing after collapse drop by 10% or more, that puts it in the realm of 'substantially bigger threat than the more conservative AI x-riskers view AI as, substantially smaller than the most pessimistic views of AI x-risk'.

It could be somewhat more important if chances of flourishing after collapse drop by substantially more (as I think they do), and much more important if we could achieve reductions in catastrophic risk that persist beyond the 10-20-year period (e.g. by moving towards stable global governance or at least substantially reducing nuclear arsenals).
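For anyone wanting to check the handwaving above, here is a minimal sketch of the cumulative-risk arithmetic. It assumes a constant annual risk that is independent from year to year, which is a simplification; the function name is hypothetical.

```python
def cumulative_risk(annual_risk: float, years: int) -> float:
    """Probability of at least one catastrophe within `years`,
    assuming a constant and independent annual risk."""
    return 1.0 - (1.0 - annual_risk) ** years


# Illustrative inputs: the 1-2% annual risk and 10-20 year horizons discussed above.
for annual_risk in (0.01, 0.02):
    for years in (10, 20):
        risk = cumulative_risk(annual_risk, years)
        print(f"annual risk {annual_risk:.0%} over {years} years: {risk:.1%}")
```

Under these assumptions the results run from roughly 10% (1% a year over 10 years) to roughly 33% (2% a year over 20 years), so the 20-35% range corresponds most closely to the 20-year horizon.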

Very nice, thank you for writing.

It seems plausible that p(annual collapse risk) is in part a function of the N friction as well? I think you may cover some of this here but can't really remember.

e.g. a society with fewer non-renewables will have to be more sustainable to survive/grow -> resulting population is somehow more value aligned -> reduced annual collapse risk.

or on the other side

nukes still exist and we can still launch them -> we have higher N friction in pre nuclear age in the next society -> increased annual collapse risk.

(I have a bad habit of just adding slop onto models and think this isn't at all something that need be in the scope of the original post, just a curiosity).

Thanks :)

I don't think I covered any specific relationship between factors in that essay (except those that were formally modelled in), where I was mainly trying to lay out a framework that would even allow you to ask a question. This essay is the first time I've spent meaningful effort on trying to answer it.

I think it's probably ok to treat the factors as a priori independent, since ultimately you have to run with your own priors. And for the sake of informing prioritisation decisions, you can decide case by case how much you imagine your counterfactual action changing each factor.

How on earth is Derek Parfit the godfather of longtermism? If I recall correctly, this is the person who thinks future people are somehow not actual people, thereby applying the term "person affecting views" to exactly the opposite of the set of views a longtermist would think that label applies to.

I remember him discussing person-affecting views in Reasons and Persons, but IIRC (though it's been a very long time since I read it) he doesn't particularly advocate for them. I use the phrase mainly because of the quoted passage, which appears (again IIRC) in both The Precipice and What We Owe the Future, as well as possibly some of Bostrom's earlier writing.

I think you could equally give Bostrom the title though, for writing to my knowledge the first whole paper on the subject.

Ah. I mistakenly thought that Parfit coined the term "person affecting view", which is such an obviously biased term I thought he must have been against longtermism, but I can't actually find confirmation of that so maybe I'm just wrong about the origin of the term. I would be curious if anyone knows who did coin it.

As far as I know, Parfit did coin it; he just didn't mean "person-affecting views" to be "obviously biased" in favor of person-affecting views, since he rejected person-affecting views. The idea behind the term is not that "future people are somehow not actual people": a proponent of a person-affecting view will generally agree that I have obligations to improve the welfare of someone who will exist in the future no matter what. (Maybe I can do something now to benefit, in the future, some kid who will be conceived on the other side of the world five minutes from now.) The idea is rather that people who aren't actual (at any point, ever--as opposed to merely possible) are not actual people. In that event, the idea is that no person is negatively affected if they aren't created, since there is no person to negatively affect.

Let's clarify this a bit then. Suppose there is a massive nuclear exchange tomorrow, which leads in short order to the extinction of humanity. I take it both proponents and opponents of person affecting views will agree that that is bad for the people who are alive just before the nuclear detonations, and die either from those detonations or shortly after because of them. Would that also be bad for a person who counterfactually would have been conceived the day after tomorrow, or in a thousand years, had there not been a nuclear exchange? I think the obviously correct answer is yes, and I think the longtermist has to answer yes, because that future person who exists in some timelines and not others is an actual person with actual interests that any ethical person must account for. My understanding is that person-affecting views say no, because they have mislabeled that future person as not an actual person. Am I misunderstanding what is meant by person-affecting views? Because if I have understood the term correctly, I have to stand by the position that it is an obviously biased term.

Put another way, it sounds like the main point of a person-affecting view is to deny that preventing a person from existing causes them harm (or maybe benefits them if their life would not have been worth living). Such a view does this by labeling such a person as somehow not a person. This is obviously wrong and biased.

The person who would have been born if my parents had had sex five seconds later than they did when they conceived me--call him "Justin"--is not an actual person. He is a merely possible person. He does not actually exist any more than Sherlock Holmes actually exists. Of course, if my parents had had sex five seconds later, then he would be an actual person, and I would be the merely possible person. But they did not, so he is not an actual person. And accordingly my parents did not negatively affect any actual person by failing to conceive Justin, because Justin is not actual.

Similarly, if a nuclear war kills everyone, then there are no actual future people. They are merely possible people--just like all of the infinitely many possible people who would have existed in all the possible futures that wouldn't have occurred anyway would have been merely possible people regardless of whether the war happened. None of them actually exist, just like Sherlock Holmes doesn't exist. Of course, if the nuclear war had not occurred, then they would be born and would be actual people. But it did happen, so they are not actual people. And accordingly the nuclear war does not negatively affect any actual future person, because the future people (given that, actually, there are no future people) are not actual.

I think what you want to say is that you can negatively affect people who, actually, are merely possible by preventing them from coming into existence, and for that reason you think "person-affecting view" is an inaccurate term. That's a view you can take. But the crux is not whether future people are actual people (everyone agrees they are, if they actually exist at some point) and anyway this is not really the way to figure out what Parfit thought about longtermism.