A survey of 2,778 AI researchers conducted in October 2023, seven months after the release of GPT-4 in March 2023, used two differently worded definitions of artificial general intelligence (AGI). The first definition, High-Level Machine Intelligence, said the AI system would be able to do any “task” a human can do. The second definition, Full Automation of Labour, said it could do any “occupation”. (Logically, the former definition would seem to imply the latter, but this is apparently not how the survey respondents interpreted it.)

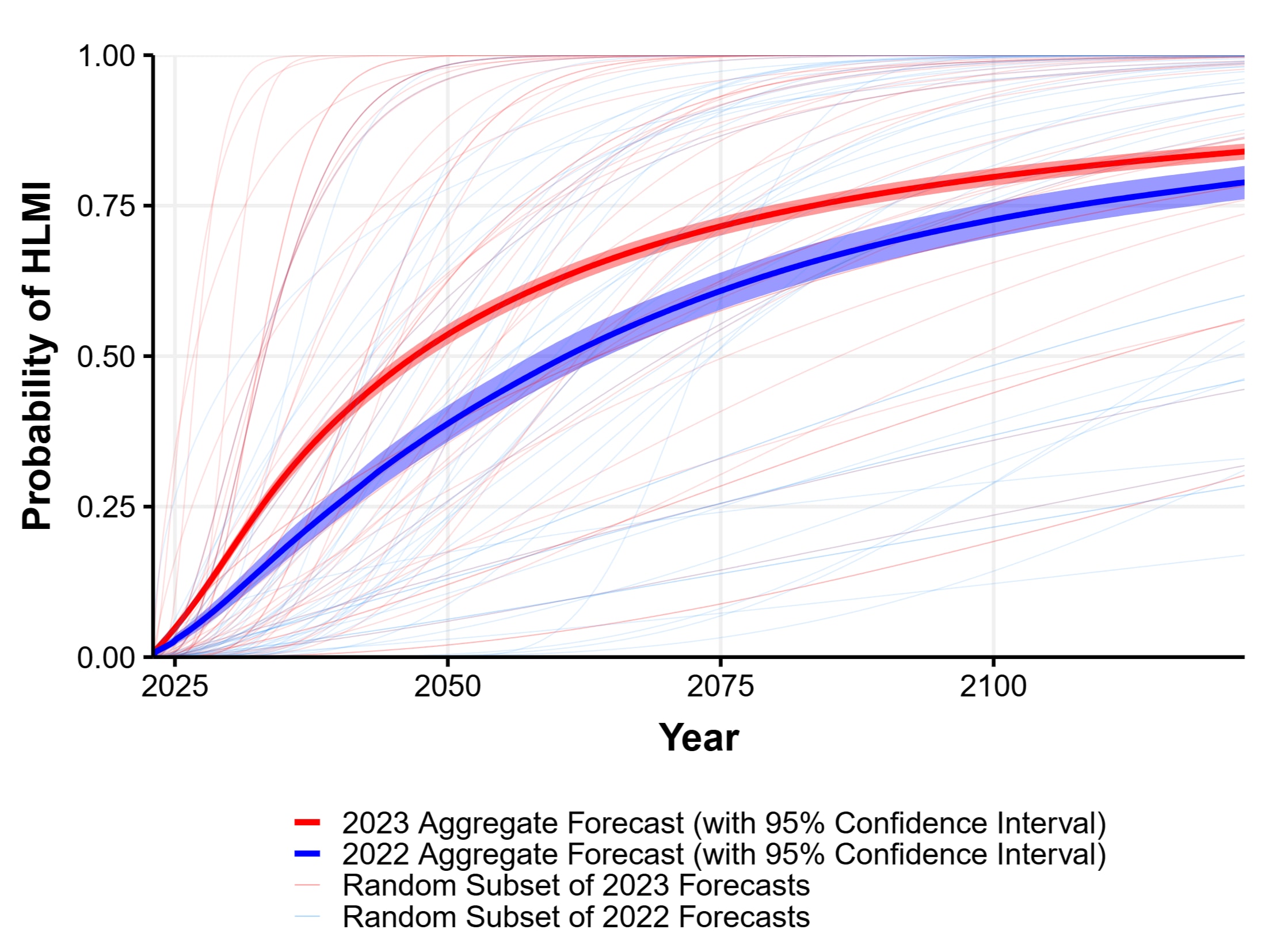

The forecast for High-Level Machine Intelligence was as follows:

- 10% probability by 2027

- 50% probability by 2047

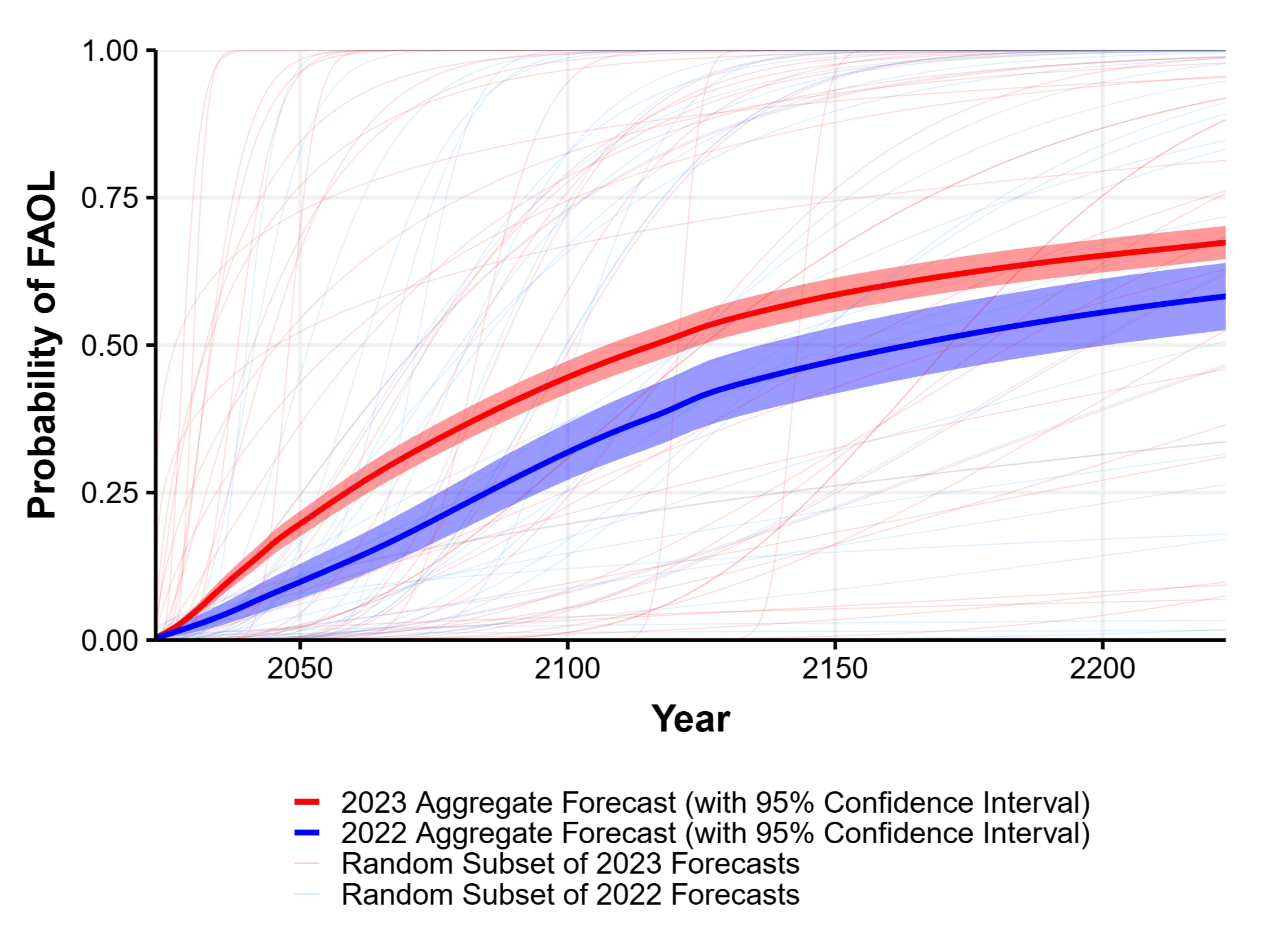

For Full Automation of Labour, the forecast was:

- 10% probability by 2037

- 50% probability by 2116

Here are those forecasts in graphical form:

Another survey result of interest: 76% of AI experts surveyed in 2025 thought it was unlikely or very unlikely that current AI methods, such as large language models (LLMs), could be scaled up to achieve AGI.

Also of interest: there have been at least two surveys of superforecasters about AGI, but, unfortunately, both were conducted in 2022 before the launch of ChatGPT in November 2022. These surveys used different definitions of AGI. Check the sources for details.

The Good Judgment superforecasters gave the following forecast:

- 12% probability of AGI by 2043

- 40% probability of AGI by 2070

- 60% probability of AGI by 2100

The XPT superforecasters forecast:

- 1% probability of AGI by 2030

- 21% probability of AGI by 2050

- 50% probability of AGI by 2081[1]

- 75% probability of AGI by 2100

My opinion: forecasts of AGI are not rigorous and can’t actually tell us much about when AGI will be invented. We should be extremely skeptical of all these numbers because they’re all just guesses.

That said, if you are basing your view of when AGI will be invented largely on other people’s guesses, you should get a clear picture of what those guesses are. If you rely only on the guesses you happen to hear, your sample will be biased. For example, people are more likely to repeat guesses that AGI will be invented shockingly soon, because that’s much more interesting than a guess that it will be invented sometime in the 2100s. You might also suffer from filter bubble or echo chamber bias, hearing a sample of guesses shaped by your social networks rather than a representative sample of AI experts or expert forecasters.

I have never heard a good, principled argument for why people in effective altruism should believe guesses that put AGI much sooner than the guesses above from the AI researchers and the superforecasters. You should worry about selectively accepting some evidence and rejecting other evidence due to confirmation bias.

My personal guess, which is as unrigorous as anyone else’s, is that the probability of AGI before January 1, 2033 is significantly less than 0.1%. One reason I think this is that developing AGI will require progress in fundamental science that currently isn’t getting much funding or attention, and science usually takes a long time to move from a pre-paradigmatic stage to a stage where engineers have mastered building technology using the new scientific paradigm. As far as I know, that transition has never in history taken only 7 years.

The overall purpose of this post is to expose people in effective altruism to viewpoints different from those they might have heard so far, and to encourage them to worry about confirmation bias and about filter bubble/echo chamber bias. I strongly believe that, in time, many people in EA will come to regret how the movement’s focus has shifted toward AGI and will come to see it as a mistake. People will wonder why they ever trusted a minority of experts over the majority, or why they trusted non-expert bloggers, tweeters, and forum posters over people with expertise in AI or forecasting. I’m not saying that the expert majority forecasts are right; I’m saying that all AGI forecasts are completely unrigorous and worthy of extreme skepticism. But if you already put your trust in forecasting, then exposing yourself to the actual diversity of opinion might at least lead you to question things you accepted too readily.

A few other relevant ideas to consider:

- LLM scaling may be running out of steam, both in terms of compute and data

- A recent study found that AI coding assistants make human coders 19% less productive

- There is growing concern about an AI financial bubble because LLMs are not turning out to be practically useful for as many things as was hoped; e.g., the vast majority of businesses report no financial benefit from using LLMs, and many companies have abandoned their efforts to use them

- Despite many years of effort from top AI talent and many billions of dollars of investment, self-driving cars remain at roughly the same level of deployment as 5 years ago or even 10 years ago, nowhere near substituting for human drivers on a large scale[2]

- ^

The other figures come from the Forecasting Research Institute's post on the EA Forum, but the 50% by 2081 figure comes from this Time article:

Henshall, Will. “When Might AI Outsmart Us? It Depends Who You Ask.” TIME, 19 Jan. 2024, time.com/6556168/when-ai-outsmart-humans/.

The article says: “Respondents were asked to give probabilities for 2030, 2050, and 2100. Probability distributions were fitted based on these responses.”

- ^

Andrej Karpathy, an AI researcher formerly at OpenAI who led Tesla’s autonomous driving AI from 2017 to 2022, recently made the following remarks on a podcast:

…self-driving cars are nowhere near done still. The deployments are pretty minimal. Even Waymo and so on has very few cars. … Also, when you look at these cars and there’s no one driving, I actually think it’s a little bit deceiving because there are very elaborate teleoperation centers of people kind of in a loop with these cars. I don’t have the full extent of it, but there’s more human-in-the-loop than you might expect. There are people somewhere out there beaming in from the sky. I don’t know if they’re fully in the loop with the driving. Some of the time they are, but they’re certainly involved and there are people. In some sense, we haven’t actually removed the person, we’ve moved them to somewhere where you can’t see them.

It's interesting that people may still be kind of in the loop through teleoperation centers, but I doubt it would be one-to-one, and the fact that Waymo is 5x safer (actually much safer than that, because almost all the serious accidents were caused by humans, not the Waymos) is mostly due to the tech.

The first part is false, e.g. "Driverless taxi usage in California has grown eight fold in just one year". The second part is true, but the fact that self-driving cars are safer and cheaper (once they pay off the R&D) means that they most likely will soon substitute for human drivers on a large scale absent banning.

We should be cautious about talking about the safety of self-driving cars and avoid conflating safety with overall performance or competence, especially considering that humans are in the loop. Self-driving cars are geofenced to the safest areas (and the human drivers who make up the collision statistics they are compared against are not). They are not pure AI systems but an AI-human hybrid or "centaur".

Moreover, my understanding is that the software engineers and AI engineers working on these systems are doing a huge amount of special casing all the time. The ratio of Waymo engineers to Waymo self-driving cars is roughly 1:1, or maybe 1:2. It's not clear they could actually scale up the number of cars 10x or 100x using their current methods without also scaling up the number of engineers commensurately. Every time there's so much as the branches of a bush protruding into a street, an engineer has to do a special task, or else someone gets sent out to trim the branches. This is a sort of convoluted, semi-Mechanical Turk way of doing autonomous driving.

The growth in the size of the deployment is from a very small baseline. If a company starts with 10 self-driving cars and then scales up to 1,000 self-driving cars, that's an increase of 100x, but it's from such a small baseline that you need to put "100x" in context.

As of August 2025, Waymo said it had 2,000 vehicles in its commercial fleet in the United States (so, presumably a bit more if you include its test fleet). In 2020, Waymo had around 600 vehicles in its fleet (possibly combining its commercial and test fleet, I'm not sure). Compare that to the 280 million registered vehicles in the United States. In 5 years, Waymo has gone from 0.0002% of the overall U.S. vehicle fleet to 0.0007%, which is why I say the level of deployment is roughly the same. The growth is from a very small baseline and the overall deployment remains very small.
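The fleet-share arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch: the fleet sizes and the 280 million registered-vehicle figure are the ones quoted in the paragraph, and the function name is illustrative. The last lines show how far a 2,000-car fleet is from 1% of the U.S. fleet, one of the thresholds for "large scale" discussed next.

```python
def fleet_share_percent(company_vehicles: int, total_vehicles: int) -> float:
    """Return a company's fleet as a percentage of all registered vehicles."""
    return 100 * company_vehicles / total_vehicles


US_REGISTERED_VEHICLES = 280_000_000  # figure quoted in the post

for year, fleet in [(2020, 600), (2025, 2_000)]:
    share = fleet_share_percent(fleet, US_REGISTERED_VEHICLES)
    print(f"{year}: {fleet:,} vehicles = {share:.4f}% of the U.S. fleet")

# Vehicles needed for 1% of the U.S. fleet, and the multiple of a 2,000-car fleet.
one_percent = US_REGISTERED_VEHICLES // 100
print(f"1% of the fleet = {one_percent:,} vehicles "
      f"({one_percent // 2_000:,}x a 2,000-car fleet)")
```

Even a 1% share would require roughly a 1,400-fold increase over a 2,000-car fleet.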

I think there is essentially no chance that self-driving cars will substitute for human drivers on a large scale within the next 5 years. By a large scale, I mean scaling up to the size of something like 1% or 10% of the overall U.S. vehicle fleet. Waymo is basically still a science project living off of some very patient capital. I think people should look at the abrupt demise of Cruise Automation as an important lesson. There is no reason it should have shut down if development of the technology was going as well as it said. It would also be hard to believe that it was so far behind Waymo that its failure makes sense but Waymo's would not. (It's not just Cruise, but several other companies like Uber ATG and Pronto that have shut down or pivoted to an easier problem.)

These companies have aggressive PR and marketing, are always courting investors because they burn a lot of capital, and typically avoid transparency or openness. We may not know that Waymo's engineers, managers, or investors are losing faith in the technology until it abruptly shuts down. Or it may just continue for many, many years as a science project, not getting meaningfully closer to a large-scale commercial deployment.

Keep in mind the Google self-driving car project started in 2009 and the "Waymo" name was adopted in 2016. It's been at this a long time. Billions have been spent on R&D. This has been a slog and it will most likely continue to be a slog, possibly ending in a wind-down when the capital gets less patient.

I wouldn't say that the cities Waymo is in are the safest areas. But a few years back I was critical of the claim that Teslas were ~4x safer, because they were only driving autonomously on highways (which are much safer). What's the best apples-to-apples comparison you have seen?

Metaculus says Dec 31 for half of new cars sold being autonomous, which would pretty much guarantee 1% of the US fleet in 5 years, so you might find some people there to bet with...

Thanks for the reply.

Waymo’s self-driving cars are geofenced down to the street level, e.g., they can drive on this street but not on that street right next to it. Waymo carves up cities into the places where it’s easiest for its cars to drive and where the risk is lowest if they make a mistake.

Tesla is another good example to look at besides Waymo because there is no geofencing and no teleoperation or remote assistance. Anyone can buy a Tesla and have access to the latest, greatest, most cutting-edge version of self-driving car technology. It isn’t very good. There is a cottage industry on YouTube of people posting long vlogs of their semi-autonomous drives in their Teslas. The AI makes constant mistakes and it’s not anywhere close to being able to handle driving on its own. If you just let the cars loose with no human oversight, they would all get into wrecks within a day. Or else get stuck, paralyzed with indecision in the middle of the road somewhere.

I wish I had the kind of money to just throw at a bunch of technological forecasting bets. But, if I wanted to do that, it would probably be more efficient to do it through the stock market. It’s complex to disentangle, e.g., how much of Tesla’s valuation is based on robotaxi optimism, but placing bets also has its own complexities, such as whether it’s even legal and whether you’ll be able to compel someone to pay up if you win.

I made a bet in the past about autonomous vehicles that I regret. It won’t resolve for many years, but now I think I was way overoptimistic about how soon full autonomy would be deployed. Thankfully all the money is just going to charity either way. (And good charities on both sides of the bet.)

I don’t really believe anything Metaculus says about anything. It’s effectively just an opinion poll. But I’m not sure I would believe it much more if it were a prediction market. The stock market is sort of an indirect prediction market, and I’m not sure I really trust its long-term technological forecasting, either. The signal is much clearer when it’s about near-term financial performance, e.g., if the valuations of AI companies plummeted, that would be a sign of AI’s current capabilities in commercial applications.

You see weird examples like Nikola, the company that was trying to make hydrogen trucks, peaking at a market cap of $27 billion. Now it’s worth under $4 million. The former CEO was sentenced to four years in prison for fraud (but was pardoned by Donald Trump after donating $1.8 million to his campaign, which looks like blatant corruption). I do mostly trust the stock market to shake these things out in the long run, but there can be periods when things are very distorted. I think that’s what’s happening with LLMs right now. The valuations are bound to come way down sooner or later.

Interesting. But if the vast majority of the serious accidents that did occur were the fault of other drivers, wouldn't that be strong evidence that the Waymos were safer (apples to apples at least for those streets)?

Good question. To know for sure, you would have to collect data on the rate of at-fault collisions for human drivers in specifically just those geofenced areas, which would be onerous. I don't particularly blame Waymo for not collecting this data, but it has to be said this is a methodological problem.

There's also a long-standing concern that Waymos may cause collisions for which, legally, they aren't at fault. If Waymos are prone to suddenly braking for harmless obstacles like plastic bags, or simply because of mistakes made by the perception system, then they could get rear-ended a lot, but rear-ending is always the fault of the car in the rear as a matter of law. Legally, this would count as completely safe, but common sense tells us that if a technology is statistically causing a large number of collisions, that's actually a safety concern.

You did mention serious accidents specifically. I should look at the safety paper that was published by Waymo this year to see how they address this. This is the kind of disclosure of internal safety data that self-driving car companies have historically been extremely reluctant to make and that I would have killed to get my hands on back when I was writing a lot about self-driving cars. But the general difficulty can be seen in this report published by RAND Corporation in 2016. More serious accidents (those causing injuries or deaths) are much less common than minor collisions so, statistically, you need a lot more data before you can be confident about their actual frequency. The Waymo safety paper says it's looking at data from 57 million miles. According to the RAND report, that wouldn't be quite enough data to establish a lower collision rate than human drivers or even half as much as you'd need to establish a lower injury rate, but I guess maybe it could if the frequency were much, much less in the observed data. (However, you'd still have the bias introduced by comparing data from driving in geofenced areas to data collected about humans driving everywhere.)
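To see why rarer events need so much more mileage, here is a minimal sketch of a standard zero-failure bound, assuming events follow a Poisson process. It is in the spirit of the RAND analysis but does not reproduce its method, and the event rates are illustrative round numbers rather than figures from the post or the report. If a fleet drives n miles with no events, you can claim the true rate is below r with confidence C only once exp(-r·n) ≤ 1 - C, i.e. n ≥ -ln(1 - C)/r.

```python
import math


def miles_needed_zero_events(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Miles that must be driven with zero events before one can claim, at the
    given confidence, that the true event rate is below `rate_per_mile`.

    Assumes events arrive as a Poisson process: P(0 events in n miles) = exp(-r * n).
    """
    return -math.log(1 - confidence) / rate_per_mile


# Illustrative rates (events per mile), NOT taken from the post or the RAND report.
for label, rate in [("1 per 1 million miles", 1e-6), ("1 per 100 million miles", 1e-8)]:
    print(f"{label}: {miles_needed_zero_events(rate):,.0f} miles with zero events")
```

The required mileage scales inversely with the event rate, and this is the best case: any observed events, or a goal of showing a rate lower than the human baseline rather than merely bounding it, push the requirement higher. That is the general reason a few tens of millions of miles is thin evidence about injury and fatality rates.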

I am most curious of all to see what independent experts make of Waymo's paper, since of course Waymo will try to make itself look as good as possible and will omit anything too inconvenient.

What I want to emphasize more than anything is the distinction between safety and competence or overall performance, particularly in the context of forecasting widespread deployment of robotaxis or using autonomous driving as a point of reference for AI generally or for AGI. A car that just stays parked all the time is 100% safe. With current technology, you could most likely get an autonomous car to drive around a closed track indefinitely and be almost 100% safe. The same goes for a car just circling the block in one of those fake cities in California used for testing. Safety is not the same as competence or overall performance.

Intuitively, a self-driving car being safer than a human driver evokes the idea that the car can drive anywhere and do anything, all on its own, and gets into collisions less often. People point to safety claims from Waymo and say self-driving is a solved problem: we already have a superhuman AI driver. But all Waymo is really claiming is that the rate at which its vehicles get into collisions and cause injuries is lower than the average rate for human drivers. I don't think Waymo would make the stronger claim, because they know it's not true and they would probably worry about being sued by investors or punished by the SEC. (Well, maybe not under this administration. Is there still an SEC?)

If safety were the same as overall performance or competence, there would be no need for geofencing and no need for teleoperation or remote assistance. You could just let driverless vehicles loose anywhere and everywhere with no human in the loop.

A good analogy for Waymo is "centaurs" in chess, teams that combine chess-playing AIs with human players. It's a system that combines AI and human intelligence, not a pure AI system. This is how Waymo describes its remote assistance in a May 2024 blog post:

So, the humans are not remote-controlling the car like they're playing a racing game (à la Gran Turismo); it's more like they're playing The Sims and saying "go here", "do this". It also sounds like they can even draw a specific path for the car to follow. (To visualize what drawing a path looks like, look at the orange ribbon in this video. That's what the car is "predicting" or planning as its path.)

Clearly, this is not the same thing as a car that actually knows how to drive by itself. What's worse, the human's role in the system is invisible, so we don't know what's AI and what's a Mechanical Turk.

Looking at Tesla is probably more informative to see the state of the art. Teslas have cheaper sensors (i.e. no lidar) but the fleet is 1,000x larger, so Tesla has a 1,000x larger source of data to sample from and train on. When you watch the vlogs of Teslas in semi-autonomous mode, you see when the human intervenes. If you watch a vlog of a Waymo trip, you will never be able to tell when a human is intervening remotely or not.

There's also what I mentioned before, which is that strict geofencing allows Waymo to special case the whole thing, down to each individual bush next to the road. This is a quote from a 2018 article about Cruise, not Waymo, but the same concepts apply:

Like other companies, Waymo uses HD maps which require a lot of human annotation and constant updating. So, instead of sending someone out to trim the bush, an employee might annotate the bush as something that causes problems that the car needs to give a wide berth when it's in that portion of that lane, or if it's really problematic they might just mark that street off as somewhere the Waymos don't go.

There's also been this big debate and conversation in autonomous driving tech for many years about hand-coded behaviours vs. machine learned behaviours. Both have well-known issues. Hand-coded behaviours are extremely brittle. It's hard to imagine even a million engineers at a million computers typing enough rules to handle every scenario a car could ever encounter in the world. Machine learned behaviours are brittle in their own way, i.e., neural networks can go crazy when they encounter something weird or novel that doesn't match their training dataset. So, there's been a lot of discussion about the strengths and weaknesses of each approach, and proposals for the right combination or hybridization of them.

This is more on the level of rumour or speculation than reliable knowledge, but what I've heard conjectured is that Waymo engineers solve problems one at a time as they come up in their geofenced areas. In principle, these hand-coded rules might be general purpose, e.g., whenever a car encounters a bush, give it a wide berth. But then you don't know whether these rules will generalize well outside the geofenced areas. What if that's a good policy for bushes in San Francisco or Austin, but another city has bushes planted on the median dividing the street? Then you might have the Waymo getting uncomfortably close to parked cars or pedestrians on the sidewalk. (That's just a made-up example.) The overall idea is that if, statistically, every self-driving car has its own personal engineer writing code to solve the problems it encounters on its daily routes, then maybe that will work for very limited deployments, but it won't scale to 280 million vehicles.

This is a very long comment in response to a very short question. But I think it's worth getting into the weeds on this. If the whole fate of the world depends on whether AGI is imminent or not, then self-driving cars are an important point of comparison to get right. Some people claim that self-driving is solved and use that as evidence to argue for near-term AGI. But self-driving isn't solved and, actually, the fact that it isn't solved raises inconvenient questions for the hypothesis that AGI will be invented soon.

Tesla's fleet does something like 800 years of driving per year, so it's hard to argue the problem is just a lack of data rather than something more fundamental. And is not data inefficiency itself a fundamental problem with the current paradigm of AI? You can try to argue that computer vision and navigating a body or robot around a physical environment is something entirely different than the kind of cognitive capabilities that would constitute AGI, but then are we really saying that AGI won't be able to see, walk, or drive? Really?

I think Waymo, Cruise, et al. are also a good lesson about how technology companies can put up an appearance of inevitable progress racing toward a transformative endpoint, but if you do a little detective work, the story falls apart. I was a bit scandalized when François Chollet said that OpenAI and other LLM companies use a workforce of tens of thousands of full-time contractors (across the whole LLM industry) to apply special-case fixes to mistakes LLMs make, much as Waymo does special casing with HD maps and probably with hand-coded rules as well. This gives the appearance of increasing LLM intelligence, but it's just a Mechanical Turk!

Thanks, I appreciate the skeptical take. I have updated, but not all the way to thinking self-driving is less safe than humans. Another thing I've been concerned about is that self-driving cars would presumably be relatively new, so they would have fewer mechanical problems than the average car. But mechanical problems make up a very small percentage of total accidents, so that wouldn't change things much. I agree that if a lot of babysitting is required, then it would not be scalable. But I estimate that even without the babysitting and geofencing, it would still be safer than humans, with all the drunk/sleepy/angry/teen/over-80 drivers.

I agree this does have some lessons for AGI. Interestingly, though, the progress on self-driving cars is the slowest of all the areas measured in the graph below:

Thanks for the reply!

I want to be very clear on this point. I don’t think safety (narrowly construed) is the crux of the matter. I don’t know if Waymo’s self-driving cars are safer than humans or not on an apples-to-apples basis (i.e. accounting for the biasing effect of geofencing on the data). They might be for all I know.[1] But also there isn’t a single Waymo in the world that drives without some level of human assistance, so we're judging a human-AI hybrid system in any case. As for Waymos on their own with no human intervention, there is no data on that (as far as I know).

What I’m certain of is that Waymos without human assistance, and even with human assistance, are far less competent than humans at driving overall. They can’t drive as well as a human does. Overall competency is the crux of the matter, not safety (narrowly construed). And particularly overall competency without human assistance.

One way to try to get at this distinction is to say that Waymos are not SAE Level 5. Here are the definitions of the SAE Levels of Driving Automation, including Level 5:

Waymo fails to meet the following conditions:

To be clear, Waymo does not claim its vehicles are SAE Level 5.

Do you think Waymos can drive as well as a human does, even without geofencing, even without human assistance? Do you think they are SAE Level 5? If so, why don’t they deploy now to everywhere in the world, or at least everywhere in America, all at once?[2]

Also, if Waymo was able to solve self-driving, why did Cruise Automation give up? (Cruise announced it was shutting down in December 2024, so they had the opportunity to benefit from recent AI progress.)

It seems clear to me the technology isn’t nearly capable of deploying everywhere now, and that’s my point. Safety, narrowly construed, is not the same thing as overall competency. A self-driving car that never does anything because it's paralyzed with caution is 100% safe but has zero competency.

If you construe safety more broadly, as in how safe would Waymos be if there were suddenly 10 million of them driving all over the world with their current level of capabilities, and with no human assistance, then in that broader sense of safety, I think Waymos would certainly be far less safe than the average human driver. If they actually drove — and didn't just freeze up out of caution — then they would definitely crash at a much higher rate than humans do.

Yes, something like 93% of crashes are caused by human error and mechanical problems are only something like 1% (see, e.g., this study).

I think if you really want to get an intuitive sense of this, you should watch some Tesla FSD vlogs on YouTube. It seems to me that if you let Teslas drive without human oversight, they would crash all day every day until, within about a week, there was nothing left but a heaping wreck of millions of cars.

I can sort of back this up with data, although the data is not conclusive. The source for Tesla FSD disengagements that METR cites (in the paper you linked to above) says the average is 31 miles between disengagements and 464 miles to critical disengagements. The average American drives about 13,500 miles per year. If a critical disengagement meant the car would crash without human intervention, this implies, left to its own devices, Tesla FSD would crash around 30 times a year, or about once every two weeks. If 10% of critical disengagements would lead to a crash, that’s around 3 crashes a year, which of course is far too many.[3]
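The arithmetic above is easy to reproduce. This minimal sketch uses the figures quoted in the comment (13,500 miles per year, 464 miles per critical disengagement). The fraction of disengagements that would have become crashes is a hypothetical parameter, exactly as in the comment.

```python
MILES_PER_YEAR = 13_500      # average annual U.S. driving, as quoted above
MILES_PER_CRITICAL = 464     # miles between critical disengagements, as quoted above


def implied_crashes_per_year(miles_per_event: float, crash_fraction: float) -> float:
    """Crashes per year if `crash_fraction` of disengagement events would have
    turned into crashes without a human takeover (a hypothetical assumption)."""
    return MILES_PER_YEAR / miles_per_event * crash_fraction


for fraction in (1.0, 0.1):
    crashes = implied_crashes_per_year(MILES_PER_CRITICAL, fraction)
    days_between = 365 / crashes
    print(f"{fraction:.0%} become crashes: {crashes:.1f} crashes/year, "
          f"one every {days_between:.0f} days")
```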

You could try to explain this by saying Tesla’s technology just really sucks compared to Waymo’s, but why would this be? Lidar? (If this is true, since we’re talking about self-driving as evidence about near-term AGI, why should AI need lasers to see properly?) Not being able to attract top talent? Not enough inference compute in the car? None of these explanations seem to add up, from my point of view. Especially since Tesla has a distinct advantage in being able to sample data from millions of cars, which no other company can do.

The gap between Tesla FSD and superhuman Level 5 driving is quite significant, so if Waymo has really achieved superhuman Level 5 driving, the explanation would need to account for a very large difference between the Waymo and Tesla FSD. (And, for that matter, between Waymo and its defunct former competitors like Cruise, Uber ATG, Voyage, Argo, Pronto, Lyft Level 5, Drive.ai, Apple's Project Titan, and Ghost Locomotion — not to belabour the point, but there's been a lot of cracks at this.) It's hard to quantify how much Tesla FSD would have to improve to be superhuman, but if we just say, naively, the rate of critical disengagements should be the same as the rate of reported accidents for humans, then the rate needs to come down by more than 1,000x. If it's all disengagements (not just critical ones), then it's over 10,000x. You could make different assumptions and maybe get this as low as 10x — maybe drivers are disengaging 100x or 1,000x more than necessary to attain human-level safety — but it's an unrigorous ballpark figure in any case.
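The ballpark improvement factor is just a ratio of distances between events. In this minimal sketch, the disengagement distances are the ones quoted above, but the human figure of 500,000 miles per reported crash is a hypothetical round number chosen for illustration, not a sourced statistic; a properly sourced crash rate should be substituted before relying on the output.

```python
def improvement_factor(human_miles_per_crash: float, ai_miles_per_event: float) -> float:
    """How many times rarer AI events must become to match the human crash rate,
    naively treating every event as equivalent to one crash."""
    return human_miles_per_crash / ai_miles_per_event


# HYPOTHETICAL input for illustration only: miles per reported human crash.
HUMAN_MILES_PER_CRASH = 500_000

for label, miles in [("critical disengagements", 464), ("all disengagements", 31)]:
    factor = improvement_factor(HUMAN_MILES_PER_CRASH, miles)
    print(f"{label}: ~{factor:,.0f}x improvement needed")
```

Because the result scales linearly with the assumed human rate, halving or doubling that input halves or doubles the answer, which is one reason to read the figure only as an order of magnitude.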

On a final note, I'm bothered by METR's focus on tasks with a 50% success rate. I mean, it's fine to track whatever you want, but I disagree with how people interpret what this means for overall AI progress. Humans do many, many tasks with a 99.9%+ success rate. Driving is the perfect example. If you benchmarked AI progress against a 99.9% success rate, I imagine that graph would just turn into one big, flat line on the bottom at 0.0, and what story would that tell about AI progress?

I still haven't taken the time and effort to look through the new information carefully, but while Googling something else entirely, I stumbled on a 2024 paper that, on page 4, analyzes Waymo's crash rate, partially adjusts for the geofencing, and finds that Waymo's crash rate is higher than the crash rate for the average human driver but lower than that of the average Uber or Lyft driver. However, the authors give a big caveat: "the industry is very far from making meaningful statistical comparisons with human drivers" and "these data should be taken as preliminary estimates."

In the long run, it would be a multi-trillion-dollar revenue opportunity, possibly the largest financial opportunity in the history of capitalism so far. The unit economics may be a problem now, but they would surely improve vastly through economies of scale, particularly the mass manufacturing of the lidar. Seizing that opportunity seems like the logical thing to do.

Alphabet has $98 billion in cash and short-term investments. For comparison, the total cumulative capital Tesla raised from 2008 until early 2018, including both debt and equity, was $38 billion. In Q1 2018, Tesla produced about 35,000 vehicles. Up to that point, the company had been unprofitable and cash-flow negative in almost every quarter of its history, so the capital it raised represents the capital it invested; Tesla wasn't reinvesting profits or free cash flow. So, Alphabet definitely has the capital to fund scaling up Waymo if the opportunity is really there.

The Tesla drivers' site defines the differences in how drivers should report critical disengagements vs. overall disengagements:

Less than 100% of critical disengagements would lead to collisions. Just because you run a red light, blow through a stop sign, or drive against oncoming traffic doesn't guarantee you'll crash. But I would also have to think that more than 0% of non-critical disengagements would lead to collisions. I have to imagine some of these behaviours modestly increase the risk of a crash, if only by confusing other drivers. Based on this data alone, we also can't tell how many of these less serious errors would compound over time if the car were left to its own devices, or would otherwise lead to a more dangerous situation.

As a side note, I'm not sure how reliable these disengagement rates are in any case, since they're self-reported by Tesla drivers, and I don't fully trust self-reported data like that.

Interesting, but AI says:

"Exceptions exist where the lead driver may share or bear full fault, such as if they:

So if plastic bags are not a valid reason to stop, it sounds like the Waymo would be at fault for the rear-end accident.

I agree other companies' failures are evidence for your point. I think Waymo is trying to scale up, and it is limited by the supply of cars at this point.

It sounds like Tesla solves the problem of unreliability by having the driver take over, and Waymo solves it by having the car stop and a remote operator take over. So if that has to happen every 464 miles (say, every 10 hours of driving) and it takes the remote operator five minutes to deal with each case (probably less, but there would be downtime), then you would need only one remote operator per 120 cars. I think that's pretty scalable. A system that can drive anywhere would not be as safe as Waymo's claim of roughly 20 times as safe as human drivers (five times fewer crashes, with most of the remaining crashes being the other drivers' fault), but I still think it would be safer than the average human driver.
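The arithmetic in the comment above can be checked with a quick back-of-the-envelope sketch. This is not Waymo's actual staffing model: it assumes one intervention every ~10 hours of driving, ~5 minutes of operator time per intervention, and a fully utilized operator. The 25% at-fault share is a hypothetical value, chosen because it is what turns "five times fewer crashes" into "roughly 20 times as safe".

```python
# Back-of-the-envelope sketch; all inputs are assumptions from the comment,
# not figures published by Waymo.

def vehicles_per_operator(hours_between_interventions: float,
                          minutes_per_intervention: float) -> float:
    """Cars one fully utilized remote operator could cover."""
    return hours_between_interventions * 60 / minutes_per_intervention


def safety_multiple(crash_reduction: float, at_fault_share: float) -> float:
    """Safety multiple when only the robotaxi's at-fault crashes are counted.

    crash_reduction: how many times fewer crashes than human drivers.
    at_fault_share: hypothetical fraction of the remaining crashes the
    robotaxi caused.
    """
    return crash_reduction / at_fault_share


print(vehicles_per_operator(10, 5))  # 120.0
print(safety_multiple(5, 0.25))      # 20.0
```

Halving the operator time per intervention, or doubling the interval between interventions, doubles the number of cars per operator, which is why the ratio looks scalable on paper.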

I agree that 50% is not realistic for many tasks. But they do plot some data for higher success rates:

Roughly, I think going from 50% to 99.9% success would shrink the time horizon from 2 hours to 4 seconds: not quite 0, but very bad!
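To see why the horizon collapses so sharply at high reliability, here is a toy model. It is an illustrative assumption, not METR's fitting method: the agent fails at a constant rate per unit of task time, so P(success on a task of length t) = exp(-rate × t). Under that assumption, a 2-hour 50% horizon implies a 99.9% horizon of roughly 10 seconds, the same order of magnitude as the rough reading from the plot.

```python
import math

# Toy constant-hazard model (an assumption for illustration only):
# P(success on a task of length t) = exp(-rate * t).


def rate_from_horizon(t50_seconds: float) -> float:
    """Failure rate implied by a given 50% success horizon."""
    return math.log(2) / t50_seconds


def horizon(rate: float, success: float) -> float:
    """Longest task length (seconds) completed with probability `success`."""
    return -math.log(success) / rate


rate = rate_from_horizon(2 * 3600)     # 50% horizon of 2 hours
print(round(horizon(rate, 0.999), 1))  # 10.4 (seconds)
```

Real success curves need not follow a constant hazard, so this only shows that a drop of two to three orders of magnitude is what you would expect from even the simplest model.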

Here's what a California law firm blog says about it:

A Texas law firm blog says that if the lead driver stops abruptly for no reason, they could at most be found partially at fault, but not wholly at fault.

Thank you. I'm not convinced that the supply of cars fitted with Waymo's hardware is the primary limitation. In 2018, Waymo and Jaguar announced a deal where Jaguar would produce up to 20,000 I-Pace hatchbacks for Waymo. The same year, Waymo and Chrysler announced a similar deal for up to 62,000 Pacifica minivans. Seven years later, Waymo's fleet is still only 2,000 vehicles. I would bet Waymo winds down operations before deploying 82,000 total vehicles.

Alphabet deciding to open up Waymo to external investment is an interesting signal, especially given that Alphabet has $98 billion in cash and short-term investments. This started in 2020 and is ongoing. The more optimistic explanation I've heard is that Alphabet felt some pressure from employees to give them equity-based compensation, which required a valuation for Waymo, which in turn required external investors. A more common explanation is simply cost discipline: Alphabet is seeking to reduce its cash burn. But that also means reducing Alphabet's own equity ownership of Waymo, and therefore its share of the future opportunity.

Something I had completely forgotten is that Waymo shut down its self-driving truck program in 2023. This is possibly a bad sign. It's interesting given that Aurora Innovation pivoted from autonomous cars to autonomous trucks, which I believe was on the theory that trucks would be easier. Anthony Levandowski also pivoted from cars to semi-trucks when he founded Pronto AI because semi-trucks were an easier problem, but then pivoted again to off-road dump trucks that haul rubble at mines and quarries (usually driving back and forth in a straight line over and over, in an area with no humans nearby).

I couldn't find any reliable information for Waymo, but I found a 2023 article where a Cruise spokesperson said there was one human doing remote assistance for every 15 to 20 autonomous vehicles. That article cites a New York Times article that says a human intervention was needed every 2.5 to 5 miles.

That's interesting, thanks. My other criticism, which is maybe not a criticism of METR's work itself but rather of how other people interpret it, is just how narrow these tasks are. Inferring rapid AGI progress from the ability to do longer and longer tasks would seem to require several logical steps between the graph and that conclusion, and I don't think I've ever seen anyone spell out that logic. Which tasks you look at and how they are graded is another crucial thing to consider.

I don't understand the details of the coding, math, or question and answer (Q&A or QA) benchmarks, but is the "time" dimension of these not just LLMs producing larger outputs, i.e., using more tokens? And, if so, why would LLMs performing tasks that use more tokens, although it may well be a sign of LLM improvement, indicate anything about AGI progress?

Many other tasks would seem much more interesting to me if we're trying to assess AGI progress, such as:

If you choose only the kinds of tasks that are least challenging for LLMs, and leave out the kinds of tasks that tend to confound LLMs but whose successful completion would indicate the sort of capabilities AGI would require, then aren't you just measuring LLM progress and not AGI progress?

This bugs me because I've seen people post the METR time horizon graph as if it just obviously indicates rapid progress toward AGI, but, to me, it obviously doesn't indicate that at all. I imagine if you asked AI researchers or cognitive scientists, you would get a lot of people agreeing that the graph doesn't indicate rapid progress toward AGI.

I mean, this is an amazing graph and a huge achievement for DeepMind, but it isn't evidence of rapid progress toward AGI:

It's just the progress of one AI system, AlphaStar, on one task, StarCraft.

You could make a similar graph for MuZero showing performance on 60 different tasks, namely, chess, shogi, and go, plus 57 Atari games. What does that show?

If you make a graph with a few kinds of tasks on it that LLMs are good at, like coding, math, and question and answer on automatically gradable benchmarks, what does that show? How do you logically connect that to AGI?

Incidentally, how good is o3 (or any other LLM) at chess, shogi, go, and Atari games? Or at StarCraft? If we're making progress toward artificial general intelligence, shouldn't one system be able to do all of these things?

DeepMind announced Gato in 2022, which tried to combine as many things as possible. But (correct me if I'm wrong) Gato was worse at all those things than models trained to do just one or a few of them. So, that's anti-general artificial intelligence.

I just see so much unclear reasoning when it comes to this sort of thing. The sort of objections I'm bringing up are not crazy complicated or esoteric. To me they just seem straightforward and logical. I imagine you would hear these kinds of objections or better ones if you asked some impartial AI researchers whether they thought the METR graph was strong evidence for rapid progress toward AGI, indicating AGI is likely within a decade. I'm sure somebody somewhere has stated these kinds of objections before. So, what gives?

The whole point of my post above is that the majority of AI experts think a) LLMs probably won't scale to AGI and b) AGI is probably at least 20 years away, if not much longer. So, why don't people in EA engage more with experts who think these things and ask them why they think that? I'm sure they could do a much better job coming up with objections than me. Then you can either accept that the objections are right and change your mind, or come up with a convincing reply to them. But failing to anticipate the objections, or not talking to people knowledgeable enough to raise them, is very strange. What kind of research is that? That's so unrigorous! Where's the due diligence?

I think EA is in this weird, crazy filter bubble/echo chamber when it comes to AGI: if there's any expert consensus on AGI, it's against the assertions many people in EA make about AGI.[1] And if you try to point out the fairly obvious objections or problems with the arguments or evidence put forward, sometimes people can be incredibly scornful.[2] I think if people do this, they should just write "I have bad epistemic practices" on their forehead in permanent marker. Then they'll at least save people the time of trying to talk to them. Personally attacking or insulting someone just for disagreeing with them (as most experts do, I might add!!) shows they don't want to hear the evidence or reasoning that might change their mind. Or maybe they do want to, on some level, but, for whatever reason, they're sure acting like they don't.

In my very small way, I am trying to nudge people in EA to apply more rigour to their arguments and evidence and to stimulate curiosity about what the dissenting views are. E.g., if you learn that most AI experts disagree with you and you didn't know that before, that should make you curious about why they disagree, and it should introduce at least a little doubt about your own views.

If anyone has suggestions on how to stimulate more curiosity about dissenting views on near-term AGI among people in EA, please leave a reply or message me.

As I mentioned in the post, 76% of AI experts think it's unlikely or very unlikely that current AI methods will scale to AGI, but a while ago Steven Byrnes commented that when he talked to "a couple dozen AI safety / alignment researchers at EAG bay area", he spoke to "a number of people, all quite new to the fields of AI and AI safety / alignment, for whom it seems to have never crossed their mind until they talked to me that maybe foundation models won’t scale to AGI". That's bananas. That's a huge problem. How can the view held by a three-quarters majority in a field not even be known as a concept to people in EA who are trying to work in that field?

Some recent examples: here (the comment) and here (the downvotes). These are comparatively mild, because I honestly can't stomach looking at the worse examples right now.

That's very helpful! I'm guessing that since 2023, one person could manage more vehicles than that, and the ratio will continue to improve. But let's just work with that number. I don't think the pay for a person solving problems for Waymos would need to be much higher than a taxi driver's, but even if it is 1.5x or 2x, that would still be roughly an order of magnitude reduction in labor cost. Of course there is additional hardware cost for autonomous vehicles, but I think that can be paid off with a reasonable duty cycle. So if you grant that geofencing accounts for less than the full 20x safety advantage, I think there is an economic case for the chimeras, as you say.
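The labor-cost claim can be made concrete with a small sketch. It uses the Cruise figure of 15 to 20 vehicles per remote assistant and the hypothetical 1.5x to 2x pay premium from the comment, and it deliberately ignores hardware, engineering, and other overhead costs, so it covers only the remote-assistance labor line.

```python
# Sketch of the remote-assistance labor cost per vehicle, relative to paying
# one taxi driver per vehicle. Pay multipliers are hypothetical; the vehicle
# counts are the 2023 Cruise figure. Hardware and engineering are ignored.


def labor_cost_ratio(pay_multiplier: float, vehicles_per_assistant: float) -> float:
    """Remote-assistance labor cost per vehicle, as a fraction of one driver."""
    return pay_multiplier / vehicles_per_assistant


for pay in (1.5, 2.0):
    for vehicles in (15, 20):
        ratio = labor_cost_ratio(pay, vehicles)
        print(f"pay {pay}x, {vehicles} vehicles per assistant: "
              f"{ratio:.3f} of a driver ({1 / ratio:.1f}x cheaper)")
```

Across those inputs the reduction ranges from about 7.5x (2x pay, 15 vehicles) to about 13x (1.5x pay, 20 vehicles), which is what "roughly an order of magnitude" refers to.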

My understanding is that 50% success on a 1-hour task means a human expert takes ~1 hour to complete it, but the computer time is not specified.
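One way to see why the horizon is measured in human time: a time horizon can be read off a logistic curve that predicts success probability from the log of the time a human expert takes. The sketch below assumes that form (with a base-2 log) and uses hypothetical coefficients `a` and `b`, not METR's fitted values. The model's own runtime never enters the calculation.

```python
import math

# Assumed model (coefficients are hypothetical, not METR's fitted values):
#     p(t) = 1 / (1 + exp(-(a - b * log2(t))))
# where t is the time a human expert takes, in minutes.


def success_probability(t_minutes: float, a: float, b: float) -> float:
    """Predicted probability that the AI completes a task of human length t."""
    return 1 / (1 + math.exp(-(a - b * math.log2(t_minutes))))


def horizon(p: float, a: float, b: float) -> float:
    """Human task length (minutes) at which predicted success equals p."""
    logit = math.log(p / (1 - p))
    return 2 ** ((a - logit) / b)


b = 0.6                    # hypothetical slope
a = b * math.log2(60)      # chosen so the 50% horizon is 60 human-minutes
print(horizon(0.5, a, b))  # approximately 60
print(horizon(0.8, a, b))  # a stricter threshold gives a shorter horizon
```

The slope `b` controls how quickly the horizon shrinks as the required success rate rises, which is why the 50% horizon alone says little about high-reliability performance.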

As for AGI, I do think that some people conflate a 50% success time horizon of ~one month on coding tasks with AGI, when it really only means a superhuman coder (I think a 50% success rate is appropriate for long coding tasks, because humans rarely produce code that works on the first try). However, once you get to a superhuman coder, the logic goes, progress in AI research will dramatically accelerate. In AI 2027, I think there was an assumption along the lines that it would take 90 years at the current pace of progress to get something like AGI, but once things accelerate, it ends up happening in a year or two. Another worry is that you might not need AGI for something catastrophic to happen, because AI could become superhuman at a limited number of tasks, like hacking.

You're right it's not fully general, but AI systems are much more general than they were 7 years ago.

LessWrong is also known for having very short timelines, but I think the last survey indicated a median year for AGI of 2040. So I do think it is a vocal minority in EA and LW that has median timelines before 2030. However, I do agree with them that we should be spending significant effort on AI safety, because it's plausible that AGI comes in the next five years.

Though it's true that the expert surveys you cite have much longer timelines, I would guess that if you took a poll of people in the AI labs (which I think would qualify as a group of experts - biased though they may be, they do have inside information), their median timelines would be before 2030.

This could be true, but you have to account for other elements of the cost structure. For example, can you improve the ratio of engineers to autonomous vehicles from the current ratio of around 1:1 or 1:2 to something like 1:1000 or 1:10,000?

It seems like Waymo is using methods that scale with engineer labour rather than learning methods that scale with data and compute. So, to deploy more vehicles in more areas would require commensurately more engineers, and, in addition to being too expensive, there are simply not enough of them on Earth.

It doesn’t mean that, either. METR has found that, in at least one real-world setting (experienced open-source developers working on their own projects), using early-2025 frontier AI tools made developers slower than not using AI at all.

Automatically gradable benchmarks generally don’t seem to have much to do with the ability to do tasks in the real world. So, predicting real world performance based on benchmark performance seems to just be an invalid inference.

Anecdotally, what I hear from people who say they find AI coding assistants useful is that it saves them the time it would take to copy and paste code from Stack Exchange. I have never heard anything along the lines of "it came up with a new idea" or "it was creative". Yet this is what human-level coding would require.

The obvious objection to this as it pertains to the initial advent of AGI, rather than to superintelligence, is that this is a chicken-and-egg problem. If you need AGI to do AGI R&D, AGI can’t help you develop AGI because you haven’t invented it yet. You would need a sub-human AI that can do tasks that speed up AI research and AI engineering. And that seems dubious. Is automating this kind of work not an AGI-level problem?

I don’t know if I believe this. LLMs are impressive, but their scope is fairly narrow. They have memorized most of the important digital/digitized text that’s available. Their next-token prediction and everything layered on top of that like fine-tuning to follow instructions, reinforcement learning from human feedback (RLHF), and Chain of Thought results in some impressive behaviours. But they are extremely brittle. They routinely make errors on very basic tasks.

I think of LLMs more as another type of AI system that is proficient in one area, comparable to game-playing RL agents. LLMs are good at many text-related tasks (including math and coding, which are also text), but they aren’t able to generalize beyond the text-related tasks they have massive amounts of training data for. They don’t do well outside of text-related tasks, they don’t do well with novelty, they frequently fail to reason properly, etc.

So, I’m not sure LLMs are all that much more general than previous systems like MuZero and AlphaZero.

Part of generality or generalization is that you should see positive transfer learning, i.e., having skills in some domains should improve the AI system’s skills in other domains. But what we seem to see is the opposite: negative transfer learning. Training an AI on many diverse, heterogeneous tasks from multiple domains seems to hurt performance. That’s narrowness, not generality.

That’s very interesting, if you remember correctly. I would be interested in seeing survey data both for LessWrong and for EA.

I don't think you can have it both ways: a superhuman coder (one that is actually competent, which you don't think AI assistants are now) is relatively narrow AI, but would accelerate AI progress. A superhuman AI researcher is more general (and would drastically speed up AI progress), but is not fully general. I would argue that LLMs are already more general than the range of tasks an AI researcher does (though LLMs are currently not good at all of those tasks), because LLMs can competently discuss philosophy, economics, political science, art, history, engineering, science, etc.

I'm claiming that they could approach an overall staff-to-vehicle ratio of 1:10 if the number of real-time helpers (who don't have to be engineers) and vehicles were dramatically scaled up, and that's enough for profitability.

The 2023 LessWrong survey was median 2040 for singularity, and 2030 for "By what year do you think AI will be able to do intellectual tasks that expert humans currently do?". The second question was ambiguous, and some people put it in the past. I haven't seen a similar survey result for EAs, but I expect longer timelines than LW.

I definitely disagree with this. Hopefully what I say below will explain why.

The general in artificial general intelligence doesn't just refer to having a large repertoire of skills. Generality is about the ability to learn to generalize beyond what a system has seen in its training data. An artificial general intelligence doesn't just need to have new skills, it needs to be able to acquire new skills, and to acquire new skills that have never existed in history before by developing them itself — just as humans do.

If a new video game comes out today, I'm able to play that game and develop a new skill that has never existed before.[1] I will probably get the hang of it in a few minutes, with a few attempts. That's general intelligence.

AlphaStar was not able to figure out how to play StarCraft using pure reinforcement learning. It just got stuck using its builders to attack the enemy, rather than figuring out how to use its builders to make buildings that produce units that attack. To figure out the basics of the game, it needed to do imitation learning on a very large dataset of human play. Then, after imitation learning, to get as good as it did, it needed to do an astronomical amount of self-play, around 60,000 years of playing StarCraft. That's not general intelligence. If you need to copy a large dataset of human examples to acquire a skill and millennia of training on automatically gradable, relatively short time horizon tasks (which often don't exist in the real world), that's something, and it's even something impressive, but it's not general intelligence.

Let's say you wanted to apply this kind of machine learning to AI R&D. The necessary conditions don't apply. You don't have a large dataset of human examples to train on. You don't have automatically gradable, relatively short time horizon tasks with which to do reinforcement learning. And if the tasks require real world feedback and can't be simulated, you certainly don't have 60,000 years.

I like what the AI researcher François Chollet has to say about this topic in this video from 11:45 to 20:00. He draws the distinction between crystallized behaviours and fluid intelligence, between skills and the ability to learn skills. I think this is important. This is really what the whole topic of AGI is about.

Why have LLMs absorbed practically all text on philosophy, economics, political science, art, history, engineering, science, and so on and not come up with a single novel and correct idea of any note in any of these domains? They are not able to generalize enough to do so. They can generalize or interpolate a little bit beyond their training data, but not very much. It's that generalization ability (which is mostly missing in LLMs) that's the holy grail in AI research.

There are two concepts here. One is remote human assistance, which Waymo calls fleet response. The other is Waymo's approach to the engineering problem. I was saying that I suspect Waymo's approach to the engineering problem doesn't scale. I think it probably relies on engineers doing too much special casing that doesn't generalize well when a modest amount of novelty is introduced. So, Waymo currently has something like 1,500 engineers to operate in the comparatively small geofenced areas where it currently operates. If it wanted to expand where it drives to a 10x larger area, would its techniques generalize to that larger area, or would it need to hire commensurately more engineers?
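The scaling worry can be put in numbers with a short sketch. It assumes ~1,500 engineers (the figure in the comment) and a fleet of ~2,000 vehicles (the fleet size mentioned earlier in the thread), and it compares two stylized cases: engineering headcount growing in proportion to service area, versus a method that generalizes so headcount stays flat. Both cases are illustrative assumptions, not descriptions of how Waymo actually staffs.

```python
# Two stylized scaling cases (assumptions, not Waymo data):
# "scales with area": engineers grow in proportion to the service area;
# otherwise: the method generalizes and headcount stays fixed.

ENGINEERS = 1_500
VEHICLES = 2_000


def engineers_needed(area_multiple: int, scales_with_area: bool) -> int:
    """Engineering headcount needed to serve `area_multiple` times today's area."""
    return ENGINEERS * area_multiple if scales_with_area else ENGINEERS


print(f"engineers per vehicle today: {ENGINEERS / VEHICLES:.2f}")  # 0.75
print(engineers_needed(10, scales_with_area=True))   # 15000
print(engineers_needed(10, scales_with_area=False))  # 1500
```

Roughly 0.75 engineers per vehicle is consistent with the 1:1 to 1:2 ratio discussed above; the open question is which of the two cases Waymo's approach is closer to.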

I suspect that Waymo faces the problem of trying to do far too much essentially by hand, just adding incremental fix after fix as problems arise. The ideal would be to, instead, apply machine learning techniques that can learn from data and generalize to new scenarios and new driving conditions. Unfortunately, current machine learning techniques do not seem to be up to that task.

Thank you. Well, that isn't surprising at all.

Okay, well maybe the play testers and the game developers have developed the skill before me, but then at some point one of them had to be the first person in history to ever acquire the skill of playing that game.

Those AI researcher forecasts are problematic: it just doesn’t make sense to put the forecasts for when AIs can do any task and when they can do any occupation so far apart. It suggests respondents aren’t putting much thought into it or thinking carefully. That is a principled reason to pay more attention to both skeptics and boosters who actually put in the work to make their views clear, internally coherent, and convincing.

I agree that the enormous gap of 69 years between High-Level Machine Intelligence and Full Automation of Labour is weird and calls the whole thing into question. But I think all AGI forecasting should be called into question anyway. Who says human beings should be able to predict when a new technology will be invented? Who says human beings should be able to predict when the new science required to invent a new technology will be discovered? Why should we think forecasting AGI beyond anything more than a wild guess is possible?
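The size of the inconsistency can be read directly off the aggregate forecasts quoted in the post. Since being able to do any task would seem to imply being able to do any occupation, a coherent set of forecasts would put the two dates close together; the sketch below only computes the gaps and assumes nothing beyond the quoted years.

```python
# Aggregate forecasts quoted in the post: year by which each probability is reached.
HLMI = {0.10: 2027, 0.50: 2047}  # High-Level Machine Intelligence (any "task")
FAOL = {0.10: 2037, 0.50: 2116}  # Full Automation of Labour (any "occupation")


def gap_years(p: float) -> int:
    """Years between the HLMI and FAOL forecasts at probability p."""
    return FAOL[p] - HLMI[p]


for p in (0.10, 0.50):
    print(f"{p:.0%}: {gap_years(p)} years")
```

The gap widens from 10 years at the 10% level to 69 years at the 50% level.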

I don't see a lot of rigour, clarity, or consistency with any AGI forecasting. For example, Dario Amodei, the CEO of Anthropic, predicted in mid-March 2025 that by mid-September 2025, 90% of all code would be written by AI. When I brought this up on the EA Forum, the only response I got was just to deny that he ever made this prediction, when he clearly did, and even he doesn't deny it, although I think he's trying to spin it in a dishonest way. If when a prediction about progress toward AGI is falsified people's response is to simply deny the prediction was made in the first place, despite it being on the public record and discussed well in advance, what hope is there for AGI forecasting? Anyone can just say anything they want at any time and there will be no scrutiny applied.

Another example that bothers me was when the economist Tyler Cowen said in April 2025 that he thinks o3 is AGI. Tyler Cowen isn't nearly as central to the AGI debate as Dario Amodei, but he's been on Dwarkesh Patel's podcast to discuss AGI and he's someone who is held in high regard by a lot of people who think seriously about the prospect of near-term AGI. I haven't really seen anyone criticize Cowen's claim that o3 is AGI, although I may simply have missed it. If you can just say that an AI system is AGI whenever you feel like it, then you can just say your prediction is correct when the time rolls around no matter what happens.

Edit: I don’t want to get lost in the sauce here, so I should add that I totally agree it’s way more interesting to listen to people who go through the trouble of thinking through their views clearly and who express them well. Just saying a number doesn’t feel particularly meaningful by contrast. I found this recent video by an academic AI researcher, Edan Meyer, wonderful in that respect:

The point of view he presents in this video seems very similar to what the Turing Award-winning pioneer of reinforcement learning Richard Sutton believes, but this video is by far the clearest and most succinct statement of that sort of reinforcement learning-influenced viewpoint I’ve seen so far. I find interviews with Sutton fascinating, but the way he talks is a bit more indirect and enigmatic.

I also find Yann LeCun (another Turing Award winner, for his pioneering contributions to deep learning) to be a compelling speaker on this topic. I think many people who believe in near-term AGI from scaling LLMs have turned Yann LeCun into some sort of enemy image in their minds, probably because his style is confrontational and he speaks with confidence and force against their views. I often see people unfairly misrepresent and caricature what LeCun has to say when they should listen carefully, interpret generously, and engage with the substance (and do so respectfully). Just dismissing your most well-qualified critics out of hand is a great way to end up wrong and woefully overconfident.

I find Sutton and LeCun’s predictions about the timing of AGI or human-level AI kind of interesting, but they are much less interesting than what Sutton and LeCun have to say about the design principles of intelligence, which is fascinating and feels incredibly important. Their predictions on the timing are pretty much the least interesting part.

Zvi has criticized both Amodei's and Cowen's claims.