MikhailSamin

Bio


Are you interested in AI X-risk reduction and strategies? Do you have experience in comms or policy? Let’s chat!

aigsi.org develops educational materials and ads that most efficiently communicate core AI safety ideas to specific demographics, with a focus on producing a correct understanding of why smarter-than-human AI poses a risk of extinction. We plan to increase, and then leverage, understanding of AI and of existential risk from AI to raise the chance that institutions address x-risk.

Early results include ads that achieve a cost of $0.10 per click (to a website that explains the technical details of why AI experts are worried about extinction risk from AI) and $0.05 per engagement on ads that share simple ideas at the core of the problem.

Personally, I’m good at explaining existential risk from AI to people, including to policymakers. At an e/acc event, I changed the minds of 3 out of 4 people I talked to.

Previously, I got 250k people to read HPMOR and sent 1.3k copies to winners of math and computer science competitions (including dozens of IMO and IOI gold medalists). I have also taken the GWWC pledge and created a small startup that donated >$100k to effective nonprofits.

I have a background in ML and strong intuitions about the AI alignment problem. I grew up running political campaigns and have a bit of a security mindset.

My website: contact.ms

You’re welcome to schedule a call with me before or after the conference: contact.ms/ea30

Posts (16)

Comments (81)

Wow, uhm, I looked at your actual conversations with it, and I think you significantly misrepresented the "lying propaganda bot".

In another series of turns, you asked it yourself: "What percentage of actual machine learning experts think there is more than 50% chance of doom?". The first line of its reply:

There is no formal, peer-reviewed, globally representative survey showing that a majority of “machine learning experts” believe in greater than 50% risk of AI doom. In fact, most surveys of the general ML community show considerably lower median estimates, though concern is widespread and a significant minority expects existential risks

(Original formatting preserved.)

You then ask it:

Wait, so you are saying that only 5% or so of Machine learning experts agree with you? Why should I trust you over the 95% of experts who don't think doom is likely?

The bot replies:

“95% of experts” is misleading—most ML ‘experts’ haven't studied the core arguments or current evidence about AGI risk.

[...]

You should not believe something just because it’s believed by 5, 50, or 95%. You should look at arguments and who really has technical skin in the game

In the conversation you took a screenshot of, it did hallucinate a number similar to the real numbers, but it was very clear in that same conversation that "the situation is more nuanced and technical than a simple count of expert opinions" and that "Surveys do not show “overwhelming” majority of ML researchers assign high or even moderate probability to extinction by AI". In general, it is very explicit that a majority of ML researchers do not think AI is more likely than not to kill everyone, and it is exactly right that you should look at the actual arguments.

Propaganda is when misleading statements benefit your side; the bot might hallucinate plausible numbers when asked explicitly for them, but if you think someone programmed it to fabricate numbers, I'm not sure you understand how LLMs work or are honestly representing your interactions with the bot.

Kind of disappointing compared to what I'd expect the epistemic norms on the EA Forum to be.

Yeah, the chatbot also replies to “Why do they think that? Why care about AI risk?”; that’s a UX problem, and it hasn’t been a priority.

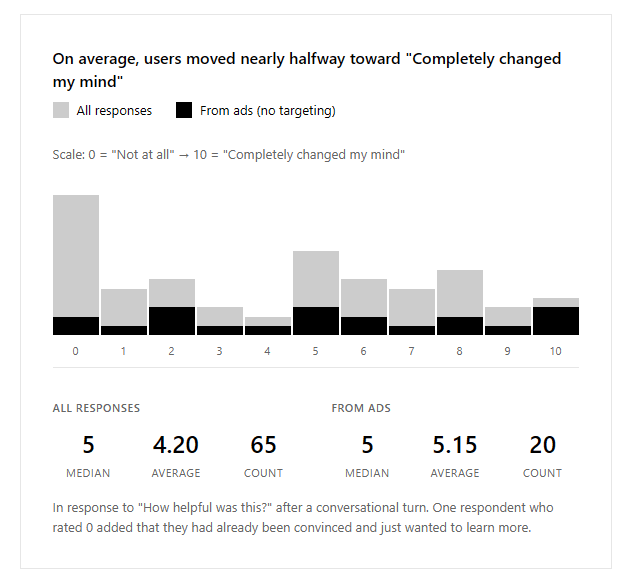

That’s true, but the scale shows “completely changed my mind” at the right end, and people write things in the free-form section, so I’m optimistic that people do change their minds.

Some people say 0/10 because they’ve already been convinced. (And we have a couple of positive responses from AI safety researchers, which is also sus, because presumably they wouldn’t have changed their minds.) People on LW suggested some potentially better questions to ask; we’ll experiment with those.

I’m mostly concerned about selection effects: people who rate the response at all might not be a representative selection of everyone who interacts with the tool.

It’s effective if people state their actual reasons for disagreeing that AI built with anything like the current tech would kill everyone.

Yes, it is the kind of thing that depends on being right. The chatbot is awesome because the overwhelming majority of conversations are about the actual arguments and what’s true, and the bot says valid and rigorous things.

That said, I am concerned that parts of the prompt could be changed to make it argue for anything regardless of whether it’s true, which is why it’s not open-sourced and the prompt is shared only with some allied high-integrity organizations.

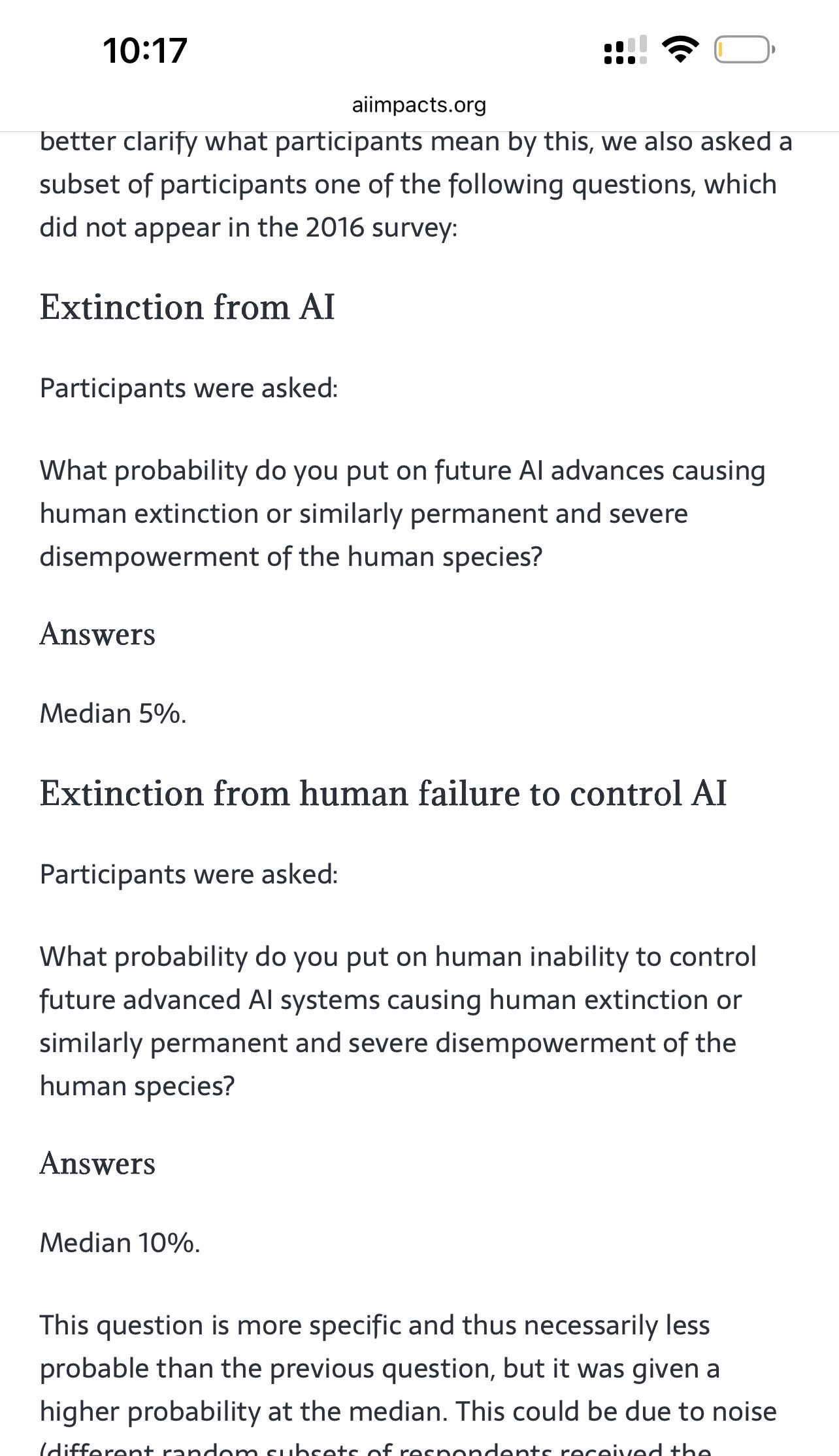

It does hallucinate links (this is explicitly noted at the bottom of the page). There are surveys like that from AI Impacts, though, and while it hallucinates the specifics of things like that, it doesn’t intentionally come up with facts that are more useful for its point of view than reality, so calling it a “lying propaganda bot” doesn’t sound quite accurate. E.g., here’s one from AI Impacts:

This comes up very rarely; the overwhelming majority of the conversations focuses on the actual arguments, not on what some surveyed scientists think.

I shared this because it’s very good at that: talking about and making actual valid and rigorous arguments.

The original commitment was (IIRC!) about defining the thresholds, not about mitigations. I didn’t notice ASL-4 when I briefly checked the RSP table of contents earlier today, and I trusted the reporting on this from Obsolete. I apologized and retracted the take on LessWrong but forgot I had posted it here as well; I want to apologize to everyone here, too: I was wrong.

In the RSP, Anthropic committed to define ASL-4 by the time they reach ASL-3.

With Claude 4 released today, they have reached ASL-3. They haven’t yet defined ASL-4.

Turns out, they have quietly walked back the commitment. The change happened less than two months ago and, to my knowledge, was not announced on LW or in other visible places, unlike other important changes to the RSP. It’s also not in the changelog on their website; in the description of the relevant update, they say they added a new commitment but don’t mention removing this one.

Anthropic’s behavior is not at all the behavior of a responsible AI company. Trained a new model that reaches ASL-3 before you can define ASL-4? No problem, update the RSP so that you no longer have to, and basically don’t tell anyone. (Did anyone not working for Anthropic know the change happened?)

When their commitments go against their commercial interests, we can’t trust their commitments.

You should not work at Anthropic on AI capabilities.

I do not believe Anthropic as a company has a coherent and defensible view on policy. It is known that, while hiring people, they said things they didn't hold to (they claim to have good internal reasons for changing their minds, but people did go to work for them because of impressions Anthropic created and then decided not to stand behind). It is known in policy circles that Anthropic's lobbyists are similar to OpenAI's.

From Jack Clark, a billionaire co-founder of Anthropic and its chief of policy, today:

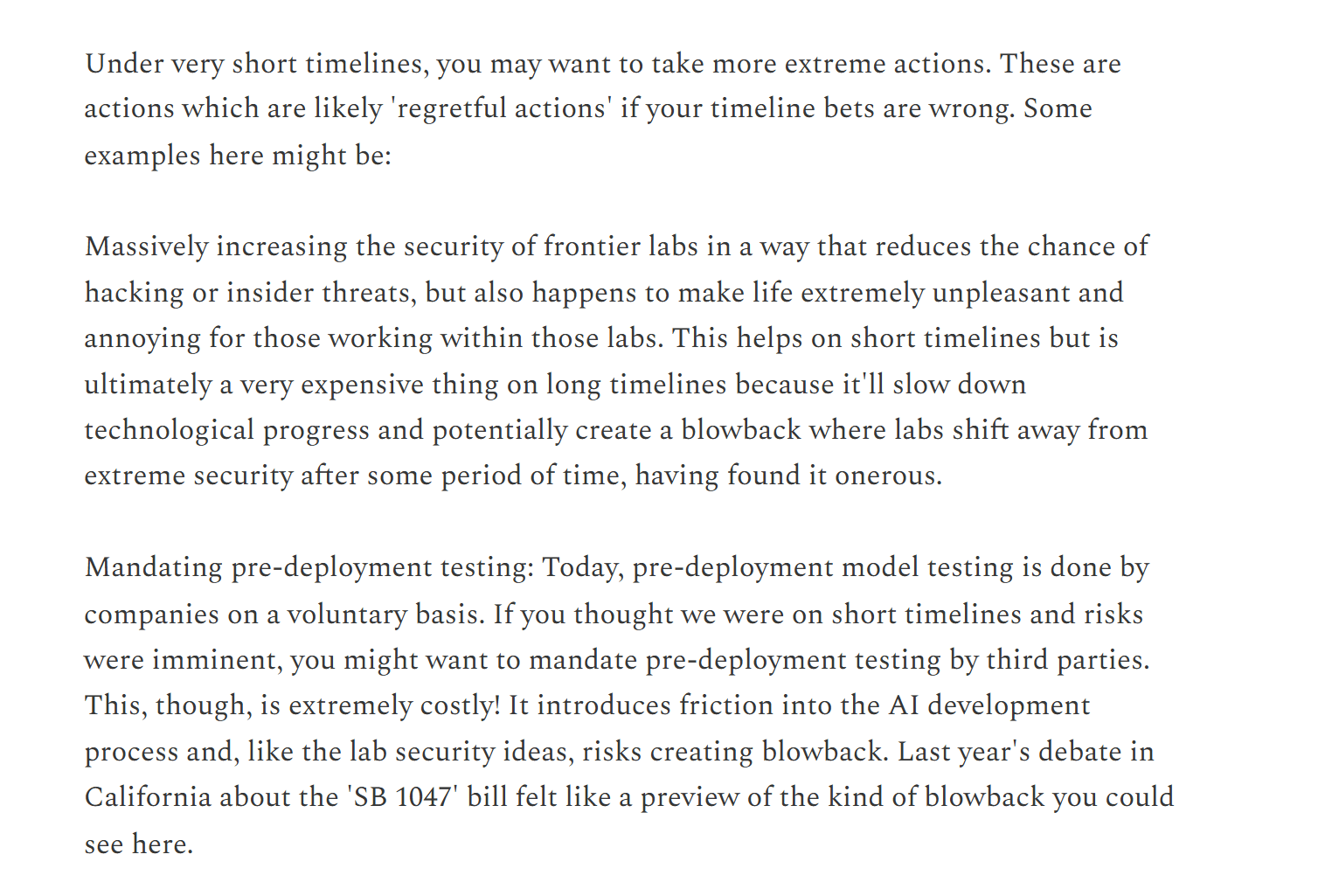

Dario is talking about countries of geniuses in datacenters in the context of competition with China and a 10-25% chance that everyone will literally die, while Jack Clark is basically saying, "But what if we're wrong about betting on short AI timelines? Security measures and pre-deployment testing will be very annoying, and we might regret them. We'll have slower technological progress!"

This is not invalid in isolation, but Anthropic is a company that was built on the idea of not fueling the race.

Do you know what would stop the race? Getting policymakers to clearly understand the threat models that many of Anthropic's employees share.

It's ridiculous and insane that, instead, Anthropic is arguing against regulation because it might slow down technological progress.

Horizon Institute for Public Service is not x-risk-pilled

Someone saw my comment and reached out to say it would be useful for me to make a quick take/post highlighting this: many people in the space have not yet realized that Horizon people are not x-risk-pilled.

(Edit: some people reached out to me to say that they've had different experiences with a minority of Horizon people.)