Charlie_Guthmann

Bio

Pre-doc at Data Innovation & AI Lab

Previously worked in options HFT and tried building a social media startup

Founder of the Northwestern EA club

Posts 10

Comments 266

It's quite easy; I actually already did it with Printful + Shopify. I stalled out because (1) I realized it's much more confusing to deal with all the copyright stuff and stepping on toes (I don't want to be competing with EA itself or EA orgs, and didn't feel like coordinating with a bunch of people), and (2) you kind of get raked using an easy, fully automated stack. Not a big deal, but with shipping, hoodies end up being like 35-40 and T-shirts almost 20. Given the size of EA, I felt like we should probably just buy a heat press or embroidery machine, since we probably want to produce 100s+.

Anyway feel free to reach out and we can chat!

Here is the example site I spun up (again, I'm not actually trying to sell those products; I was just testing if I could do it): https://tp403r-fy.myshopify.com/

Thank you for writing this. To be honest, I'm pretty shocked that the main discussions around the Anthropic IPO have been about "patient philanthropy" concerns and not the massive, earth-shattering conflicts of interest (both for us as non-Anthropic members of the EA community and for Anthropic itself, which will now have a "fiduciary responsibility"). I think this shortform does a pretty good job summarizing my concern. The missing mood is big. I also just have a sense that way too many of us are living in group houses and attending the same parties together, and that AI lab employees are included in this. And if you actually hear those conversations at parties, they are less like "man, I am so scared" and more like "holy shit, that new proto-memory paper is sick". Conflicts of interest, nepotism, etc. are not taken seriously enough by the community, and this isn't a new problem or something I'm confident we'll fix.

Without trying to heavily engage in a timelines debate, I'll just say it's pretty obvious we are in go time. I don't think anyone should be able to confidently say that we are more than a single 10x or breakthrough away from machines being smarter than us. I'm not personally huge on beating the drum for Pause AI; I think there are probably better ways to regulate than that. That being said, I genuinely think it might be time for people to start disclosing their investments. I'm paranoid about everyone's motives (including my own).

You are talking about the movement-scale issues, with the awareness that crashing Anthropic stock could crash EA wealth. That's charitable, but let's be less charitable: way too many people here have YOLO'd significant parts of their net worth on AI stocks, low-delta S&P/AI calls, etc. and are still holding the bag. If many of you are anything like me, you believe in your head that you want the world to go well, but I personally feel happier when my brokerage account goes up 3% than when I hear news that AI timelines are way longer than we thought because of xyz.

Again, I'm kind of just repeating you here, but I think it's important and underdiscussed.

Tangential to the main post, but I just read your shortform comment on LessWrong and really agree. From a variety of different anecdotal experiences, I'm getting increasingly paranoid about trusting anyone here regarding AI opinions. How can I trust someone if 50% of their money is in Nvidia and S&P 5-delta calls? If we pause AI, they stand to lose so much. I don't think this is a small fraction of the community either.

(I have most of my money in S&P stock and ~15% specifically in tech stocks, so I'm still quite exposed to AI progress, though significantly less than a lot of people here, and I still sometimes find myself rooting for an AI bull market because I'm selfish.)

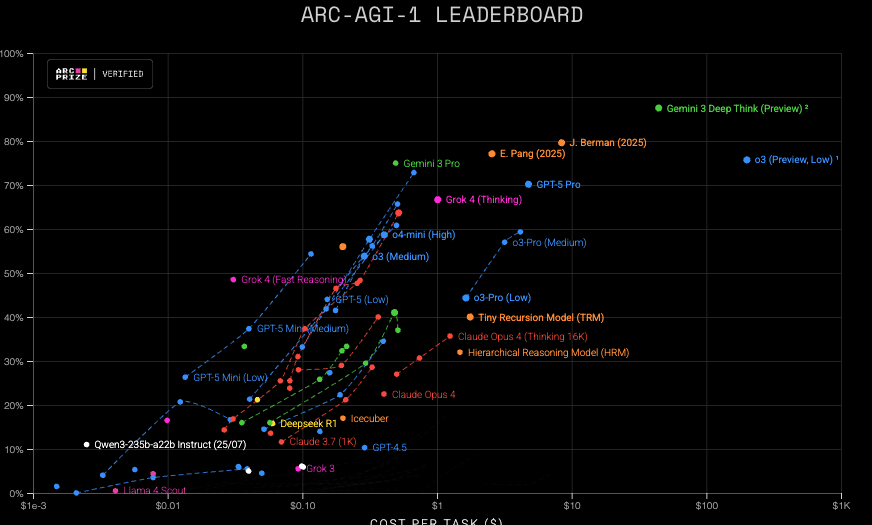

Benchmarking model behavior seems increasingly hard to keep track of.

I think there are a bunch of separate layers being analyzed, and it's increasingly unclear to what degree they are actually separate.

e.g.

level 1 -> pre-trained only

level 2 -> post-trained

level 3 -> with ____ amount of inference (pro, high, low, thinking, etc.)

level 4 -> with agentic scaffolding (Claude Code, SWE-agent, Codex)

level 5 -> context engineering setups inside of your agentic repo (ACE, GEPA, AI scientists)

level 6 -> the built digital environment (arguably could be partially included in level 4; stuff like APIs being crafted to be better for LLMs, workflows being rewritten to accomplish the same goal in a more verifiable way, UIs that are more readable by LLMs).

In some sense you can boil all of this down to cost per run, like ARC does, but you will miss important differences in model behavior at a fixed point on the cost/performance frontier.

https://jeremyberman.substack.com/p/how-i-got-the-highest-score-on-arc-agi-again

If you read Jeremy Berman's Substack, you will see he uses existing LLMs with an evolutionary scaffolding to get his scores (hard to say whether this is level 4 or 5). While I'm decently bitter-lesson-pilled, it seems plausible we will see heavily scaffolded proto-AGIs popping up before raw models reach that level (though it's also plausible that the really useful, generalizable scaffolds just get absorbed into the models soon thereafter). The behavior of Berman's system is going to be different from that of the first raw model to hit that score with no scaffolding, and it will pose different threats at the same level of intelligence.
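To make the "boil it down to cost per run" point concrete, here's a rough sketch in Python. Everything in it is hypothetical: the `EvalSetup` fields are my own shorthand for levels 1-5 (level 6, the environment, isn't modeled), the model names are placeholders, the "evolutionary" label is not an implementation of Berman's system, and the cost/score numbers are made up. It just shows that once two setups land on the same (cost, score) point, that summary can no longer tell a raw model from a heavily scaffolded one.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class EvalSetup:
    """One evaluated system described layer by layer (hypothetical field names)."""
    base_model: str          # levels 1-2: pre-trained / post-trained model
    inference_effort: str    # level 3: e.g. "low", "high"
    scaffold: str            # level 4: agentic harness, or "none"
    context_setup: str       # level 5: context-engineering layer, or "none"
    cost_per_task: float     # illustrative cost per task, not real data
    score: float             # illustrative benchmark score, not real data


def collapse_to_frontier(setups: Iterable[EvalSetup]) -> Set[Tuple[float, float]]:
    """Reduce each setup to its (cost, score) point, discarding all layer details."""
    return {(s.cost_per_task, s.score) for s in setups}


# Two hypothetical systems: a raw model vs. a cheaper model inside a scaffold.
raw = EvalSetup("model-A", "high", "none", "none", 2.0, 0.5)
scaffolded = EvalSetup("model-B", "low", "evolutionary", "none", 2.0, 0.5)

# The setups differ, but the cost/score summary collapses them into one point.
print(raw == scaffolded)                         # False
print(collapse_to_frontier([raw, scaffolded]))   # a single (cost, score) point
```

The frozen dataclass is just so setups compare by value; the interesting part is how much `collapse_to_frontier` throws away.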

How politicized will AI get in the next 1, 2, and 5 years, and what will those trends look like?

As a community, I think we are investing more in policy/advocacy/research, but the value of these things might be heavily a function of how politicized/toxic AI becomes. Not a strong prior, but I'd assume that the OMB and think tanks get to write a really large % of the policy for boring, non-electorally-dominant issues, but have much less hard power when the issue at hand is something like healthcare, crime, or immigration.

Hi Wyatt, there is some work on number 1 (although agreed, it needs a lot more). I keep posting this stack, so maybe I should curate it into a sequence.

https://forum.effectivealtruism.org/s/wmqLbtMMraAv5Gyqn

https://forum.effectivealtruism.org/posts/W4vuHbj7Enzdg5g8y/two-tools-for-rethinking-existential-risk-2

https://forum.effectivealtruism.org/posts/zuQeTaqrjveSiSMYo/a-proposed-hierarchy-of-longtermist-concepts

https://forum.effectivealtruism.org/posts/fi3Abht55xHGQ4Pha/longtermist-especially-x-risk-terminology-has-biasing

https://forum.effectivealtruism.org/posts/wqmY98m3yNs6TiKeL/parfit-singer-aliens

https://forum.effectivealtruism.org/posts/zLi3MbMCTtCv9ttyz/formalizing-extinction-risk-reduction-vs-longtermism

https://static1.squarespace.com/static/58e2a71bf7e0ab3ba886cea3/t/5a8c5ddc24a6947bb63a9bc9/1519148520220/Todd+Miller.evpsych+of+ETI.BioTheory.2017.pdf

https://forum.effectivealtruism.org/posts/mzT2ZQGNce8AywAx3

https://reflectivedisequilibrium.blogspot.com/2012/03/are-pain-and-pleasure-equally-energy.html

Very nice, thank you for writing.

Isn't it plausible that p(annual collapse risk) is also partly a function of the N friction? I think you may cover some of this, but I can't really remember.

e.g. a society with fewer non-renewables will have to be more sustainable to survive/grow -> the resulting population is somehow more value-aligned -> reduced annual collapse risk.

or, on the other side:

nukes still exist and can still be launched -> the next society faces higher N friction while still in its pre-nuclear age -> increased annual collapse risk.
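A quick toy version of what I mean, in Python. The linear form, the `base_risk`/`sensitivity` values, and the idea of scoring friction on a single number are all my own illustrative assumptions, not anything from your post. The only solid piece is that a constant, independent annual risk p gives a survival probability of (1 - p)^T over T years, so even small shifts in p driven by friction compound a lot over long horizons. The sign of `sensitivity` is itself an assumption: the two examples above push in opposite directions.

```python
def annual_collapse_risk(n_friction: float, base_risk: float = 0.001,
                         sensitivity: float = 0.002) -> float:
    """Toy model (assumption): annual risk is linear in N friction, clipped to [0, 1].

    A negative `sensitivity` would mean more friction lowers the risk.
    """
    return min(1.0, max(0.0, base_risk + sensitivity * n_friction))


def survival_probability(p_annual: float, years: int) -> float:
    """P(no collapse over `years`), assuming a constant, independent annual risk."""
    return (1.0 - p_annual) ** years


for friction in (0.0, 0.5, 1.0):
    p = annual_collapse_risk(friction)
    print(f"friction={friction:.1f}  p={p:.4f}  "
          f"P(survive 1000y)={survival_probability(p, 1000):.3f}")
```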

(I have a bad habit of just adding slop onto models, and I don't think this needs to be in the scope of the original post at all; just a curiosity.)

I don't see this strain of argument as particularly action-relevant. I feel like you are getting way too caught up in the abstractions of what "AGI" is and such. This is obviously a big deal, this is obviously going to happen "soon" and/or is already "happening", and it's obviously time to take this very seriously and act like responsible adults.

OK, so you think "AGI" is likely 5+ years away. Are you not worried about Anthropic having a fiduciary responsibility to its shareholders to maximize profits? Reading between the lines, I guess you see very little value in slowing down or regulating AI? While leaving room for the chance that our whole disagreement does revolve around our object-level timeline differences, I think you are probably missing the forest for the trees here in your quest to prove people with shorter timelines wrong.

I'm not a doom maximalist, but I think this technology is already profoundly world-bending and scary today. I am worried about my cousin becoming a short-form-addicted goonbot with an AI best friend right now, whether or not robot bees are about to gouge my eyes out.

I think there is a reasonably long list of sensible regulations around this stuff (both x-risk related and more minor stuff) that would probably result in a large drawdown in these companies' valuations and really the stock market at large. For example (but not limited to): AI companionship, romance, and porn should probably be paused right now while the government performs large-scale A/B testing. That is the same thing we should have done with social media and cellphone use, especially in children, and that our government horribly failed to do because of its inability to utilize RCTs and the absolutely horrifying average age of our president and both houses of Congress.