I’ve seen a few people in the LessWrong community congratulate the community on predicting or preparing for covid-19 earlier than others, but I haven’t actually seen the evidence that the LessWrong community was particularly early on covid or gave particularly wise advice on what to do about it. I looked into this, and as far as I can tell, this self-congratulatory narrative is a complete myth.

Many people were worried about and preparing for covid in early 2020 before everything finally snowballed in the second week of March 2020. I remember it personally.

In January 2020, some stores sold out of face masks in several different cities in North America. (For example, in New York.) The oldest post on LessWrong tagged with "covid-19" is from well after this started happening. (I also searched the forum for posts containing "covid" or "coronavirus" and sorted by oldest. I couldn’t find an older post that was relevant.) That first LessWrong post is written by a self-described "prepper" who strikes a cautious tone and, oddly, advises buying vitamins to boost the immune system. (This seems dubious, possibly pseudoscientific.) To me, it strikes a similarly ambivalent, cautious tone to many mainstream news articles published before it.

If you look at the covid-19 tag on LessWrong, the next post after that first one, the prepper one, is on February 5, 2020. The posts don't start to get really worried about covid until mid-to-late February.

How was the rest of the world reacting at that time? Here's some polling from late January that gives a sense of public sentiment.

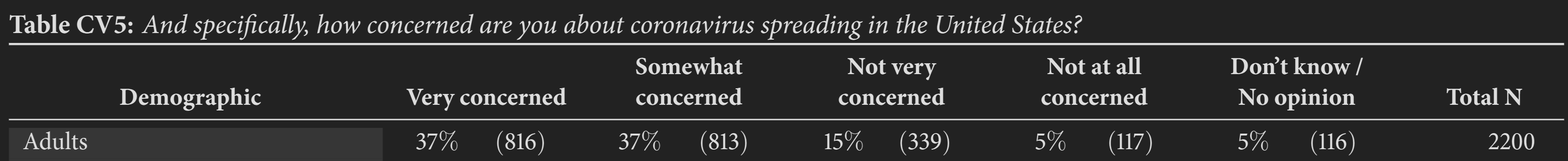

Morning Consult ran a poll from January 24-26, 2020 that found 37% of Americans were very concerned about the novel coronavirus spreading in the U.S.

An Ipsos poll of Canadians from January 27-28 found similar results:

> Half (49%) of Canadians think the coronavirus poses a threat (17% very high/32% high) to the world today, while three in ten (30%) think it poses a threat (9% very high/21% high) to Canada. Fewer still think the coronavirus is a threat to their province (24%) or to themselves and their family (16%).

Here's a New York Times article from February 2, 2020, entitled "Wuhan Coronavirus Looks Increasingly Like a Pandemic, Experts Say", well before any of the worried posts on LessWrong:

> The Wuhan coronavirus spreading from China is now likely to become a pandemic that circles the globe, according to many of the world’s leading infectious disease experts.
>
> The prospect is daunting. A pandemic — an ongoing epidemic on two or more continents — may well have global consequences, despite the extraordinary travel restrictions and quarantines now imposed by China and other countries, including the United States.

The tone of the article is fairly alarmed. It notes that the streets in China are deserted due to the outbreak, compares the novel coronavirus to the 1918-1920 Spanish flu, and gives expert quotes like this one:

> It is “increasingly unlikely that the virus can be contained,” said Dr. Thomas R. Frieden, a former director of the Centers for Disease Control and Prevention who now runs Resolve to Save Lives, a nonprofit devoted to fighting epidemics.

The worried posts on LessWrong don't start until weeks after this article was published. In a February 25, 2020 post asking when CFAR should cancel its in-person workshop, the top answer cites the CDC's guidance at the time about covid-19. It says that CFAR's workshops "should be canceled once U.S. spread is confirmed and mitigation measures such as social distancing and school closures start to be announced." Those measures were announced about two to three weeks later. So, what exactly is being called early here?

CFAR is based in the San Francisco Bay Area, as are Lightcone Infrastructure and MIRI, two other organizations associated with the LessWrong community. On February 25, 2020, the city of San Francisco declared a state of emergency over covid. (Nearby, Santa Clara County, where most of what people consider Silicon Valley is located, declared a local health emergency on February 10.) At this point in time, posts on LessWrong remain overall cautious and ambivalent.

By the time the posts on LessWrong get really, really worried, in the last few days of February and the first week of March, much of the rest of the world was reacting in the same way.

From February 14 to February 25, the S&P 500 dropped about 7.5%. Around this time, financial analysts and economists issued warnings about the global economy.

Between February 21 and February 27, Italy began its first lockdowns of areas where covid outbreaks had occurred.

On February 25, 2020, the CDC warned Americans of the possibility that "disruption to everyday life may be severe". The CDC made this bracing statement:

> It's not so much a question of if this will happen anymore, but more really a question of when it will happen — and how many people in this country will have severe illness.

Another line from the CDC:

> We are asking the American public to work with us to prepare with the expectation that this could be bad.

On February 26, Canada's Health Minister advised Canadians to stockpile food and medication.

The most prominent LessWrong post from late February warning people to prepare for covid came a few days later, on February 28. So, on this comparison, LessWrong was actually slightly behind the curve. (Oddly, that post insinuates that nobody else is telling people to prepare for covid yet, and congratulates itself on being ahead of the curve.)

At the beginning of March, the number of LessWrong posts tagged covid-19 explodes, and the tone gets much more alarmed. The rest of the world was responding similarly at this time. For example, on February 29, 2020, Washington State declared a state of emergency around covid. On March 4, Governor Gavin Newsom did the same in California. The governor of Hawaii declared an emergency the same day, and over the next few days, many more states piled on.

Around the same time, the general public was becoming alarmed about covid. In the last days of February and the first days of March, many people stockpiled food and supplies. On February 29, 2020, PBS ran an article describing an example of this at a Costco in Oregon:

> Worried shoppers thronged a Costco box store near Lake Oswego, emptying shelves of items including toilet paper, paper towels, bottled water, frozen berries and black beans.
>
> “Toilet paper is golden in an apocalypse,” one Costco employee said.
>
> Employees said the store ran out of toilet paper for the first time in its history and that it was the busiest they had ever seen, including during Christmas Eve.

A March 1, 2020 article in the Los Angeles Times reported on stores in California running out of product as shoppers stockpiled. On March 2, an article in Newsweek described the same happening in Seattle:

> Speaking to Newsweek, a resident of Seattle, Jessica Seu, said: "It's like Armageddon here. It's a bit crazy here. All the stores are out of sanitizers and [disinfectant] wipes and alcohol solution. Costco is out of toilet paper and paper towels. Schools are sending emails about possible closures if things get worse."

In Canada, the public was responding the same way. Global News reported on March 3, 2020 that a Costco in Ontario ran out of bottled water, toilet paper, and paper towels, and that the situation was similar at other stores around the country. The spike in worried posts on LessWrong coincides with the wider public's reaction. (If anything, the posts on LessWrong are very slightly behind the news articles about stores being picked clean by shoppers stockpiling.)

On March 5, 2020, the cruise ship Grand Princess made the news because it was stranded off the coast of California due to a covid outbreak on board. I remember this as a seminal moment of awareness about covid. It was a big story. At this point, LessWrong posts are definitely in no way ahead of the curve, since everyone is talking about covid now.

On March 8, 2020, Italy put a quarter of its population under lockdown, then put the whole country on lockdown on March 10. On March 11, the World Health Organization declared covid-19 a global pandemic. (The same day, the NBA suspended the season and Tom Hanks publicly disclosed he had covid.) On March 12, Ohio closed its schools statewide. The U.S. declared a national emergency on March 13. The same day, 15 more U.S. states closed their schools. Also on the same day, Canada's Parliament shut down because of the pandemic. By now, everyone knows it's a crisis.

So, did LessWrong call covid early? I see no evidence of that. The timeline of LessWrong posts about covid follows the same timeline on which the world at large reacted to covid, growing more alarmed as journalists, experts, and governments rang the alarm bells louder. In some comparisons, LessWrong's response was a little bit behind.

The only curated post from this period (and the post with the third-highest karma, one of only four posts with over 100 karma) tells LessWrong users to prepare for covid three days after the CDC told Americans to prepare, and two days after Canada's Health Minister told Canadians to stockpile food and medication. It was also three days after San Francisco declared a state of emergency. When that post was published, many people were already stockpiling supplies, partly because government health officials had told them to. (The LessWrong post was originally published on a blog a day before, and based on a note in the text apparently written the day before that, but that still puts the writing of the post a day after the CDC warning and the San Francisco declaration of a state of emergency.)

Unless there is some evidence that I didn't turn up, it seems pretty clear the self-congratulatory narrative is a myth. The self-congratulation actually started in that post published on February 28, 2020, which, again, is odd given the CDC's warning three days before (on the same day that San Francisco declared a state of emergency), analysts' and economists' warnings about the global economy a bit before that, and the New York Times article warning about a probable pandemic at the beginning of the month. The post is slightly behind the curve, but it's gloating as if it's way ahead.

Looking at the overall LessWrong post history in early 2020, LessWrong seems to have been, if anything, slightly behind the New York Times, the S&P 500, the CDC, the government of San Francisco, and enough members of the general public to clear out some stores of certain products. By the time LessWrong posting reached a frenzy in the first week of March, the world was already responding: U.S. governors were declaring states of emergency, and everyone was talking about and worrying about covid.

If LessWrong had decided to declare a state of emergency on the same day that San Francisco did, or simply prominently posted the CDC’s warning from that same day, it would have been three days (if not a bit more) ahead of where it actually ended up. Why didn’t it just do that? Alternatively, if the S&P 500 dropping 7.5% in around ten days had been taken as a sufficient signal, that also would have put LessWrong three days ahead of where it was.

I think people should be skeptical and even distrustful toward the claims of the LessWrong community, both on topics like pandemics and about its own track record and mythology. Obviously this myth is self-serving, and it was pretty easy for me to disprove in a short amount of time — so anyone who is curious can check and see that it's not true. The people in the LessWrong community who believe the community called covid early probably believe that because it's flattering. If they actually wondered if this is true or not and checked the timelines, it would become pretty clear that didn't actually happen.

Note: I am publishing this under a second EA Forum profile I created for community posts and other minutiae that I don’t want to distract from or clutter up my posts on my main profile. Sometimes a post is in an awkward middle ground between a quick take and a full post, and this is my imperfect solution to that.

See some previous discussion of a quick take almost identical to this post here.

Back in April 2020, I asked Greg Lewis for his take on the EA community's response to COVID on the 80k podcast. I can't remember anything about what we said, but folks might find it interesting. https://80000hours.org/podcast/episodes/greg-lewis-covid-19-global-catastrophic-biological-risks/#the-response-of-the-effective-altruism-community-to-covid-19-021142

Woah, this is brutal.

Context for everybody: Gregory Lewis is a biorisk researcher with a background in medicine and public health. He describes himself as "heavily involved in Effective Altruism". (He's not a stranger here: his EA Forum account was created in 2014 and he has 21 posts and 6000 karma.)

The 80,000 Hours Podcast interview was recorded in mid-April 2020. Lewis starts off with a compliment:

He also pays a compliment to a few people in the EA community who have brainstormed interesting ideas about how to respond to the pandemic and who (as of April 2020) were working on some interesting projects. But he continues (my emphasis added):

Lewis elaborates (my emphasis added again):

More on this consulting textbooks:

What's worse than inefficiency:

An example of a bad take:

On bad epistemic norms:

More on EA criticism of the UK government:

On cloth masks:

More on cloth masks:

Lewis' concrete recommendations for EA:

More on EA setting a bad example:

On epistemic caution:

Lewis twice mentions an EA Forum post he wrote about epistemic modesty, which sounds like relevant reading. It's here.

Note that Dominic Cummings, one of the then most powerful men in the UK, [credits the rationality community](https://x.com/dominic2306/status/1373333437319372804) for convincing him that the UK needed to change its coronavirus policy (which I personally am very grateful for!). So it seems unlikely to have been that obvious.

Although I think Yarrow's claim is that the LW community was not "particularly early on covid [and did not give] particularly wise advice." I don't think the rationality community saying things that were not at the time "obvious" undermines this conclusion as long as those things were also being said in a good number of other places at the same time.

Cummings was reading rationality material, so that had the chance to change his mind. He probably wasn't reading (e.g.) the r/preppers subreddit, so its members could not get this kind of credit. (Another example: Kim Kardashian got Donald Trump to pardon Alice Marie Johnson and probably had some meaningful effect on his first administration's criminal-justice reforms. This is almost certainly a reflection of her having access, not evidence that she is a first-rate criminal justice thinker or that her talking points were better than those of others supporting Johnson's clemency bid.)

It's quite a strange and interesting story, but I don't think it supports the case that LessWrong actually called covid earlier than others. Let's get into the context a little bit.

First off, Dominic Cummings doesn't appear to be a credible person on covid-19, and seems to hold strange, fringe views. For example, in November 2024 he posted a long, conspiratorial tweet which included the following:

Incidentally, Cummings was also at the center of a scandal in the UK over allegations that he violated the covid-19 lockdown and subsequently wasn't honest about it (possibly lied about it). This also makes me a bit suspicious about his reliability.

This situation with Dominic Cummings reminds me a bit of how Donald Trump's staffers in the White House have talked about how it's nearly impossible to get him to read their briefings, but he's obsessed with watching Fox News. Unfortunately, the information a politician pays attention to and acts on is not necessarily the best information, or the source that conveyed that information first.

As mentioned in the post above, there were already mainstream experts like the CDC giving public warnings before the February 27, 2020 blog post that was republished on LessWrong on February 28. Is it possible Dominic Cummings was, for whatever reason, ignoring warnings from experts while, oddly, listening to them from bloggers? Is Cummings' narrative, in general, reliable?

I decided to take a look at the timeline of the UK government's response to covid in March 2020. There's an article from the BBC published on March 14, 2020, headlined, "Coronavirus: Some scientists say UK virus strategy is 'risking lives'". Here's how the BBC article begins:

The open letter says:

The BBC article also mentions another open letter, then signed by 200 behavioural scientists (eventually, signed by 680), challenging the government's rationale for not instituting a lockdown yet. That letter opened for signatures on March 13, 2020 and closed for signatures on March 16, 2020.

First, note that this open letter calling for social distancing measures was published 15 days after the February 28, 2020 post on LessWrong and 16 days after the February 27, 2020 blog post it was republishing. If the UK government changed its thinking on or approach to covid-19 in the last few days of February or the first few days of March based on Dominic Cummings reading the blog posts he mentioned in that tweet, why was this open letter still necessary on March 14? What exactly is Cummings saying the bloggers convinced him to do, and when exactly did he do it?

Another quote from the March 14, 2020 BBC article:

On March 16, 2020, the UK government advised the public to avoid non-essential social contact. On March 23, 2020, the government announced a nationwide lockdown that officially took effect on March 26. Is it possible the UK government's response was more influenced by mainstream experts than by bloggers?

I think the COVID case usefully illustrates a broader issue with how “EA/rationalist prediction success” narratives are often deployed.

That said, this is exactly why I’d like to see similar audits applied to other domains where prediction success is often asserted, but rarely with much nuance. In particular: crypto, prediction markets, LVT, and more recently GPT-3 / scaling-based AI progress. I wasn’t closely following these discussions at the time, so I’m genuinely uncertain about (i) what was actually claimed ex ante, (ii) how specific those claims were, and (iii) how distinctive they were relative to non-EA communities.

This matters to me for two reasons.

First, many of these claims are invoked rhetorically rather than analytically. “EAs predicted X” is often treated as a unitary credential, when in reality predictive success varies a lot by domain, level of abstraction, and comparison class. Without disaggregation, it’s hard to tell whether we’re looking at genuine epistemic advantage, selective memory, or post-hoc narrative construction.

Second, these track-record arguments are sometimes used—explicitly or implicitly—to bolster the case for concern about AI risks. If the evidential support here rests on past forecasting success, then the strength of that support depends on how well those earlier cases actually hold up under scrutiny. If the success was mostly at the level of identifying broad structural risks (e.g. incentives, tail risks, coordination failures), that’s a very different kind of evidence than being right about timelines, concrete outcomes, or specific mechanisms.

I distinctly remember telling my parents to wear a mask in the airport, based on rationalist sources, and having to argue that actually anonymous people on the internet were more reliable sources than the government, who did not recommend this.

Interesting. The claim I heard was that some rationalists anticipated that there would be a lockdown in the US and figured out who they wanted to be locked down with, especially to keep their work going. That might not have been put on LW when it was happening. I was skeptical that the US would lock down.