tl;dr

AI career advice orgs, prominently 80,000 Hours, encourage career moves into AI safety roles, including mid‑career pivots. I analyse the quality of this advice from the private satisfaction, public-good, and counterfactual equilibrium perspectives, and learn the following things.

- Rational Failure: If you value personal, direct impact highly, it can be rational to attempt a pivot that will probably fail (e.g., success chance).

- Opacity: if we needed to work out whether our advice was producing good or not, we would need data both on success rates and candidate quality distributions, which are currently unavailable.

- Misalignment: The optimal success rate for the field (maximizing total impact) differs from the optimal rate for individuals (maximizing personal EV). The advice ecosystem appears calibrated to neither.

- Counterfactual impact: Counterfactually, the value of a pivot is much lower than naïvely; in highly contested roles you need not only to be the best but to be the best by a wide enough margin to justify all the effort of the other people you are displacing.

- Donations: If you donate at the moment and would pause donations while taking a career sabbatical, that is very likely a negative EV move in public goods terms. If the EV of a pivot is uncertain, donating the attempt costs (e.g., the sabbatical expenses you were willing to pay) provides a guaranteed positive counterfactual impact and doesn't require all this fancy modelling.

The analysis here should not be astonishing; we know advice calibration is hard, and we know that the collective and private goals might be misaligned. Grinding through the details nonetheless reveals some less obvious implications; for example not only are the number of jobs and the number of applicants important, but your model of the distribution of talent ends up having a huge effect. The fact that the latter two factors --- number of applicants and talent distribution --- are inscrutable to the people who make the risky choice to do the career pivoting, is why I think that advice about careers is miscalibrated.

The problem bites for mid-career professionals with high switching costs. It is likely less severe for early career professionals who have lower switching costs, or for people doing something other than switching jobs (e.g. if you are starting up a new organisation the model would be different). I propose some mitigations, both personal and institutional. I made an interactive widget to help individuals evaluate their own pivots.

This was a draft for Draft Amnesty Week. I revisited it to edit for style

Epistemic status

A solid back-of-the-envelope analysis of some relatively well-understood but frequently under-regarded concepts.

Here’s a napkin model of the AI-safety career-advice economy—or rather, three models of increasing complexity. They show how advice can sincerely recommend gambles that mostly fail, and why—without better data—we can’t tell whether that failure rate is healthy (leading to impact at low cost) or wasteful (potentially destroying happiness and even impact). In other words, it’s hard to know whether our altruism is “effective.”

In AI Safety in particular, there's an extra credibility risk idiosyncratic to this system. AI Safety is, loosely speaking, about managing the risks of badly aligned mechanisms producing perverse outcomes. As such, it's particularly incumbent on our field to avoid mechanisms that are badly aligned and produce perverse outcomes; otherwise we aren't taking our own risk model seriously.

To keep things simple, we ignore as many complexities as possible.

- We evaluate decisions in terms of cause impact, which we assume we can price in donation-equivalent dollars. This is a public good.

- Individual private goods are the candidate's own gains and losses. We assume the candidate's job satisfaction and remuneration (i.e. personal utility) can be costed in dollars.

Part A---Private career pivot decision

An uncertain career pivot is a gamble, so we model it the same way we model other gambles.

Meet Alice

Alice is a senior software engineer in her mid-30s, making her a mid-career professional. She has been donating roughly 10% of her income to effective charities and now wonders whether to switch lanes entirely to achieve impact via technical AI safety work in one of those AI Safety jobs she's seen advertised She has saved six months of runway funds to explore AI-safety roles --- research engineering, governance, or technical coordination. Each month out of work costs her foregone income and reduced career prospects. Her question is simple: Is this pivot worth the costs?

To build the model, Alice needs to estimate four things:

Upside

-

Annual Surplus (): This is the key number. It's the difference in Alice's total annual utility between the new AI safety role () and her current baseline (). This surplus combines the change in her salary and her impact---indirectly via donations and directly by doing some fancy AI safety job.

- , where is wage, is impact, is donations, and is Alice's weighting of impact versus consumption.

- .

Downside

- Runway (): The maximum time she's willing to try, in years.

- Burn Rate (): This is Alice's net opportunity cost per year while on sabbatical (e.g., foregone pay, depleted savings), measured in k$/year.

Odds

- Application Rate (): The number of distinct job opportunities she can apply for per year.

- Success Probability (): Her average probability of getting an offer from a single application. We assume these are independent and identically distributed (i.i.d.).

Caution

The i.i.d. assumption (each job is independent) is likely optimistic. In reality, applications are correlated: if Alice is a good fit for one role, she's likely a good fit for others (and vice-versa). We formalise this in the next section with a notion of candidate quality distributions that captures the notion that you don't know your "ranking" in the field, but most people are not in at the top of it, by definition.

Timing

- Discount Rate (): A continuous rate per year that captures her time preference. A higher means she values immediate gains more---for example, if she expects short AGI timelines, might be high.

Modeling the Sabbatical: The Decision Threshold

With these inputs, we can calculate the total expected value (EV) of her sabbatical gamble. The full derivation is in Appendix A, but here's the result:

This formula looks complex, but its logic is simple. The entire decision hinges on the sign of the bracketed term: This is a direct comparison between the expected gain rate (the upside , multiplied by the success rate , and adjusted for discounting ) and the burn rate (). The prefactor scales that value according to the length of Alice's runway and her discount rate.

The EV is positive if and only if the gain rate exceeds the burn rate. This means Alice's decision boils down to a simple question: Is her per-application success probability, , high enough to make the gamble worthwhile?

We can find the exact break-even probability, , by setting the gain rate equal to the burn rate. This gives a much simpler formula for Alice's decision threshold:

If Alice believes her actual is greater than this , the pivot has a positive expected value. If , she should not take the sabbatical, at least on these terms.

What This Model Tells Alice

This simple threshold gives us a clear way to think about her decision:

- The bar gets higher: The threshold increases with higher costs (), shorter timelines, or greater impatience (). If her sabbatical is expensive or she's in a hurry, she needs to be more confident of success.

- The bar gets lower: The threshold decreases with more opportunities () or a higher upside (). If the job offers a massive impact gain or she can apply to many roles, she can tolerate a lower chance of success on any single one.

- Runway doesn't change the threshold: Notice that the runway length isn't in the formula. A longer runway gives her more expected value (or loss) if she does take the gamble, but it doesn't change the break-even probability itself.

- The results are fragile to uncertainty: This model is highly sensitive to her estimates. If she overestimates her potential impact (a high ) or underestimates her time preference (a low ), she'll calculate a that is artificially low, making the pivot look much safer than it is.[1]

- The key unknown: Even with a perfectly calculated , Alice still faces the hardest part: estimating her own actual success probability, .

That is, essentially, her chance of getting an offer. It depends not only on the number of jobs available but crucially on the number and quality of the other applicants.

All that said, this is a relatively "optimistic" model. If Alice attaches a high value to getting her hands dirty in AI safety work, she might be willing to accept a remarkably low ; we'll see that in the worked example. Hold that thought, though, because I'll argue that this personal decision rule can be pretty bad at maximizing total impact.

Caution

If you are using these calculations for real, be aware that our heuristics are likely overestimating Alice's chances. Job applications are not IID. The effective number of independent shots is lower than the raw application count, reducing effective --- if your skills don't match the first job, it is also less likely to match the second, because the jobs might be similar to each other.

Worked example

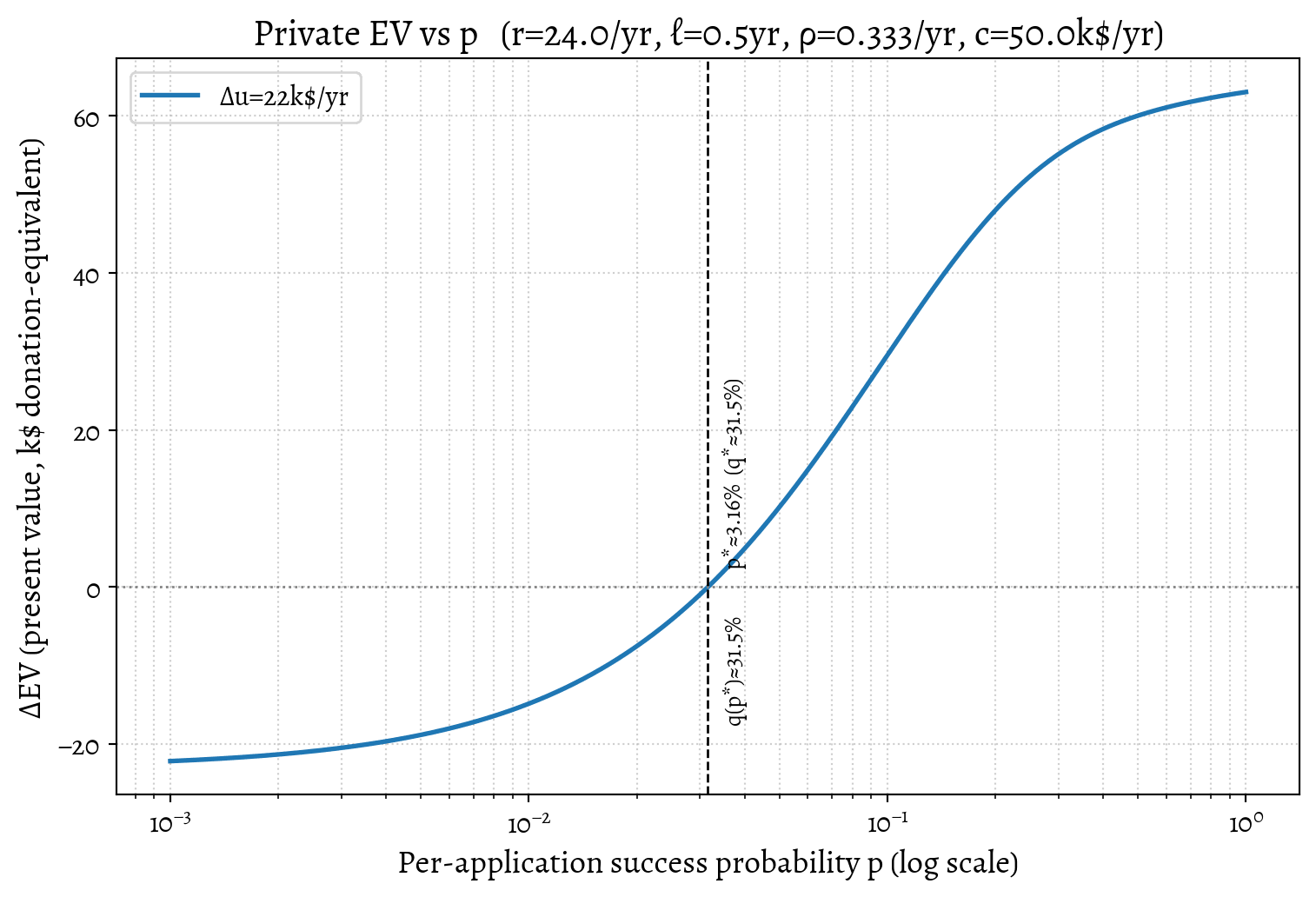

Let's plug in some plausible numbers for Alice. She's a successful software engineer who earns k$/year, donates k$/year after tax, and has no on-the-job impact (i.e. no net harm, no net good). That means Alice earns and donates . A target role offers , , and . Set , runway years, application rate /year, discount , and burn . Then and Over six months, the chance of at least one success at is . Alice's expected actual sabbatical length is , and, conditional on success, it's . Under these assumptions, we expect the sabbatical to break even because the job offers enough upside to compensate for a greater-than-even risk of failure.

We plot a few values for Alice to visualize the trade-offs for different upsides .

To play around with the assumptions, try the interactive Pivot EV Calculator (source at danmackinlay/career_pivot_calculator).

Part B --- Field-level model

tl;dr

In a world with a heavy-tailed distribution of candidate impact, the field benefits from many attempts because a few "hits" dominate. In light-tailed worlds, the same encouragement becomes destructive. We simply don't know which world we're in.

So far, this has been Alice's private perspective. Let's zoom out to the field level and consider: What if everyone followed Alice's decision rule? Is the resulting number of applicants healthy for the field? What's the optimal number of people who should try to pivot?

From personal gambles to field strategy

Our goal is to move beyond Alice's private break-even () and calculate the field's welfare-maximizing applicant pool size (). This is how many "Alices" the field can afford to have rolling the dice before the costs of failures outweigh the value of successes.

To analyze this, we must shift our model in three ways:

-

Switch to a Public Ledger: From a field-level perspective, private wages and consumption are just transfers; they therefore drop out of the analysis. What matters is the net production of public goods (i.e., impact).

-

Distinguish Public vs. Private Costs: The costs are now different.

- Private Cost (Part A): included Alice's full opportunity cost (foregone wages, etc.).

- Public Cost (Part B): We now use , which captures only the foregone public goods during a sabbatical (e.g., , or baseline impact + baseline donations + externalities).

-

Move from Dynamic Search to Static Contest: Instead of one person's dynamic search, we'll use a static "snapshot" model of the entire field for one year. We assume there are open roles and total applicants that year.

Reconciling the individual and field-level Models

In Part A, Alice saw jobs arriving one-by-one (a Poisson process with rate ). In Part B, we are modeling an annual "contest" with applicants competing for jobs.

We can bridge these two views by setting . This treats the entire year's worth of job opportunities as a single "batch" to be filled from the pool of candidates who are "on the market" that year.

This is a standard simplification. It allows us to stop worrying about the timing of individual applications and focus on the quality of the matches, which is determined by the size of the applicant pool (). We can then compare the total Present Value (PV) of the benefits (better hires) against the total PV of the costs (failed sabbaticals).

Here's a paragraph to bridge those two concepts. I'd suggest placing this just before the first plot in Part B, where you start to visualize .

If jobs are available annually (which we've already equated to Alice's application rate ) and total applicants are competing for them, a simple approximation for the per-application success probability is that it's proportional to the ratio of jobs to applicants.

For the rest of this analysis, we'll assume a simple mapping: . This allows us to plot both models on the same chart: as the field becomes more crowded ( increases), the individual chance of success () for any single application shrinks.

The Field-Level Model: Assumptions

Here's the minimally complicated version of our new model:

- There are total applicants and open roles per year.

- Each applicant has a true, fixed potential impact drawn i.i.d. from the talent distribution .

- Employers perfectly observe and hire the best candidates. (This is a strong, optimistic assumption about hiring efficiency).

- Applicants do not know their own , only the distribution .

The intuition is that the field benefits from a larger pool because it increases the chance of finding high-impact candidates. But the field also pays a price for every failed applicant.

Benefits vs. Costs on the Public Ledger

Let's define the two sides of the field's welfare equation.

The Marginal Benefit (MV) of a Larger Pool

The benefit of a larger pool is finding better candidates. We care about the marginal value of adding one more applicant to the pool, which we define as . This is the expected annual increase in impact from widening the pool from to . (Formally, , where is the total impact of the top hires from a pool of ).

The Marginal Cost (MC) of a Larger Pool

The cost is simpler. When , adding one more applicant results (on average) in one more failed pivot. This failed pivot costs the field the foregone public good during the sabbatical. We define the social burn rate per year as . To compare this to the annual benefit , we need the total present value of this foregone impact. We call this (the PV of one failed attempt). (This cost is derived in Appendix B as ).

We do not model employer congestion from reviewing lots of applicants --- the rationale is that it's empirically small because employers stop looking at candidates when they're overwhelmed [@Horton2021Jobseekers].[^2] Note, however, that we also assume employers perfectly observe , which means we're being optimistic about the field's ability to sort candidates. Maybe we could model a noisy search process?

Field-Level trade-offs

We can now find the optimal pool size . The total public welfare peaks when the marginal benefit of one more applicant equals the marginal cost.

As derived in Appendix B, the total welfare is maximized when the present value of the annual benefit stream from the marginal applicant () equals the total present value of their failure cost (). Substituting the expression for and cancelling the discount rate , we get a very clean threshold: This equation is the core of the field-level problem. The optimal pool size is the point where the expected annual marginal benefit () falls to the level of the total foregone public good from a single failed sabbatical attempt.

The Importance of Tail Distributions

How quickly does shrink? Extreme value theory tells us it depends entirely on the tail of the candidate-quality distribution, . The shape of the tail determines how quickly returns from widening the applicant pool diminish.

We consider two families (the specific formulas are in Appendix B):

-

Light tails (e.g., Exponential): In this world, candidates vary, but the best isn't transformatively better than average. Returns diminish quickly: the marginal value shrinks hyperbolically (roughly as ).

-

Heavy tails (e.g., Fréchet): This captures the "unicorn" intuition. Returns diminish much more slowly. If the tail is heavy enough, decays extremely slowly, justifying a very wide search.

Implications for Optimal Pool Size

This difference in diminishing returns hugely affects the optimal pool size . The full solutions for are in Appendix B.

With light tails, there's a finite pool size beyond which ramping up the hype (increasing ) reduces net welfare. Every extra applicant burns in foregone public impact while adding an that shrinks rapidly.

With heavy tails, it's different. As the tail gets heavier, explodes. In very heavy-tailed worlds, very wide funnels can still be net positive. We may decide it's worth, as a society, spending a lot of resources to find the few unicorns.

We set the expected impact per hire per year to (impact dollars/yr) to match Alice's hypothetical target role. This is just for exposition.

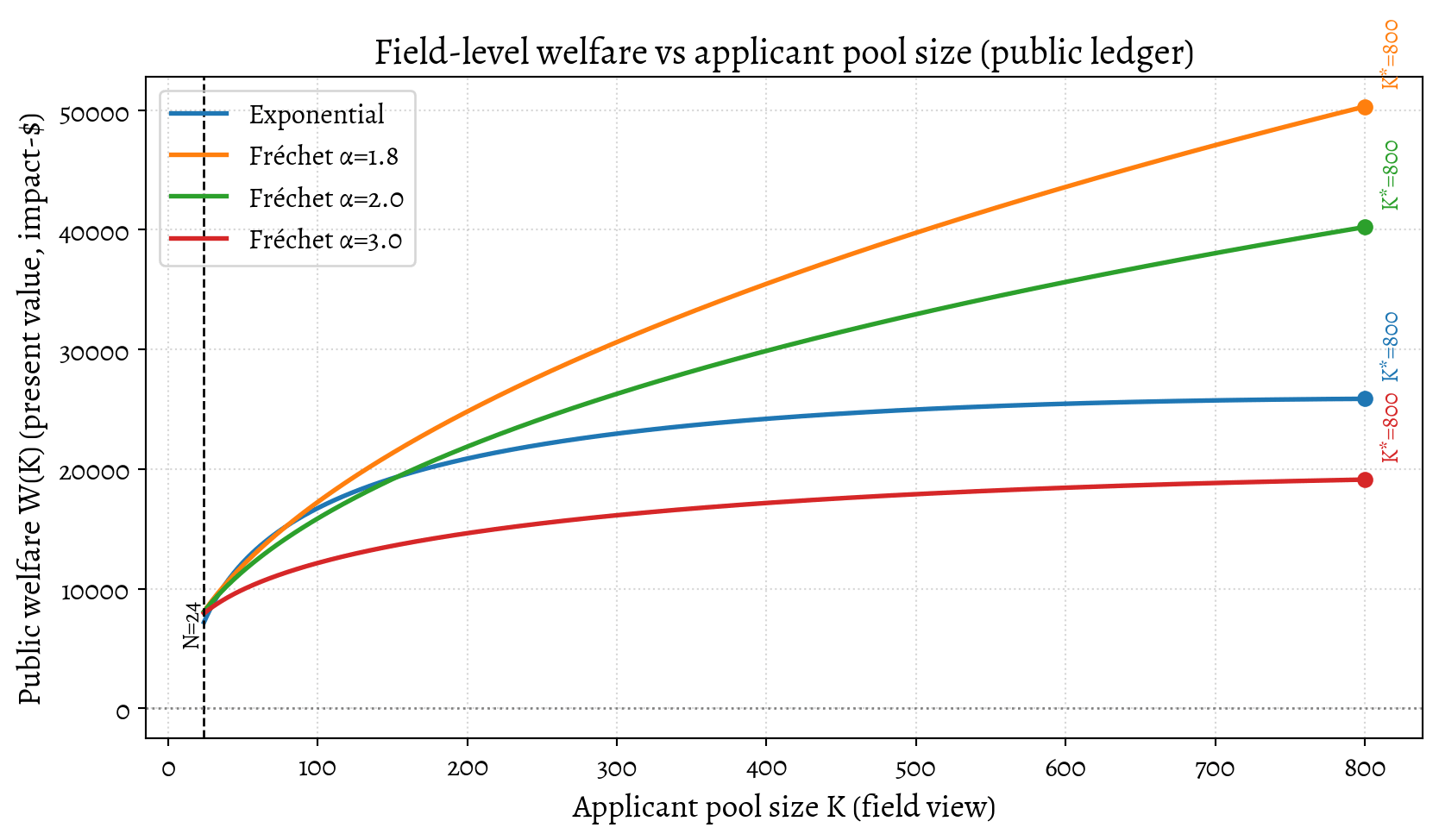

We can, of course, plot this.

- This plot shows total net welfare and marks the maximum for each family, so we can see where total welfare peaks. The dashed line at shows where failures begin: people each impose a public cost of . The markers show , the pool size beyond which widening further would reduce total impact.

- Units: is in impact dollars per year and is converted to PV by multiplying by . The subtraction uses the discounted per-failure cost .

- Fréchet curves use the large- asymptotic (with ). We could get the exact for Fréchet, but the asymptotic is good enough to illustrate the qualitative behaviour.

- We treat all future uncertainties about role duration, turnover, or project lifespan as already captured in the overall discount rate .

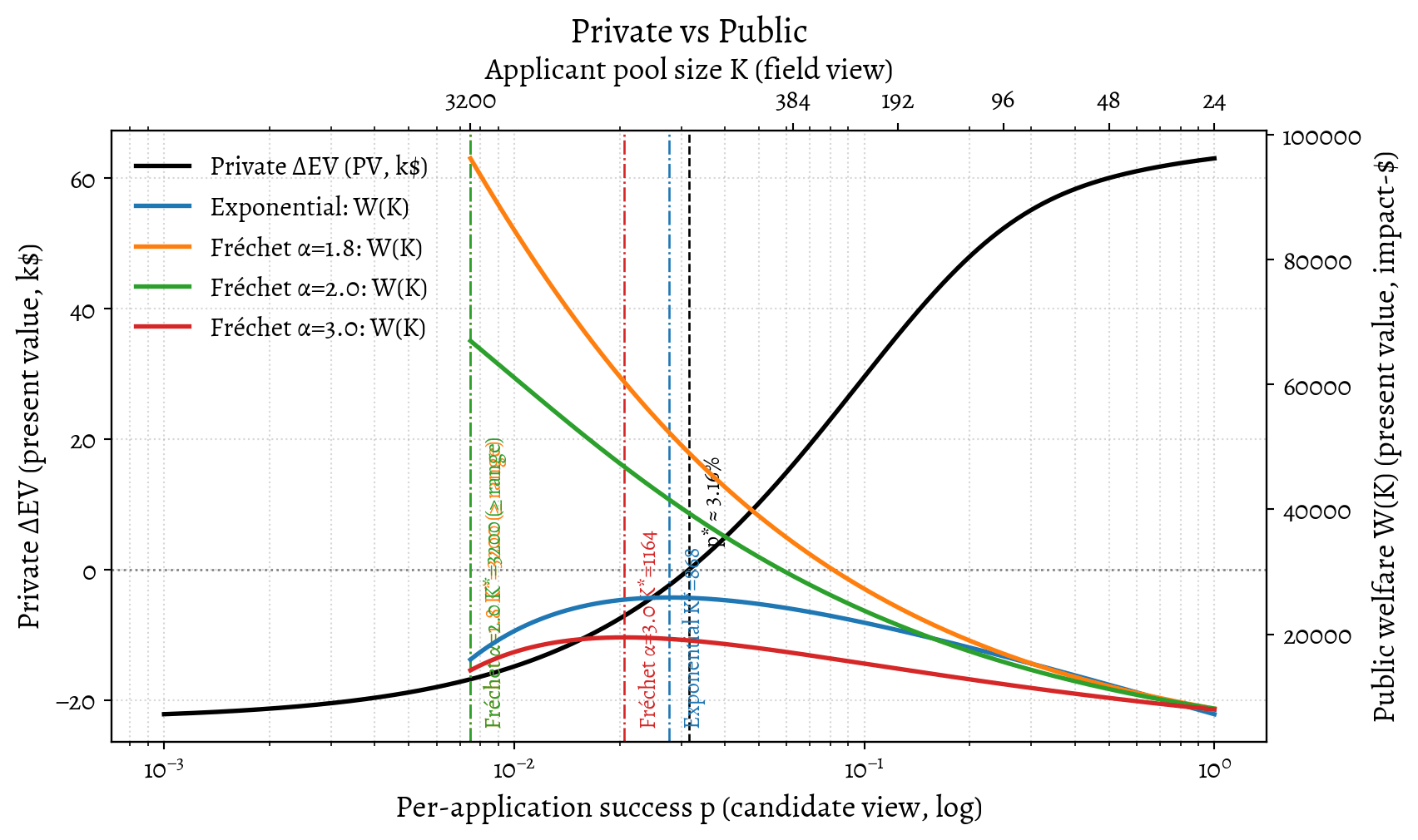

We can combine these perspectives to visualize the tension between private incentives and public welfare.

This visualization combines the private and public views by assuming an illustrative mapping from pool size to success probability: , where bundles screening efficiency; in this figure . The black curve (left axis) shows a candidate's private expected value (EV) as a function of success probability . The coloured curves (right axis) show the field's welfare, . The private break-even point (black dashed line) can fall far to the left of the field-optimal point (coloured vertical lines). This gap marks the region where individuals may be rationally incentivized, at the field level, to enter even though, at the candidate level, the field is already saturated or oversaturated.

<div>

None

If your applicant pool does not have heavy tails, widening the funnel likely increases social loss.

</div>

Part C --- Counterfactual Impact and Equilibrium

Part A modeled Alice's pivot as a private gamble, where the upside was the absolute impact () of the new role. Part B zoomed out, analyzing the field-level optimum () and showing how the marginal value () of adding one more applicant shrinks as the pool () grows.

Now we connect these two views. What if Alice is a sophisticated applicant? She understands the field-level dynamics from Part B and wants to maximize her true counterfactual impact---not just the absolute impact of the role she fills. She must update her private EV calculation from Part A.

This introduces a feedback loop: Alice's personal incentive to apply now depends on the crowd size () and the talent distribution (), and those factors in turn affect the crowd size.

The Counterfactual Impact Model

In Part A, Alice's upside included the absolute impact . But her true impact is counterfactual: it's the value she adds compared to the next-best person who would have been hired if she hadn't applied.

How can she estimate this? She doesn't know her own quality () relative to the pool. If we assume she is, from the field's perspective, just one more "draw" from the talent distribution (formally: applicants are exchangeable), then her expected counterfactual impact from the decision to apply is exactly the marginal value of adding one more applicant: (from Part B).

This is her ex-ante expected impact before the gamble is resolved. But her EV calculation (from Part A) needs the upside conditional on success.

Let be this value: the expected counterfactual impact given she succeeds and gets the job. Let be her overall probability of success in a pool of size . (In the static model of Part B, if she joins a pool of others, there are applicants for slots, so ). If her attempt fails (with probability ), her counterfactual impact is zero.

Therefore, the ex-ante expected impact is simply the probability of success multiplied by the value of that success:

This gives us the key value Alice needs. Alice's expected counterfactual impact, conditional on success, is:

Alice can now recalibrate her private decision from Part A. She defines a counterfactual private surplus, , by replacing the naive, absolute impact with her sophisticated, counterfactual estimate .

This changes the dynamics entirely. In Part A, the value of the upside () was fixed. Now, the value of the upside () itself depends on the pool size .

As grows, both (the marginal value) and (the success chance) decrease. How behaves depends on which of those decreases faster (the marginal value or the success chance)---a property determined by the tail of the impact distribution .

The Dynamics of Counterfactual Impact

The behaviour of leads to radically different incentives depending on the tail shape of the talent pool.

Case 1: Light Tails

In a light-tailed world (e.g., an Exponential distribution), talent is relatively clustered. The math shows (see Appendix: see Appendix) that and shrink at roughly the same rate, causing their ratio to be constant.

(where is the population's average impact).

Intuition: As the pool grows, the quality of the th-best hire---the person we displace---rises almost as fast as the quality of the average successful hire (us). The gap between us and the person we displace remains small and roughly constant. Our counterfactual impact is just the average impact, .

Implication: If the average impact is modest, may not be enough to offset a large pay cut (like Alice's). In this world, we'd expect pivots with high private costs to be EV-negative for the average applicant, regardless of how large the pool gets.

Case 2: Heavy Tails

In a heavy-tailed world (e.g., Fréchet), "unicorns" with transformative impact exist. Here, shrinks much more slowly than . As shown in the appendix, for a Fréchet distribution with shape , the result is:

The expected counterfactual impact conditional on success actually increases as the field gets more crowded.

Intuition: Success in a very large, competitive pool () is a powerful signal. It suggests we aren't just "good," but potentially a "unicorn." We aren't just displacing the th-best person; we're potentially displacing someone much further down the tail.

Implication: In this world, the rate of decay of the field value with funnel size can be slow. The potential upside can grow large enough to easily offset significant private costs (like pay cuts and foregone donations). As a corollary, if we believe in unicorns, it can still make sense to risk large private costs with a low chance of success in order to discover whether we are, in fact, a unicorn.

Alice Revisited

Alice revisited. With light‑tailed assumptions, equals the population mean and is too small to offset Alice's pay cut and lost donations---Alice's counterfactual surplus is negative regardless of . Under heavy‑tailed assumptions, rises with ; across a broad range of conditions, the pivot can become attractive despite large pay cuts (i.e. if Alice might truly be a unicorn). The sign and size of this effect hinge on the tail parameter and scale, which are currently unmeasured.

Visualizing Private Incentives vs. Public Welfare

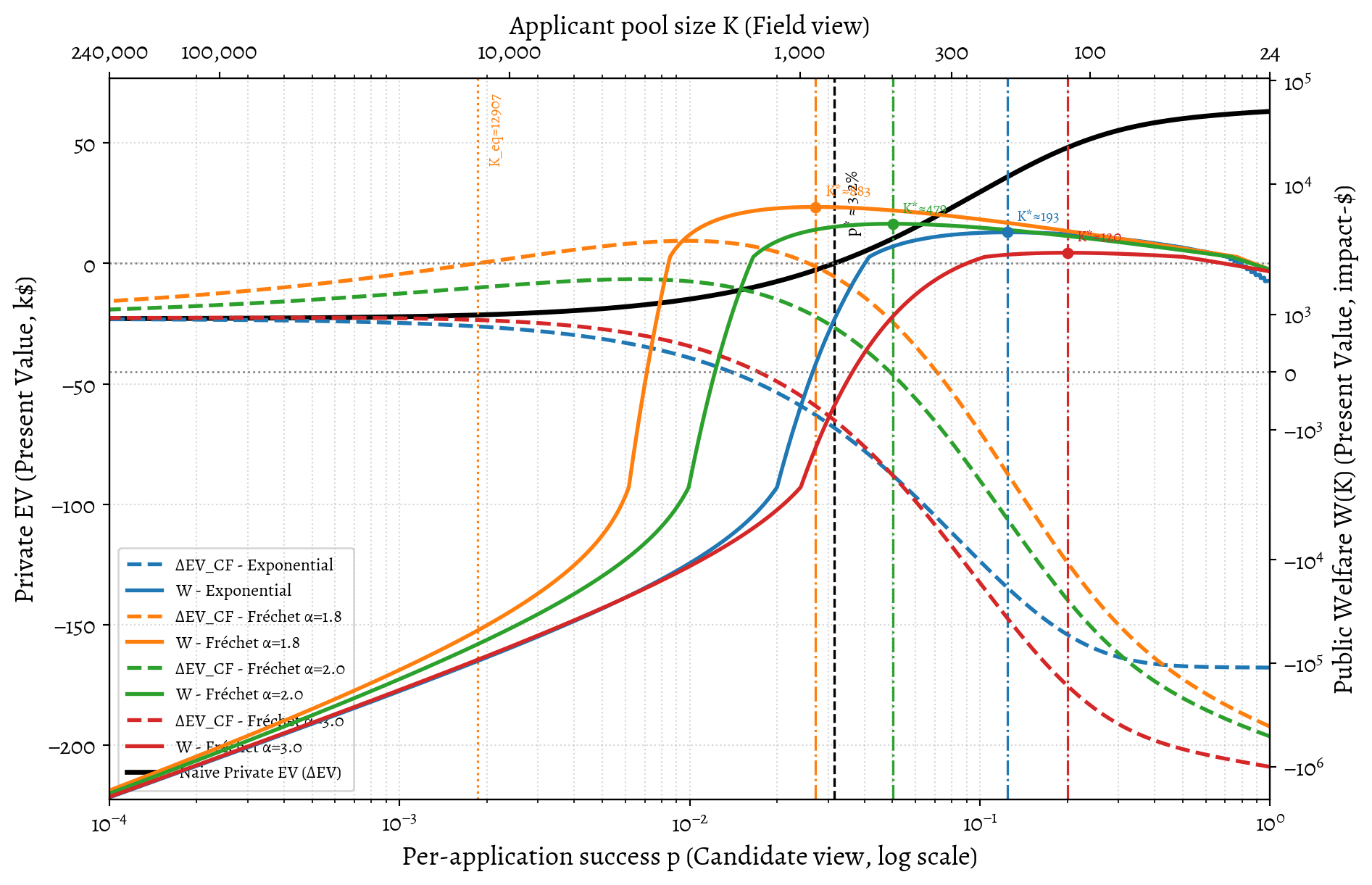

We can now visualize the dynamics of private, public, and counterfactual private valuations by assuming an illustrative mapping between pool size and success probability: . This allows us to see how the incentives change as the field gets more crowded (moving left on the x-axis).

Visualizing the Misalignment

This visualization brings all three models together, using Alice's parameters to illustrate the tensions between private incentives and public good. The plot is dense, but it reveals three key dynamics:

-

The Information Gap (A vs. C): The "Naive EV" (A, black solid line) is far more optimistic than the "Counterfactual EV" (C, dashed colored lines). An applicant using the simple model from Part A---ignoring her displacement effect---will drastically overestimate the personal EV of pivoting.

-

The Cost Barrier (C): In light-tailed worlds (Exponential, purple; Fréchet , red), the sophisticated applicant's EV is always negative. Alice's financial losses (her burn rate plus in foregone donations) dominate any plausible counterfactual impact. Only in heavy-tailed "unicorn" worlds (Fréchet , green; , orange) does the pivot become EV-positive.

-

The Structural Misalignment (B vs. C): This is the core problem. In the heavy-tailed worlds where pivoting is privately rational (green, orange), the socially optimal pool size (, the peak of the solid welfare lines) is far smaller than the private equilibrium (, where the dashed EV lines cross zero). The system incentivizes massive Over-Entry.

Why Does This Happen? The Misalignment Mechanism

The plot shows that a misalignment exists; our model explains why it's structural. It boils down to a conflict between the private cost of trying and the social cost of failing.

We can identify the two different "stop" signals:

- The Social Optimum (): The field's total welfare (Part B)

peaks when the marginal impact of one more applicant

() drops to equal the social cost of the applicant's

(likely) failed attempt. For Alice, this cost is the public good she

stops producing during her sabbatical---primarily her donations.

- Social Cost of Failure (): /year.

- The Private Equilibrium (): A sophisticated Alice

(Part C) stops applying when her private EV becomes zero. This

happens when the marginal impact she can expect to have

(, which she shares with other applicants) drops to

equal her effective private cost hurdle per application. As

derived in Appendix C, this hurdle is .

- Private Cost Hurdle (): (or $690).

This is the misalignment.

The field implicitly "wants" applicants to stop when the marginal impact benefit drops below $18,000. But because the job search is so efficient (high ), Alice's private cost for one more "shot at the prize" is only $690.

She is rationally incentivized to keep trying long after her entry is creating a net social loss. This is what drives the massive gap in our heavy-tailed model: the social optimum might be around 11,600, but the private equilibrium balloons to over 178,000.

This leads to a clear, if sobering, takeaway for a mid-career professional like Alice. Given her high opportunity cost (including foregone donations), donating is the robustly higher-EV option unless she has strong, specific evidence that both (a) the talent pool is extremely heavy-tailed and (b) she is likely to be one of the "unicorns" in that tail.

Implications and Solutions

Our takeaway is that the AI safety career funnel, and likely other high-impact career funnels, is miscalibrated; not in the sense that we are definitely producing net harm right this minute, but in the sense that the feedback mechanisms do not exist to make sure that we do not produce net harm. It's hard to know how bad this is, but Alice's social cost versus the private hurdle is worrisome if it is typical.

That is to say, all this work on alignment is misaligned. Organizations influencing the funnel size don't internalize the costs borne by unsuccessful applicants. This incentivizes maximizing application volume (a visible proxy) rather than welfare-maximizing matches---a classic setup for Goodhart's Law.

That said, it's not even clear what we should be aligned to; the equilibria for maximizing personal life satisfaction for applicants, or maximizing total field impact, may differ.

If we want to make a credible claim to be impact-driven, it is the latter, the net public good, that needs to be prioritized, because "please donate so that a bunch of monied professionals can self-actualize" is not a great pitch.

For Individuals: Knowing the Game

For mid-career individuals, the decision is high-stakes. (For early-career individuals, costs are lower, making the gamble more favourable, but the need to estimate remains.)

-

Calculate your threshold (): Use the model in Part A (and the linked calculator). Without strong evidence that is true, a pivot involving significant unpaid time is likely EV-negative.

-

Seek cheap signals about whether you are in fact a unicorn: Look for personalized evidence of fit---such as applying to a few roles before leaving your current job---before committing significant resources.

-

Use grants as signals: Organizations like Open Philanthropy offer career transition grants. These serve as information gates. If received, a grant lowers the private cost (). If denied, it is a valuable calibration signal. If a major funder declines to underwrite the transition, candidates should update downwards. (If you don't get that Open Phil transition grant, don't quit your current job.)

-

Change the game by doing something other than applying for a job:

- Achieving impact by getting AI Safety on the agenda at your current job (if it's tech-related) is often overlooked. You can have a huge impact by influencing your current employer to take AI safety seriously, without needing to pivot careers.

- Founding or joining a new organization can bring multiple roles into existence, reducing the need to compete for existing roles.

For Organizations: Transparency and Feedback

Employers and advice organizations control the information flow. Unless they provide evidence-based estimates of success probabilities, their generic encouragement should be treated with scepticism.

- Publish stage-wise acceptance rates (Base Rates). Employers must publish historical data (applicants, interviews, offers) by track and seniority. This is the single most impactful intervention for anchoring .

- Provide informative feedback and rank. Employers should provide standardized feedback or an indication of relative rank (e.g., "top quartile"). This feedback is costly, but this cost must be weighed against the significant systemic waste currently externalized onto applicants and the long-term credibility of the field.

- Track advice calibration. Advice organizations should track and publish their forecast calibration (e.g., Brier scores) regarding candidate success. If an advice organization doesn't track outcomes, its advice cannot be calibrated except by coincidence.

For the Field: Systemic Calibration

To optimize the funnel size, the field needs to measure costs and impact tails.

- Estimate applicant costs (). Advice organizations or funders should survey applicants (successful and unsuccessful) to estimate typical pivot costs.

- Track realized impact proxies. Employers should analyze historical cohorts to determine if widening the funnel is still yielding significantly better hires, or if returns are rapidly diminishing.

- Experiment with mechanism design. In capacity-constrained rounds, implementing soft caps---pausing applications after a certain number---can reduce applicant-side waste without significantly harming match quality [@Horton2024Reducing].

Where next?

I'd like feedback from people deeper in the AI safety career ecosystem about what I've gotten wrong. Is the model here sophisticated enough to capture the main dynamics? I'd love to chat with people from 80,000 Hours, MATS, FHI, CHAI, Redwood Research, Anthropic, etc., about this. What is your model about the candidate impact distribution, the tail behaviour, and the costs? What have I got wrong? What have I missed? I'm open to the possibility that this is well understood and being actively managed behind the scenes, but I haven't seen it laid out this way anywhere.

Further reading

Resources that complement the mechanism-design view of the AI safety career ecosystem:

- Christopher Clay, AI Safety's Talent Pipeline is Over-optimised for Researchers

- AI Safety Field Growth Analysis 2025

- Why experienced professionals fail to land high-impact roles Context deficits and transition traps that explain why even strong senior hires often bounce out of the AI safety funnel.

- Levelling Up in AI Safety Research Engineering --- EA Forum. A practical upskilling roadmap; complements the "lower , raise , raise " levers by reducing risk before a pivot.

- SPAR AI --- Safety Policy and Alignment Research program. An example of a program that provides structured training and, implicitly, some "negative previews" of the grind of AI safety work.

- MATS retrospectives --- LessWrong. Transparency on acceptance rates, alumni experiences, and obstacles faced in this training program.

- Why not just send people to Bluedot on FieldBuilding Substack. A critique of naive funnel-building and the hidden costs of over-sending candidates to "default" programs.

- How Stuart Russell's IASEAI conference failed to live up to its potential (FBB #8) --- EA Forum. A cautionary tale about how even well-intentioned field-building efforts can misfire without mechanism design.

- 80,000 Hours career change guides. Practical content on managing costs, transition grants, and opportunity cost---useful for calibrating in the pivot-EV model.

- Forecasting in personal decisions --- 80k. Advice on making and updating stage-wise probability forecasts; relevant to candidate calibration.

- AI safety technical research - Career review

- Updates to our research about AI risk and careers - 80,000 Hours

- The case for taking your technical expertise to the field of AI policy - 80,000 Hours

- Center for the Alignment of AI Alignment Centers. A painfully relatable satire that deserves citing here.

- AMA: Ask Career Advisors Anything --- EA Forum

Appendices

See the original post.

To be consistent we need to take this to be a local linear approximation at your current wage and impact level; so we are implicitly looking at marginal utility. ↩︎

I wonder why this hasn't attracted more upvotes - seems like a very interesting and high-effort post!

Spitballing - I guess there's such a lot of math here that many people (including me) won't be able to fully engage with the key claims of the post, which limits the surface area of people who are likely to find it interesting.

I note that when I play with the app, the headline numbers don't change for me when I change the parameters of the model. May be a bug?

Ah that's why it's for draft amnesty week ;-) Somewhere inside this dense post there is a simpler one waiting to get out, but I figured this was worth posting. Right now it is in the form of ”my own calculations for myself” and it’s not that comprehensible nor the model of good transdisiplinary communication to which I aspire. I'm trying to collaborate with a colleague of mine to write that shorter version. (And to improve the app. Thanks for the bug report @Henry Stanley 🔸 !)

I guess I should flag that I'm up for collaborations and this post can be edited on github including the code to generate the diagrams, so people should feel free to dive in and improve this

One thing that occurs to me (as someone considering a career pivot) is the case of who someone isn't committed to a specific cause area. Here you talk about someone who is essentially choosing between EtG for AI safety or doing AI safety work directly.

But in my case, I'm considering a pivot to AI safety from EtG - but currently I exclusively support animal welfare causes when I donate. Perhaps this is just irrational on my part. My thinking is that I'm unlikely, given my skillset, to be any good at doing direct work in the animal welfare space, but consider it the most important issue of our time. I also think AI safety is important and timely but I might actually have the potential to work on it directly, hence considering the switch.

So in some cases there's a tradeoff of donations foregone in one area vs direct work done in another, which I guess is trickier to model.

Yes, I sidestepped the details of relative valuation entirely here by collapsing the calculation of “impact” into “donation-equivalent dollars.” That move smuggles in multiple subjective factors — specifically, it incorporates a complex impact model and a private valuation of impacts. We’ll all have different “expected impacts,” insofar as anyone thinks in those terms, because we each have different models of what will happen in the counterfactual paths, not to mention differing valuations of those outcomes.

One major thing I took away from researching this is that I don’t think enough about substitutability when planning my career (“who else would do this?”), and I suppose part of that involves modelling comparative advantage. This holds even relative to my private risk/reward model. But thinking in these terms isn’t natural: my estimated impact in a cause area depends on how much difference I can make relative to others who might do it — which itself requires modelling the availability and willingness of others to do each thing.

Another broader philosophical question worth unpacking is whether these impact areas are actually fungible. I lean toward the view that expected value reasoning makes sense at the margins (ultimately, I have a constrained budget of labour and capital, and I must make a concrete decision about how to spend it — so if Bayes didn’t exist, I’d be forced to invent him). But I don’t think it is a given that we can take these values globally seriously, even within an individual. Perhaps animal welfare and AI safety involve fundamentally different moral systems and valuations?

Still, substitutability matters at the margins. If you move into AI safety instead of animal welfare, ideally that would enable someone else — with a better match to animal welfare concerns — to move into AI safety despite their own preferences. That isn’t EtG per se, but it could still represent a globally welfare-improving trade in the “impact labour market.”

If we take that metaphor seriously, though, I doubt the market is very efficient. Do we make these substitution trades as much as we should? The labour market is already weird; the substitutability of enthusiasm and passion is inscrutable; and the transactions are costly. Would it be interesting or useful to make it more efficient somehow? Would we benefit from better mechanisms to coordinate on doing good — something beyond the coarse, low-bandwidth signal of job boards? What might that look like?

JD from Christians for Impact has recently been posting about the downside risks of unsuccessful pivots, which reminded me of this post. Thank you for taking the effort to write this up; I've shared it with advisors in my network.

Thanks pete! is JD's post available anywhere for us to read

Here's a LI post series:

-https://www.linkedin.com/posts/christians-for-impact_top-questions-to-ask-before-making-a-career-activity-7378817376802287616-N9RW?utm_source=share&utm_medium=member_desktop&rcm=ACoAABBfKAYBL-dwrMYafLIlwWQe6mqrTT0f8FU

-https://www.linkedin.com/posts/christians-for-impact_4-more-questions-to-ask-yourself-before-making-activity-7379172018287714304-6fcp?utm_source=share&utm_medium=member_desktop&rcm=ACoAABBfKAYBL-dwrMYafLIlwWQe6mqrTT0f8FU