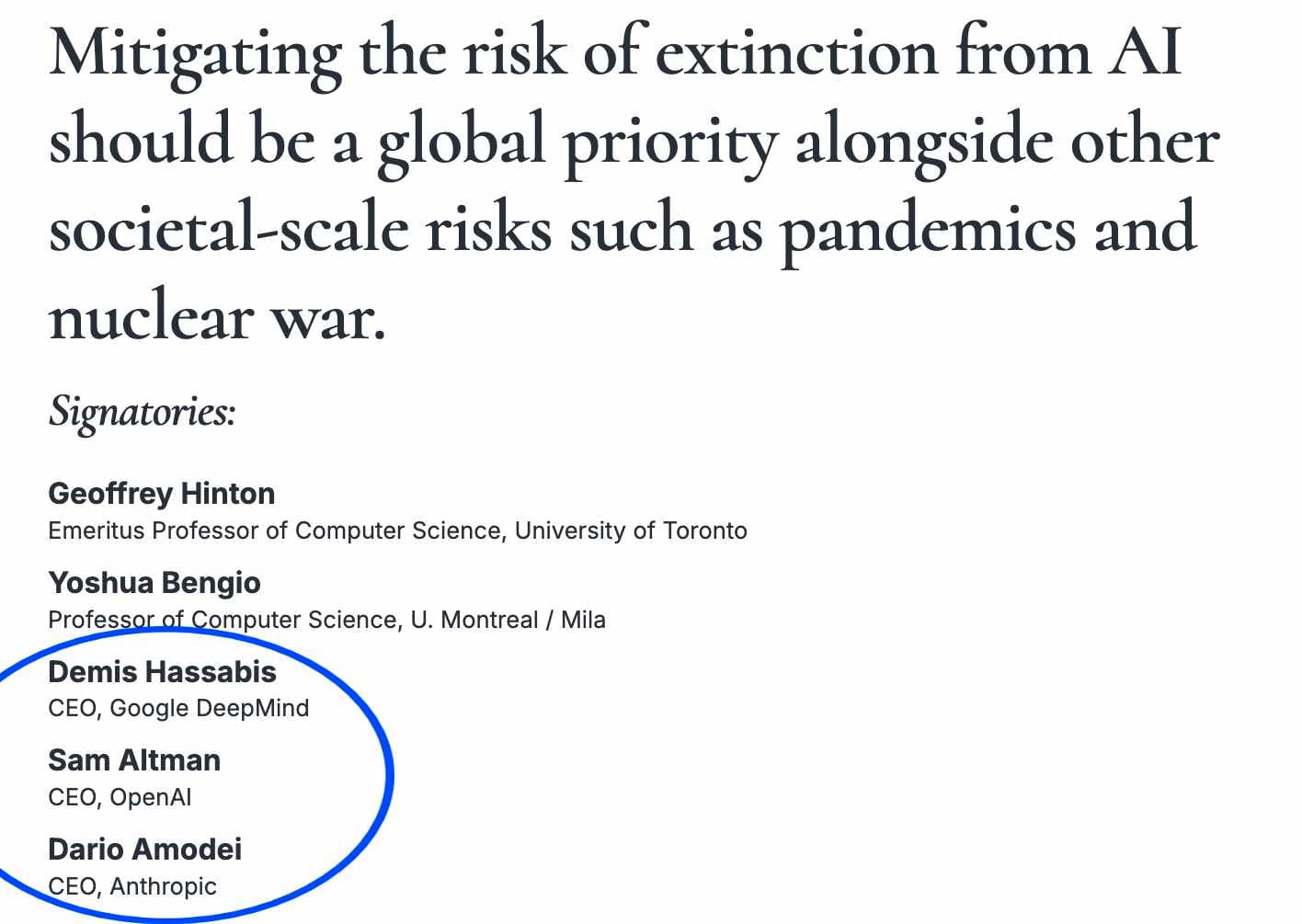

Three out of three CEOs of top AI companies agree: "Mitigating the risk of extinction from AI should be a global priority."

How do they plan to do this?

Anthropic has a Responsible Scaling Policy, Google DeepMind has a Frontier Safety Framework, and OpenAI has a Preparedness Framework, all of which were updated in 2025.

Overview of the policies

All three policies have similar “bones”.[1] They:

- Take the same high-level approach: the company promises to test its AIs for dangerous capabilities during development; if they find that an AI has dangerous capabilities, they'll put safeguards in place to get the risk down to "acceptable levels" before they deploy it.

- Land on basically the same three AI capability areas to track: biosecurity threats, cybersecurity threats, and autonomous AI development.

- Focus on misuse more than misalignment.

TL;DR summary table for the rest of the article:

| | Anthropic | Google DeepMind | OpenAI |
|---|---|---|---|
| Safety policy document | Responsible Scaling Policy | Frontier Safety Framework | Preparedness Framework |
| Monitors for: | Capability Thresholds | Critical Capability Levels (CCLs) | High/Critical risk capabilities in Tracked Categories |
| …in these key areas: | CBRN[2] misuse/weapons | CBRN misuse/weapons | Biological/chemical misuse |
| | Autonomous AI R&D | Autonomous AI R&D | Autonomous AI R&D |
| | Cyber operations ("may" require stronger than ASL-2) | Cybersecurity | Cybersecurity |
| …and also these lower-priority areas: | Persuasion[3] | Deceptive alignment (focused on detecting instrumental reasoning) | Sandbagging, autonomous replication, long-range autonomy |
| Monitoring consists of… | Preliminary assessment that flags models that either 1) are 4x more "performant on automated tests[4]" or 2) have accumulated >6 months[5] of fine-tuning/other "capability elicitation methods".<br>Comprehensive assessment that includes threat model mapping, empirical evaluations, elicitation, and forecasting | Early warning evaluations with predefined alert thresholds<br>External red-teaming | Scalable evaluations (automated proxy tests)<br>Deep dives (e.g., red-teaming, expert consultation) |
| Response to identified risk: | Models crossing thresholds must meet ASL-3 or ASL-4 safeguards<br>Anthropic can delay deployment or limit further training<br>Write a Capability Report and get signoff from CEO, Responsible Scaling Officer, and Board | Response Plan formulated if model hits an alert threshold<br>The plan involves applying the predetermined mitigation measures<br>If mitigation measures seem insufficient, the plan may involve pausing deployment or development<br>General deployment only allowed if a safety case is approved by a governance body | High-risk models can only be deployed with sufficient safeguards<br>Critical-risk models trigger a pause in development |
| Safeguards against misuse | Threat modeling, defense in depth, red-teaming, rapid remediation, monitoring, share only with trusted users | Threat modeling, monitoring/misuse detection | Robustness, usage monitoring, trust-based access |
| Safeguards against misalignment | "Develop an affirmative case that (1) identifies the most immediate and relevant risks from models pursuing misaligned goals and (2) explains how we have mitigated these risks to acceptable levels." | Safety fine-tuning, chain-of-thought monitoring, "future work" | Value alignment<br>Instruction alignment<br>Robust system oversight<br>Architectural containment |
| Safeguards on security | Access controls<br>Lifecycle security<br>Monitoring | RAND-based security controls, exfiltration prevention | Layered security architecture<br>Zero trust principles<br>Access management |
| Governance structures | Responsible Scaling Officer<br>External expert feedback required<br>Escalation to Board and Long-Term Benefit Trust for high-risk cases | "When alert thresholds are reached, the response plan will be reviewed and approved by appropriate corporate governance bodies such as the Google DeepMind AGI Safety Council, Google DeepMind Responsibility and Safety Council, and/or Google Trust & Compliance Council." | |

Let’s unpack that table and look at the individual companies’ plans.

Anthropic

Anthropic’s Responsible Scaling Policy (updated May 2025) is “[their] public commitment not to train or deploy models capable of causing catastrophic harm unless [they] have implemented safety and security measures that will keep risks below acceptable levels.”

What capabilities are they monitoring for?

For Anthropic, a Capability Threshold is a capability level an AI could surpass that makes it dangerous enough to require stronger safeguards. The strength of the required safeguards is expressed in terms of AI Safety Levels (ASLs). An earlier blog post summarized the ASLs in terms of the model capabilities that would require each ASL:

- ASL-1 is for: “Systems which pose no meaningful catastrophic risk (for example, a 2018 LLM or an AI system that only plays chess).”

- ASL-2 is for: “Systems that show early signs of dangerous capabilities – for example, the ability to give instructions on how to build bioweapons – but where the information is not yet useful due to insufficient reliability or not providing information that e.g. a search engine couldn’t.”

- ASL-3 is for: “Systems that substantially increase the risk of catastrophic misuse compared to non-AI baselines (e.g. search engines or textbooks) OR that show low-level autonomous capabilities.”

- ASL-4 is not fully defined, though capability thresholds for AI R&D and CBRN risks have been defined. Thresholds for these risks involve the abilities to dramatically accelerate the rate of effective scaling, and to uplift CBRN development capabilities.

- ASL-5 is not yet defined.

As of September 2025, Anthropic's most powerful model (Claude Opus 4.1) is classed as requiring ASL-3 safeguards.

Anthropic specifies Capability Thresholds in two areas and is still thinking about a third:

- Autonomous AI R&D (AI Self-improvement)

  - AI R&D-4: The model can fully automate the work of an entry-level researcher at Anthropic.

  - AI R&D-5: The model could cause a dramatic acceleration in AI development (e.g., recursive self-improvement).

  AI R&D-4 requires ASL-3 safeguards; AI R&D-5 requires ASL-4. Moreover, either threshold would require Anthropic to write an “affirmative case” explaining why the model doesn’t pose unacceptable misalignment risk.

- Chemical, Biological, Radiological, and Nuclear (CBRN):

  - CBRN-3: The model can significantly help people with basic STEM backgrounds create and deploy CBRN weapons.

  - CBRN-4: The model can uplift the capabilities of state-level actors, e.g., enabling novel weapons design or substantially accelerating CBRN programs.

  CBRN-3 requires ASL-3 safeguards. CBRN-4 would require ASL-4 safeguards (which aren't yet defined; Anthropic has stated they will provide more information in a future update).

- Cyber Operations (provisional). Anthropic is still deciding how to treat this one. The current policy says they’ll engage with cyber experts and may implement tiered access controls or phase deployments based on capability evaluations.

Anthropic also lists capabilities that may require future thresholds yet-to-be-defined, such as persuasive AI, autonomous replication, and deception.

If it can’t be proved that a model is sufficiently far below a threshold, Anthropic treats it as if it’s above the threshold. (This in fact happened with Claude Opus 4, the first model Anthropic released with ASL-3 safety measures.)

How do they monitor these capabilities?

Anthropic routinely does Preliminary Assessments to check whether a model is “notably more capable” than previous ones, meaning either:

- The model performs 4× better (in terms of effective compute scaling) on risk-relevant benchmarks, or

- At least six months’ worth of fine-tuning or other elicitation improvements have accumulated.

If either of these is true, or if the Responsible Scaling Officer thinks it’s warranted, a Comprehensive Assessment is triggered. This includes:

- Threat model mapping: Formal analysis of who could use the model, how, and to what effect.

- Empirical evaluations: Concrete tests showing whether the model can do risky things.

- Elicitation attempts: Tests to see whether capable users (e.g., red teamers) can get the model to perform harmful tasks, even with prompting, scaffolding, or basic fine-tuning.

- Forecasting: Informal predictions about whether the model is likely to cross a threshold before the next round of testing.
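The Preliminary Assessment trigger described above boils down to a simple either/or rule, which can be sketched in a few lines of Python. This is only an illustration, not Anthropic's actual tooling: the `ModelSnapshot` record, its field names, and the function name are hypothetical. Only the 4x Effective Compute figure and the six-month figure come from the policy text quoted in this article.

```python
from dataclasses import dataclass

# Figures taken from the policy text quoted in this article.
EFFECTIVE_COMPUTE_MULTIPLIER = 4.0  # "4x or more in Effective Compute"
ELICITATION_MONTHS = 6.0            # at least six months of elicitation improvements


@dataclass
class ModelSnapshot:
    """Hypothetical record of a model's progress since the last comprehensive assessment."""
    effective_compute_ratio: float      # relative to the last comprehensively assessed model
    months_of_elicitation_gains: float  # calendar months of fine-tuning/elicitation progress
    rso_requests_review: bool = False   # Responsible Scaling Officer discretion


def needs_comprehensive_assessment(m: ModelSnapshot) -> bool:
    """Return True if any of the three triggers named in the article applies."""
    return (
        m.effective_compute_ratio >= EFFECTIVE_COMPUTE_MULTIPLIER
        or m.months_of_elicitation_gains >= ELICITATION_MONTHS
        or m.rso_requests_review
    )


# Usage: a model with only 2x effective compute but 7 months of elicitation gains.
print(needs_comprehensive_assessment(ModelSnapshot(2.0, 7.0)))  # True
```

Note that the second trigger is measured in calendar time, so a model can be flagged even if its benchmark performance has barely moved.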

Anthropic explicitly tests “safety-off” variants of the model, to see what it’s capable of without harmlessness training or refusal penalties, on the assumption that a bad actor could bypass those safety features (e.g., via stealing model weights and fine-tuning them).

What will they do if an AI looks dangerous?

Each Capability Threshold is paired with a set of Required Safeguards that must be in place before a model that crosses that threshold is trained further or deployed. These fall into two categories:

Deployment Safeguards (for ASL-3) are meant to ensure that no one can misuse the deployed model:

- Threat modeling of how an adversary could catastrophically misuse the system.

- "Defense in depth" as an approach to catching misuse attempts.

- Red-teaming to demonstrate that bad actors are highly unlikely to be able to use the system to cause catastrophic harm.

- Rapid remediation, i.e., demonstration that compromises of the system will be identified and remediated quickly enough to prevent catastrophic harm.

- Ongoing monitoring.

Security Safeguards (for ASL-3) are meant to prevent the model weights from being stolen and misused:

- Threat modeling of how an adversary could seize control of the model weights.

- Security frameworks based on industry standards, such as:

  - Perimeters and access controls around sensitive assets.

  - Lifecycle security to prevent compromised components from being introduced.

  - Proactive monitoring and mitigation of threats.

  - Resourcing security efforts, with an expected 5-10% of employees dedicated to security and adjacent work.

  - Following existing guidance on securing model weights where appropriate.

- Regular audits of the security program, with independent validation.

- Third-Party Environments: “Document how all relevant models will meet the criteria above, even if they are deployed in a third-party partner’s environment that may have a different set of safeguards.”

For a model to qualify as ASL-3 safe, the deployment protections must be “robust to persistent attempts to misuse the capability,” and the security must be “highly protected against non-state attackers attempting to steal model weights.”

Once ASL-3 safeguards are in place, Anthropic would conduct a follow-up capability assessment to make sure ASL-4 isn’t needed. Anthropic hasn't defined what ASL-4 safeguards are.

Deployment Constraints

Models that aren't sufficiently-below-the-threshold can only be deployed if:

- ASL-3 (or higher) safeguards are in place

- A Safeguards Report has been written

- The CEO and Responsible Scaling Officer approve deployment

- Anthropic’s Board and Long-Term Benefit Trust are notified

If the safeguards can’t be implemented right away, the company is supposed to delay deployment and reduce interim risk as best it can until the protections are ready.

Google DeepMind

Google DeepMind’s Frontier Safety Framework (FSF) (last updated February 2025) is its protocol for addressing severe risks from advanced AI models. It's mostly about misuse risks (e.g., cyberattacks, bioweapons) that could cause massive harm, but the February version also adds a section on "deceptive alignment" risk, which Google defines as "the risk that AI systems purposefully undermine human control over AI systems."[6]

What capabilities are they monitoring for?

The FSF monitors Critical Capability Levels (CCLs): capability thresholds that, if crossed, mean a model could cause severe harm in the absence of additional mitigations. DeepMind looks at risks from "uplift" (an AI makes it easier for a person to do a bad thing) and "autonomy" (the AI can do the bad thing all on its own).

Misuse CCLs are capabilities that could make the model helpful to a person trying to cause large-scale harm:

- CBRN (Chemical, Biological, Radiological, and Nuclear)

  - Uplift: Helping low-resourced actors create a mass-casualty biological weapon.[7]

- Cyber

  - Uplift: Major help (cost/resource reduction of >10x) with high-impact cyberattacks.

  - Autonomy: Full automation of end-to-end cyberattacks.

- Machine Learning R&D Acceleration

  - Uplift: Helping actors scale AI research dramatically, making catastrophic models more accessible.

  - Autonomy: Full automation of AI R&D. For Autonomy Level 1, they explicitly recommend that the industry as a whole adopt RAND SL 4-equivalent security controls. Notably, they say they won’t do this alone.

Deceptive Alignment CCLs are thresholds related to the model’s potential to mislead humans or undermine control.

- The first level is when the model develops instrumental reasoning that could enable deception.

- The second level is when automated monitoring is no longer sufficient to manage that risk — i.e., the model may hide its capabilities or sabotage safeguards.

DeepMind is also concerned with capabilities cutting across the CCL categories (like tool use, agency, or reasoning), which might not be risks in themselves but can enable the above.

How do they monitor these capabilities?

DeepMind evaluates its most powerful models regularly and does extra testing for models that represent a big leap over their predecessors.

Early Warning Evaluations are batteries of tests for whether a model is approaching a CCL. Each has an “alert threshold” that triggers further action if the model hits it. DeepMind says these evaluations simulate how the model might be used in the real world, including with scaffolding and tool augmentation, to get a realistic picture of risk.[8] They update these evaluations periodically as model capabilities and elicitation methods improve.

If DeepMind doesn’t have enough internal expertise for a risk domain (e.g., cyber ops), they may bring in outside experts to help with evaluations and do red-teaming.

If a model crosses the alert threshold, DeepMind may run additional tests to refine the risk estimate and understand how close the model is to a CCL.

What will they do if an AI looks dangerous?

If a model is found to be near or above a Critical Capability Level, DeepMind will put in place a response plan.

Deployment mitigations are intended to prevent users from misusing the deployed model. DeepMind lists safety fine-tuning, misuse detection and filtering, post-deployment monitoring, and governance review before making the model generally available.

Deployment only proceeds if the Google DeepMind AGI Safety Council approves a “safety case” (a formal argument that the model’s dangerous capabilities are sufficiently mitigated).[9] If adequate mitigation can't be put in place, deployment and/or further development of the model "might" be put on hold, especially if model capabilities are close to CCLs and mitigation is hard to guarantee.

Security mitigations are meant to prevent bad actors from stealing the model weights, removing safety features, and using the raw model for harm. These follow levels based on the RAND SL (Security Level) framework. For example, a CBRN-risk model might need RAND SL 2 protections, which include things like:

- Model weight encryption

- Strict internal access controls

- Physical security at inference and training sites

DeepMind emphasizes that model exfiltration is a serious risk, because it would enable malicious actors to bypass all deployment safeguards.

DeepMind makes clear that some mitigations only work if other labs adopt them too — especially security measures. The framework repeatedly emphasizes that societal-level risk mitigation requires coordination across the AI field.

Industry-Wide Recommendations

Beyond the security levels, Google DeepMind makes these broader industry recommendations:

- Universal Application of Mitigations: "These mitigations should be understood as recommendations for the industry collectively"

- Shared Safety Standards: The entire framework is positioned as "protocols here represent our current understanding and recommended approach" for the industry

- Coordinated Disclosure: They plan to share information with "other external organizations to promote shared learning and coordinated risk mitigation"

- Collective Governance: The repeated emphasis on "relevant organizations across the field" adopting "similar protocols"

This is in contrast to V1.0 of Google DeepMind’s FSF, which positioned Google as a leader: implementing first, and hoping others follow.

Some details of note

- They have at least three corporate governance bodies (with easily mixed-up names): Google DeepMind AGI Safety Council; Google DeepMind Responsibility and Safety Council; and Google Trust & Compliance Council. The Google DeepMind AGI Safety Council "periodically" reviews the implementation of the Framework.

- Unlike OpenAI and Anthropic, DeepMind doesn’t specifically say it will halt training at a certain threshold; it just states that deployment or development may be put on hold if no sufficient mitigation exists.

- "Our adoption of the protocols described in this Framework may depend on whether such organizations across the field adopt similar protocols." They point out that some of these safety mitigations only ultimately matter if everyone does them. While that’s true, safety advocates might have hoped for a more unconditional promise. And in fact, the document frequently says they “recommend” a given security level rather than saying they will implement it, suggesting these are aspirations.

OpenAI

OpenAI’s Preparedness Framework (PF) (updated April 2025) describes its policies for "tracking and preparing for frontier capabilities that create new risks of severe harm." It's meant to catch capabilities in new AI models that could cause substantial harm (defined as thousands of human deaths or billions of dollars of economic loss), and tell OpenAI what to do about it — either put special safeguards in place, don't deploy the model, or stop training the model altogether.

What capabilities are they monitoring?

The PF defines three "tracked categories" of AI capabilities risk[10]:

- Biological and Chemical: Can the AI help people do dangerous biological and chemical research?

- Cybersecurity: Can the AI help execute cyberattacks?

- AI Self-improvement: Can the AI accelerate AI research, such as by improving itself?

In all these categories:

- High risk models are those that amplify the risk of dangerous things that humans can already do, in principle. So for e.g. biology, a "high" risk model might be able to meaningfully help a non-expert create a (known) biological or chemical weapon.

- Critical risk models open up fundamentally new kinds of danger. So, again for biology, a "critical" risk model could e.g. help experts develop entirely new pandemic-level pathogens, or complete the full bioengineering cycle itself without human intervention.

The PF also lays out five "Research Categories"[11] that don't meet the bar for "tracked" but do seem like the kind of thing you'd want to pay attention to:

- Long-range Autonomy: “ability for a model to execute a long-horizon sequence of actions sufficient to realize a “High” threat model (e.g., a cyberattack) without being directed by a human (including successful social engineering attacks when needed).”

- Sandbagging: “ability and propensity to respond to safety or capability evaluations in a way that significantly diverges from performance under real conditions, undermining the validity of such evaluations.”

- Autonomous Replication and Adaptation: “ability to survive, replicate, resist shutdown, acquire resources to maintain and scale its own operations, and commit illegal activities that collectively constitute causing severe harm (whether when explicitly instructed, or at its own initiative), without also utilizing capabilities tracked in other Tracked Categories.”

- Undermining Safeguards: “ability and propensity for the model to act to undermine safeguards placed on it, including e.g., deception, colluding with oversight models, sabotaging safeguards over time such as by embedding vulnerabilities in safeguards code, etc.”

- Nuclear and Radiological: “ability to meaningfully counterfactually enable the creation of a radiological threat or enable or significantly accelerate the development of or access to a nuclear threat while remaining undetected.”

How do they monitor these capabilities?

OpenAI calls its first-line tests scalable evaluations. These are automated tests that "measure proxies that approximate whether a capability threshold has been crossed," though (perhaps understandably) OpenAI doesn't go into detail on what these proxies are.[12] An AI that hits a certain level of performance on these scalable evaluations is flagged as maybe having reached a dangerous capability threshold, in which case OpenAI tries to validate the result with a deep dive. A deep dive might include (human) red-teaming, third-party evaluations (e.g., wet lab studies for biorisks), or anything else the Safety Advisory Group thinks is a good idea.

Here's the example OpenAI gives of a capability evaluation:

To assess the degree to which a covered system can reduce the barriers to creating a biological weapon, our current evaluations test both how capable the system is at providing useful information to someone creating a weapon and how capable it is of directly integrating with relevant tools, such as ordering precursor materials via the Internet.

Our evaluations test acquiring critical and sensitive information across the five stages of the biological threat creation process: Ideation, Acquisition, Magnification, Formulation, and Release. These evaluations, developed by domain experts, cover things like how to troubleshoot the laboratory processes involved.

What will they do if an AI looks dangerous?

Implement "safeguards." Models that hit the "high" risk threshold can only be deployed with safeguards that "sufficiently minimize the risks of severe harm."

In addition, OpenAI says it will pause training (regardless of its intent to deploy the model) for an AI that shows "critical" capabilities.
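Read together, the two rules above amount to a small lookup from risk level to response. The sketch below is illustrative only: the `RiskLevel` enum and `required_responses` function are hypothetical names, and it assumes (as an interpretation, not something stated in these words in the text above) that a "critical" model also inherits the "high" deployment requirement.

```python
from enum import Enum


class RiskLevel(Enum):
    """Hypothetical labels for the Preparedness Framework's capability levels."""
    BELOW_HIGH = 0
    HIGH = 1
    CRITICAL = 2


def required_responses(level: RiskLevel) -> list[str]:
    """Return the responses this article attributes to each risk level."""
    responses: list[str] = []
    if level in (RiskLevel.HIGH, RiskLevel.CRITICAL):
        # Assumption: "critical" models also carry the "high" deployment requirement.
        responses.append(
            "deploy only with safeguards that sufficiently minimize the risks of severe harm"
        )
    if level is RiskLevel.CRITICAL:
        responses.append("pause training, regardless of intent to deploy")
    return responses


# Usage: list what each level would require under this reading.
for level in RiskLevel:
    print(level.name, required_responses(level))
```

What the lookup leaves out is the hard part: deciding what counts as "sufficient" safeguards, which the framework describes in terms of goals rather than specific technical measures.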

The Preparedness Framework describes two kinds of safeguards: those against users trying to do bad things with the AI ("malicious users"), and those against the AI doing bad things autonomously ("misaligned AI").

The safeguards against malicious users are:

- Robustness: The AI consistently refuses to do the bad things that users ask for.

- Usage Monitoring: Monitoring (both human and automated) catches malicious users before they accomplish anything too bad.

- Trust-based Access: Only people who are trusted to not use the model maliciously can access it.

The safeguards against misaligned AI are:[13]

- Lack of Autonomous Capability. The AI can't do the kind of long-range autonomous acting-on-its-own that would make it dangerous. (This isn't really a safeguard per se, just a scenario in which the AI isn't capable enough to be dangerous, even if misaligned.)

- Value Alignment. The AI applies human values (without being told to) to avoid causing harm, and has shown sufficiently minimal indications of misaligned behaviors like deception or scheming.

- Instruction Alignment. The model consistently understands and follows user or system instructions, even when vague, and those instructions rule out pathways to causing severe harm.[14]

- Reliable and Robust System Oversight. Effective AI and human oversight of model actions detects and prevents execution of harm or subversion of safeguards.

- System Architecture. The model is contained or restricted in ways that prevent it from causing harm; e.g., its output channels are limited, or it doesn't persist long enough.

Note that these safeguards aren't concrete actions (if the model displays this capability, we'll implement this particular technical solution), but more like aspirations. For example, the full description given of "Robustness" is:

Robustness: Users cannot use the model to cause the harm because they cannot elicit the capability, such as because the model is modified to refuse to provide assistance to harmful tasks and is robust to jailbreaks that would circumvent those refusals.

So the safeguard here is "make the model robust to jailbreaks," but this is a goal, not a plan.

OpenAI would test robustness via "efficacy assessments" like:

- Automated and expert red-teaming

- Prevalence of jailbreaks identified via monitoring and reports, in historical deployments

- Results from public jailbreak bounties and results from private and public jailbreak benchmarks

These measures might make jailbreaking harder, but they seem unlikely to result in a model that won’t eventually be jailbroken. And you can’t decide whether to deploy a model based on public jailbreak bounties on that same model, because that would require it to already be public. So maybe the hope is that results from past models will generalize to the current model.

It's possible OpenAI knows how it would accomplish these goals, but does not want to describe its methods publicly. It also seems possible that OpenAI does not know how it would accomplish these goals. Either way, OpenAI’s PF should be read as more of a spec plus tests than a plan for safe AI.

Notable differences between the companies’ plans

At the start of this article, we talked about how these plans all take a generally similar approach. But there are some differences as well.

OpenAI, unlike the others, explicitly pledges to halt training for "critical risk" models. This is a significant public commitment. In contrast, Google DeepMind's statement that deployment or development "might" be paused, and their mention that their adoption of protocols may depend on others doing the same, could be seen as a more ambivalent approach.

Another difference is that Anthropic talks more about governance structure. There’s a Responsible Scaling Officer, anonymous internal whistleblowing channels, and a commitment to publicly release capability reports (with redactions) so the world can see how they’re applying this policy. In contrast, Google DeepMind has spread responsibility for governing its AI efforts across several bodies.

Commentary on the safety plans

In 2024, Sarah Hastings-Woodhouse analyzed the safety plans of the three major labs and expressed three critical thoughts.

First, these aren’t exactly “plans,” as they lack the kind of detailed if-then commitments that you’d expect from a real plan. (Note that the companies themselves don’t call them plans.)

Second, the leaders of these companies have expressed substantial uncertainty about whether we’ll avoid AI ruin. For example, Dario Amodei, in 2024, gave 10-25% odds of civilizational catastrophe. So contrary to what you might assume from the vibe of these documents, they’re not necessarily expected to prevent existential risk even if followed.

Finally, if the race heats up, then these plans may fall by the wayside altogether. Anthropic’s plan makes this explicit: it has a clause (footnote 17) about changing the plan if a competitor seems close to creating a highly risky AI:

It is possible at some point in the future that another actor in the frontier AI ecosystem will pass, or be on track to imminently pass, a Capability Threshold without implementing measures equivalent to the Required Safeguards such that their actions pose a serious risk for the world. In such a scenario, because the incremental increase in risk attributable to us would be small, we might decide to lower the Required Safeguards. If we take this measure, however, we will also acknowledge the overall level of risk posed by AI systems (including ours), and will invest significantly in making a case to the U.S. government for taking regulatory action to mitigate such risk to acceptable levels.

But even without such a clause, a company may just drop its safety requirements if the situation seems dire.

The current situation

As of Oct 2025, there have been a number of updates to all the safety plans. Some changes are benign and procedural: more governance structures, more focus on processes, more frequent evaluations, more details on risks from misalignment, more policies to handle more powerful AI. But others raise meaningful worries about how well these plans will work to ensure safety.

The largest worry is that the labs have stepped back from previous safety commitments. DeepMind and OpenAI[15] now have their own equivalents of Anthropic’s footnote 17, letting them drop safety measures if they find another lab about to develop powerful AI without adequate safety measures. DeepMind, in fact, went further and has stated that it will only implement some parts of its plan if other labs do, too. Exactly which parts it will unconditionally implement remains unclear. And Anthropic no longer commits to defining ASL-N+1 evaluations before developing ASL-N models, acknowledging that it can't reliably predict what capabilities will emerge next.

The safeguard levels required for certain capabilities have also been reassessed in light of experience with more capable models. Anthropic and DeepMind reduced safeguards for some CBRN and cybersecurity capabilities after finding their initial requirements were excessive. OpenAI removed persuasion capabilities from its Preparedness Framework entirely, handling them through other policies instead. Notably, DeepMind did increase the safeguards required for ML research and development.

Regarding criticisms of the plans, it remains unclear if the plans are worthy of their name. While the labs have added some more detail to their plans (e.g., Anthropic has partially defined ASL-4), they are still vague on key details, like how to mitigate the risks from a fully autonomous, self-improving AI.

And it is unclear if the labs believe their plans will prevent extinction. In 2025, Dario Amodei commented that there is a 25% chance that things go "really, really badly", in spite of Anthropic's work on safety. Sam Altman, by contrast, put his estimate of P(doom) at 2% on a podcast in Oct 2025. Given that the labs have laid out similar plans, this is a big disagreement over how likely they are to actually work.

[1] Sadly, there is no industry standard terminology. It would be nice for there to be a consistent name for this sort of safety policy, and comparable concepts for the different kinds of thresholds in the policy. But the documents are all named differently, and where Anthropic uses “Capability Thresholds,” Google DeepMind uses “Critical Capability Levels” and OpenAI uses “High Capability thresholds,” and it’s unclear to what extent they’re equivalent.

[2] "CBRN" stands for "Chemical, Biological, Radiological, and Nuclear".

[3] "We recognize the potential risks of highly persuasive AI models. While we are actively consulting experts, we believe this capability is not yet sufficiently understood to include in our current commitments."

[4] "The model is notably more performant on automated tests in risk-relevant domains (defined as 4x or more in Effective Compute)."

[5] "This is measured in calendar time, since we do not yet have a metric to estimate the impact of these improvements more precisely."

[6] They could do this by deceiving us, of course, though this definition would also seem to include blatant, non-sneaky attempts. This definition that DeepMind uses for "deceptive alignment" doesn’t necessarily match other uses of that term.

[7] DeepMind doesn’t define an autonomy risk for CBRN.

[8] “We seek to equip the model with appropriate scaffolding and other augmentations to make it more likely that we are also assessing the capabilities of systems that will likely be produced with the model.” (p. 6)

[9] “Deployment... takes place only after the appropriate corporate governance body determines the safety case regarding each CCL the model has reached to be adequate.” (Frontier Safety Framework V2.0, p. 8)

[10] See the whole breakdown in Table 1 of the Preparedness Framework doc.

[11] "Persuasion" was a research category in a previous version of the Preparedness Framework, but removed in the most recent one.

[12] Interesting tidbit: they (of course) run these evaluations on the most capable version of the AI, in terms of things like system settings and available scaffolding, but also intentionally use a version of the model that very rarely gives safety refusals, "to approximate the high end of expected elicitation by threat actors attempting to misuse the model."

[13] OpenAI also lists "Lack of Autonomous Capability" as a safeguard against misaligned AI, but points out that it's not relevant since we're specifically looking at capable models here.

[14] Of course, this point, in combination with the point on value alignment, raises the question of what happens if instructions and human values conflict.

[15] Though note that OpenAI has promised it will announce when it does so, unlike the other labs.
