This is an entry into the 2025 'Essays on Longtermism' Competition.

In the essay 'Shaping Humanity’s Longterm Trajectory', Toby Ord proposed quantifying what longtermists should value as the instantaneous value of life^ integrated over all time. The impact of an intervention is then the difference between the area under this curve with and without the intervention.
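Written out, the quantity described above might look like the following. The symbols $v(t)$ (instantaneous value), $V$ (total value), $t_0$ (now) and $T$ (the end of the period considered) are labels chosen here for illustration, not notation taken from Ord's essay.

```latex
V = \int_{t_0}^{T} v(t)\,dt,
\qquad
\Delta V = \int_{t_0}^{T} \bigl( v_{\text{with}}(t) - v_{\text{without}}(t) \bigr)\,dt
```

Here $\Delta V$ is the impact of an intervention: the area between the curve with the intervention and the curve without it.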

Here I extend this concept by arguing that this equation can be simplified by combining it with the principle of future people's self-determination. Under this assumption it is our responsibility to put future people in a position to determine for themselves how to maximise their flourishing. After all, they will be in a much better position to determine this than we are, because they will have more information about their needs and likely far more resources than we do.

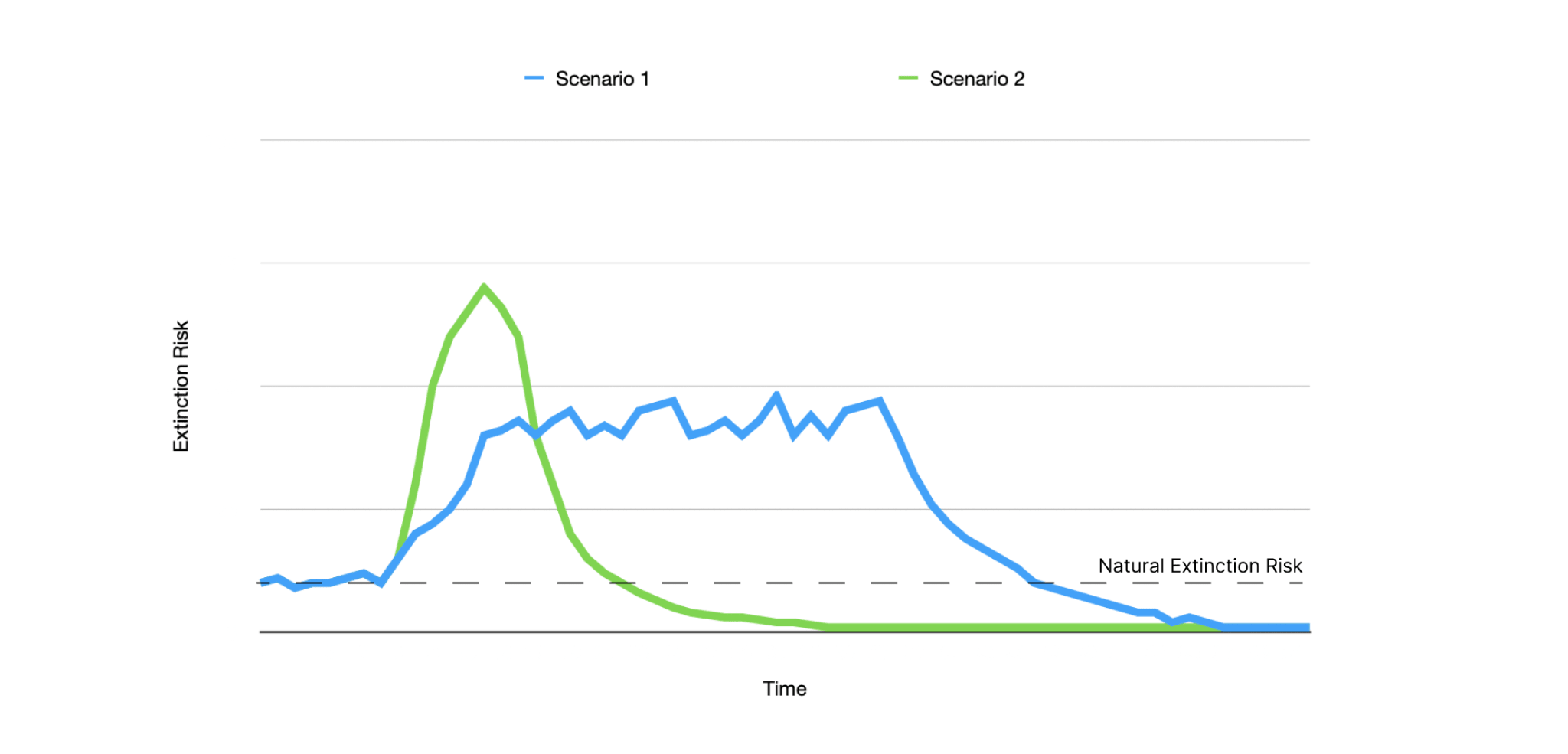

The most fundamental aspect of self-determination is existence itself. Under this assumption the equation simplifies to integrating the instantaneous extinction risk^^ over time, and the goal of longtermism is to identify and implement the scenario that minimizes the area under this curve between now and the eventual heat death of the universe. Formalized this way, it becomes clear that it may be beneficial to temporarily increase instantaneous extinction risk if doing so lets us achieve a much lower permanent extinction risk in the future. The graph qualitatively shows how the introduction of artificial causes of extinction dramatically increased extinction risk above the baseline natural level. It also demonstrates how scenario two, which has a higher initial risk and a higher maximum risk, has a lower risk profile overall than scenario one because of its smaller area under the curve.
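One way to write this simplified objective is sketched below. The symbols are my own labels: $r_s(t)$ is the instantaneous extinction risk under scenario $s$, $t_0$ is now, and $T_{\text{hd}}$ is the heat death of the universe.

```latex
R_s = \int_{t_0}^{T_{\text{hd}}} r_s(t)\,dt,
\qquad
s^{*} = \arg\min_{s} R_s
```

In this notation scenario two is preferred whenever $R_2 < R_1$, even if $r_2(t_0) > r_1(t_0)$ and $\max_t r_2(t) > \max_t r_1(t)$.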

Here I derive from the laws of physics an upper-bound strategy that ensures an exponentially decreasing extinction risk for all future time once we are able to harvest XX% of the Sun’s power. On the Kardashev scale this corresponds to a K1.7 civilization; we are currently a K0.7 civilization (the scale is logarithmic).

Extinction, if it happens, will be caused by an event. There are many events that could cause extinction, but the worst type would be one that presses right up against the very limits of physics. It would spread omnidirectionally at the speed of light and be 100% lethal. The only known cause that could possibly achieve this is the highly speculative false vacuum decay. If we can achieve an exponentially decreasing extinction risk even in the presence of this strongest form of extinction event, then we also achieve it for all weaker forms such as artificial pandemics or ASI takeover, hence the upper-bound phrasing used earlier.

The universe is expanding at a rate of 1% of light speed for every 10^21 km of distance. This means that if you are currently 10^23 km away from Earth, light (or a vacuum decay event) leaving Earth now will never reach you. Congratulations, you are now officially outside of Earth's cosmic event horizon. Currently there are roughly 10 million^^^ gravitationally bound galaxy clusters inside Earth's cosmic event horizon. In 100 billion^^^^ years there will be none, and the same will be true for each one of those galaxy clusters. If we send life to each of these galaxy clusters at near light speed now, then awaken it from stasis^^^^^ in 100 billion years, there will be 10 million civilisations that would each have to independently decide to dabble with false vacuums to cause universal extinction.
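The expansion figures above can be sanity-checked with Hubble's law. This is a minimal sketch, assuming a Hubble constant of about 70 km/s per megaparsec (my assumption, not a value stated in the essay) and treating the linear relation v = H0 × d as a rough approximation. The true cosmic event horizon depends on the future expansion history, so this only checks orders of magnitude.

```python
# Order-of-magnitude check of the expansion figures using Hubble's law.
# H0 = 70 km/s/Mpc is an assumed round value, not taken from the essay.
C_KM_S = 299_792.458      # speed of light in km/s
KM_PER_MPC = 3.0857e19    # kilometres in one megaparsec
H0 = 70.0                 # assumed Hubble constant in km/s per Mpc


def distance_for_fraction_of_c(fraction: float) -> float:
    """Distance (km) at which the recession speed equals fraction * c."""
    return fraction * C_KM_S * KM_PER_MPC / H0


# Distance over which the recession speed grows by 1% of light speed.
one_percent_distance_km = distance_for_fraction_of_c(0.01)

# Distance at which the recession speed reaches light speed (Hubble distance),
# used here as a rough stand-in for the cosmic event horizon.
hubble_distance_km = distance_for_fraction_of_c(1.0)

print(f"1% of c per {one_percent_distance_km:.1e} km")
print(f"recession at c beyond {hubble_distance_km:.1e} km")
```

Under these assumptions the two distances come out at roughly 10^21 km and 10^23 km, which agrees with the rounded figures used in the text.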

But we can do better in terms of the number of independent civilisations. It would be a waste to let all the stars in these galaxy clusters burn for 100 billion years before life begins to use them, so they should be disassembled into Jupiter-sized planets to prevent fusion. Furthermore, we can split gravitationally bound clusters. For example, lifting 10% of the mass of the Local Group (the gravitationally bound group of galaxies that the Earth is in) out of its gravitational well would require 10^52 joules. That is equivalent to the rest-mass energy of 10^35 kg, which is 0.000001% of the mass of the Local Group. Thus we can trade off a small proportion of the mass in these gravitationally bound galaxy clusters to create multiple new gravitationally bound star clusters. If this is done continuously over time, we would have an exponentially increasing number of them. This is a way of trading between the robustness of life and the total amount of life.
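The arithmetic in this paragraph can be reproduced with a short sketch. The Local Group mass of 10^43 kg and the 1 Mpc separation are my own assumed round numbers, chosen to be consistent with the percentages quoted above. The point-mass estimate G·M·m/R is only a crude cross-check and is not necessarily how the 10^52 J figure was originally derived.

```python
# Rough check of the Local Group energy figures.
# LOCAL_GROUP_MASS_KG and SEPARATION_M are assumed round values.
C_M_S = 2.998e8               # speed of light in m/s
G = 6.674e-11                 # gravitational constant in m^3 kg^-1 s^-2
LOCAL_GROUP_MASS_KG = 1e43    # assumed total mass of the Local Group
SEPARATION_M = 3.0857e22      # assumed characteristic radius: 1 megaparsec

LIFT_ENERGY_J = 1e52          # energy figure quoted in the essay

# Mass whose rest energy equals the lifting energy (E = m c^2).
mass_equivalent_kg = LIFT_ENERGY_J / C_M_S**2

# That mass as a fraction of the assumed Local Group mass.
fraction_of_local_group = mass_equivalent_kg / LOCAL_GROUP_MASS_KG

# Crude point-mass estimate of the energy needed to remove 10% of the mass.
lifted_mass_kg = 0.10 * LOCAL_GROUP_MASS_KG
binding_estimate_j = G * LOCAL_GROUP_MASS_KG * lifted_mass_kg / SEPARATION_M

print(f"mass equivalent: {mass_equivalent_kg:.1e} kg")
print(f"fraction of Local Group: {fraction_of_local_group:.1e}")
print(f"point-mass energy estimate: {binding_estimate_j:.1e} J")
```

With these inputs the mass equivalent is about 10^35 kg, roughly one part in 10^8 of the assumed Local Group mass, and the crude energy estimate lands within a small factor of 10^52 J.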

We can also do better than 100 billion years in terms of implementation speed. If we send out life at an average speed of 99% of the speed of light, then we only have to wait one million years before it is out of our cosmic event horizon. At 99.9% this drops to one hundred thousand years. To only have to wait 1000 years, the speed would have to be 99.99999% of light speed (the seven nines goal)^^^^^^. Even at this speed we could send a one-ton life-carrying probe to every galaxy cluster in Earth's cosmic event horizon using only 1.5 hours of the Sun's output. This is likely to be the most important thing a longtermist-oriented K1.7 civilization could do with its resources, and it would only take 10% of its annual energy budget for ten years.
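The energy claim can be checked with the relativistic kinetic energy formula, KE = (γ − 1)·m·c². This is a minimal sketch under stated assumptions: a one-ton probe is taken as 1000 kg, the solar luminosity as 3.828 × 10^26 W, and the K1.7 power level comes from Carl Sagan's interpolation K = (log10 P − 6) / 10 with P in watts. It counts only the kinetic energy delivered to the probes and ignores propulsion losses and any deceleration at the destination.

```python
import math

# Kinetic-energy budget for sending probes at "seven nines" of light speed.
# Probe mass, solar luminosity and the Kardashev formula are assumptions.
C_M_S = 2.998e8                 # speed of light in m/s
SOLAR_LUMINOSITY_W = 3.828e26   # nominal solar luminosity in watts
SECONDS_PER_YEAR = 3.156e7

PROBE_MASS_KG = 1000.0          # one metric ton per probe (assumed)
N_TARGETS = 10_000_000          # galaxy clusters, from the essay
BETA = 0.9999999                # 99.99999% of light speed


def lorentz_factor(beta: float) -> float:
    """Relativistic gamma for a speed given as a fraction of c."""
    return 1.0 / math.sqrt(1.0 - beta**2)


def kinetic_energy_j(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2 in joules."""
    return (lorentz_factor(beta) - 1.0) * mass_kg * C_M_S**2


total_energy_j = N_TARGETS * kinetic_energy_j(PROBE_MASS_KG, BETA)
hours_of_sun = total_energy_j / SOLAR_LUMINOSITY_W / 3600.0

# Power of a K1.7 civilization from Sagan's formula, then 10% of it for 10 years.
k17_power_w = 10 ** (10 * 1.7 + 6)
budget_j = 0.10 * k17_power_w * SECONDS_PER_YEAR * 10

print(f"gamma: {lorentz_factor(BETA):.0f}")
print(f"total kinetic energy: {total_energy_j:.1e} J")
print(f"equivalent solar output: {hours_of_sun:.2f} hours")
print(f"share of the ten-year budget: {total_energy_j / budget_j:.0%}")
```

Under these assumptions the total comes to roughly 2 × 10^30 J, about an hour and a half of solar output, and fits inside 10% of a K1.7 civilization's energy budget over ten years.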

To summarize, a K1.7 civilization would be able to use 10% of its annual energy budget for ten years to create a scenario where, within 1000 years, a lower bound of 10 million of the worst possible type of extinction event would have to occur independently to wipe out all life in the universe. In fact, once this is achieved, the instantaneous extinction risk falls well below both current levels and the baseline natural extinction risk, and it will continue to decrease exponentially for all future time until the heat death of the universe.
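The independence argument behind this summary can be sketched as follows. The symbols are my own: $N$ is the number of causally separated civilisations, $p_i$ is the probability that civilisation $i$ triggers its own extinction event over the period considered, and $p_{\max}$ is the largest of the $p_i$. The sketch assumes the events really are independent and that $p_{\max} < 1$.

```latex
P(\text{all life ends}) = \prod_{i=1}^{N} p_i \;\le\; p_{\max}^{\,N},
\qquad N \approx 10^{7}
```

Because the bound shrinks exponentially in $N$, any process that keeps increasing the number of separated civilisations keeps pushing the overall risk down.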

This essay is a call to the effective altruism community to identify the fastest and safest scenarios that enable humanity to achieve a K1.7 civilization status and the additional functionality required to seed life throughout the cosmic event horizon.

^

Toby Ord used the word humanity instead of life, I'm just generalising the concept here.

^^

When I refer to extinction risk I mean the risk that all life in the universe dies, not just human life. By the instantaneous extinction risk I mean the probability, between zero and one, that the universe will not contain life in the next time period. So if extinction happens, the curve is at a value of one for all time (unless you believe in abiogenesis, which I personally do not view as being plausible).

^^^

ChatGPT5 Extended Thinking: 1.5–3 million

Grok Expert: 34 million

Claude: 25-150 million

Rounded average estimate: 40 million

So I rounded it to the closest order of magnitude, 10 million.

This is a lower-bound estimate, since it is possible to artificially create separate gravitationally bound clusters from one bound cluster. I should point out that it is probably also possible to artificially merge some separate gravitationally bound clusters together, but this is much harder to do.

^^^^

ChatGPT5 Extended Thinking: Infinity

Grok Expert: 95 billion years

Claude Opus 4.1: 150 billion years

Google Browser AI: 150 billion years

I rounded the average to 100 billion years.

Letting all the stars in these galaxy clusters burn for 100 billion years represents an enormous waste of energy. Ideally you would use non-intelligent mechanisms to convert them into Jupiter-sized planets to stop fusion. Life doesn’t have to be in suspended animation for all that time either; it just needs to be kept below the technology level needed to initiate vacuum decay.

^^^^^

Stasis in this case meaning any state where they are not able to create false vacuum decay. Under this definition life on Earth is currently in stasis, so life can certainly thrive in stasis.

^^^^^^

ChatGPT5 Extended Thinking: 99.999993%

Grok Expert: 99.9999928%

I rounded the average to 99.99999%

Author here. Here are some of the novel aspects of this essay (to the best of my knowledge).

* Identified a new longtermist cause area of increasing humanity's Kardashev scale and quantified the K1.7 civilization scale as being particularly longtermist relevant.

* Rigorously proved a strategy that will achieve existential risk that is lower than the natural background existential risk before artificial extinction causes were invented.

* Proved a strategy that will ensure exponentially decreasing existential risk for all future time until the heat death of the universe.

* This was achieved by generalizing extinction events to cause and effect, which is an infohazard-free way of analyzing extinction risks. This seems to be the first literature in EA that does this.

* This essay described the novel idea of how the expansion of the universe can be used to defend against existential risk of all types.

* Identified another longtermist-relevant feature of higher-level Kardashev civilizations, adding to simulating conscious experience, etc.

* Set the appropriate scale of longtermist thinking to the cosmic event horizon scale. I find that most EA literature on space settlement assumes humanity's maximum expansion is limited to this galaxy. There are 64 mentions of the word "galaxies" in longtermist EA forum articles and one other longtermist article that mentions the cosmic event horizon.

* Talked about the heat death of the universe as the ultimate existential threat. There are 15 other longtermist EA forum articles that talk about this. So it isn't particularly novel or neglected.

* The Kardashev scale is mentioned in only one previous longtermist EA forum article, which in its own words says it "is hard to take seriously". Here I show it should be taken seriously.

* Introduced the concept of self-determination into the longtermist discussion. Self-determination is mentioned in a serious capacity two times in the longtermist EA forum articles, so it is not completely novel but is quite neglected in the EA literature.

* Argued against the current longtermist majority view that minimizing existential risk now is all that matters. Also not novel but does challenge the current paradigm.

* I discuss a novel physical mechanism by which we can trade off between a marginally bigger future and a marginally more robust future.