david_reinstein

Bio

Participation 2

I'm the Founder and Co-director of The Unjournal. We organize and fund public, journal-independent feedback, rating, and evaluation of hosted papers and dynamically presented research projects. We will focus on work that is highly relevant to global priorities (especially in economics, social science, and impact evaluation). We will encourage better research by making it easier for researchers to get feedback and credible ratings on their work.

Previously I was a Senior Economist at Rethink Priorities, and before that an Economics lecturer/professor for 15 years.

I'm working to impact EA fundraising and marketing; see https://bit.ly/eamtt

And projects bridging EA, academia, and open science; see bit.ly/eaprojects

My previous and ongoing research focuses on determinants and motivators of charitable giving (propensity, amounts, and 'to which cause?'), and drivers of/barriers to effective giving, as well as the impact of pro-social behavior and social preferences on market contexts.

Podcasts: "Found in the Struce" https://anchor.fm/david-reinstein

and the EA Forum podcast: https://anchor.fm/ea-forum-podcast (co-founder, regular reader)

Twitter: @givingtools

Posts 72

Comments 923

Topic contributions 9

Here's the Unjournal evaluation package

A version of this work has been published in the International Journal of Forecasting under the title "Subjective-probability forecasts of existential risk: Initial results from a hybrid persuasion-forecasting tournament"

We're working to track our impact on evaluated research (see coda.io/d/Unjournal-...), so we asked Claude 4.5 to consider the differences across paper versions, how they related to the Unjournal evaluators' suggestions, and whether this was likely to have been causal.

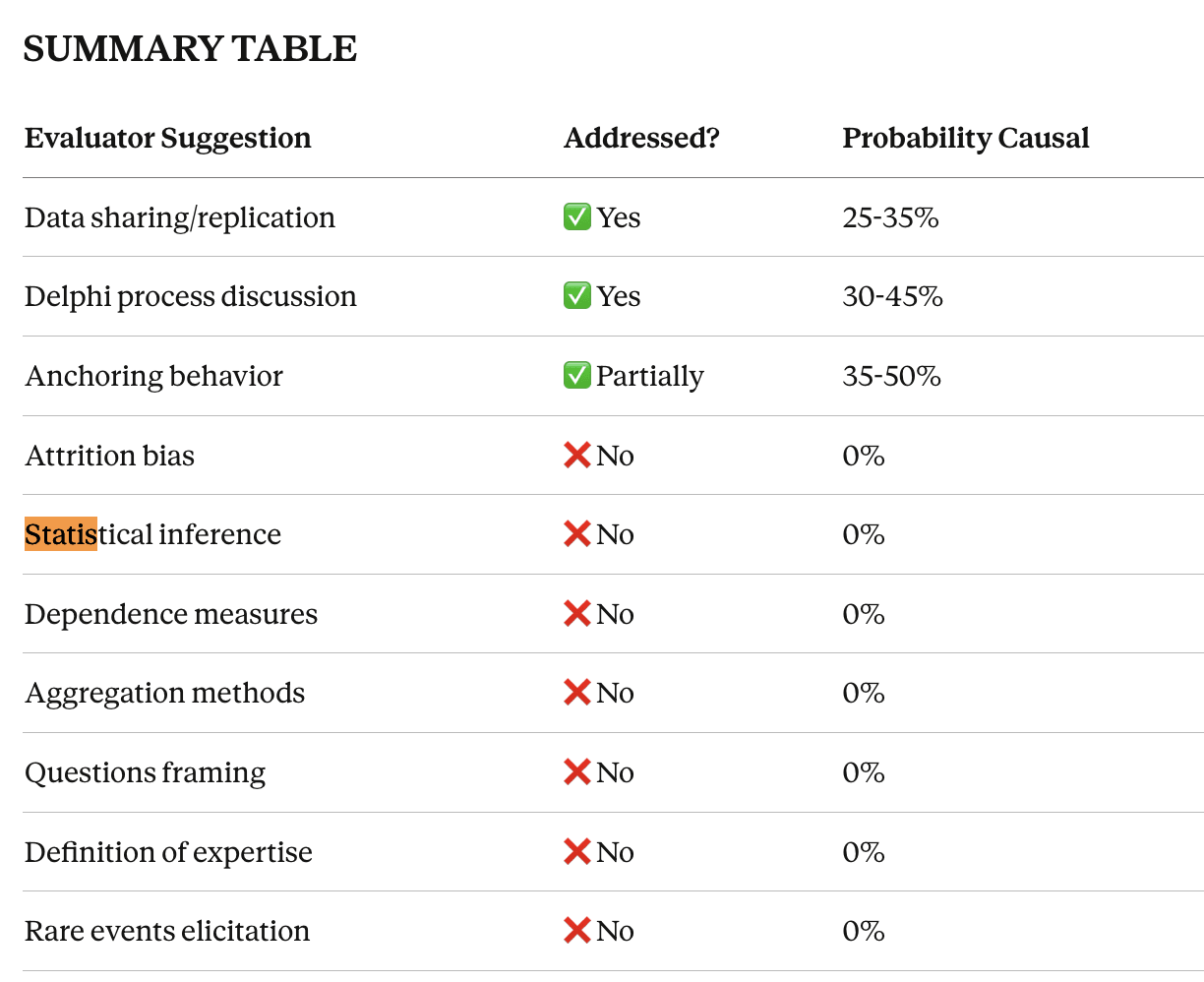

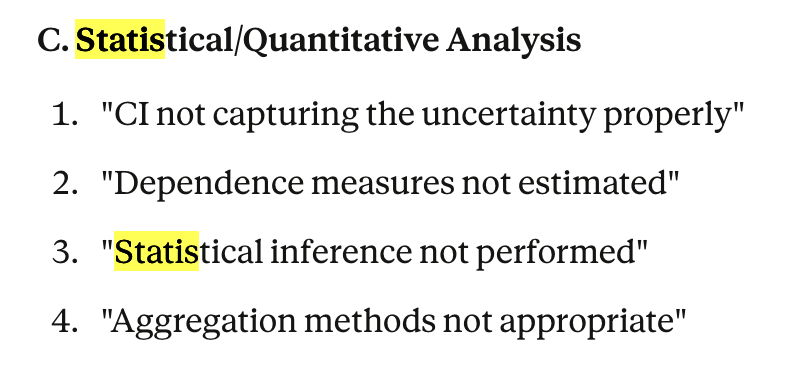

See Claude's report here, generated from the prompts here. Claude's assessment: Some changes seemed to potentially reflect the evaluators' comments. Several ~major suggestions were not implemented, such as the desire for further statistical metrics and inference.

Maybe the evaluators (or Claude) got this wrong, or these changes were not warranted under the circumstances. We're all about an open research conversation, and we invite the authors' (and others') responses.

I've found it useful both for posts and for considering research and evaluations of research for Unjournal, with some limitations of course.

- The interface can be a little overwhelming, as it reports so many different outputs at the same time, some of them overlapping

+ but I expect it's already pretty usable and I expect this to improve.

+ it's an agent-based approach, so as LLMs improve you can swap in the new ones.

I'd love to see some experiments with directly integrating this into the EA forum or LessWrong in some ways, e.g. automatically doing some checks on posts or on drafts or even on comments. Or perhaps an opt-in to that. It could be a step towards systematic ways of improving the dialogue on this forum -- and forums and social media in general, perhaps. This could also provide some good opportunity for human feedback that could improve the model, e.g. people could upvote or downvote the roast my post assessments, etc.

In case it's helpful, the EA Market Testing team (not active since August 2023) was trying to push some work in this direction, as well as collaboration and knowledge-sharing between organizations.

See our knowledge base (gitbook) and data analysis. (Caveat: it is not all SOTA in a marketing sense, and sometimes leaned a bit towards the academic/scientific approach to this).

Happy to chat more if you're interested.

I think "an unsolved problem" could indicate several things. It could be:

- We have evidence that all of the commonly tried approaches are ineffective, i.e., we have measured all of their effects and they are tightly bounded as being very small.

- We have a lack of evidence, and thus very wide credible intervals over the impact of each of the common approaches.

To me, the distinction is important. Do you agree?

You say above

meaningful reductions either have not been discovered yet or do not have substantial evidence in support

But even "do not have substantial evidence in support" could mean either of the above ... a lack of evidence, or strong evidence that the effects are close to zero. At least to my ears.

As for 'hedge this', I was referring to the paper not to the response, but I can check this again.

Some of this may be a coordination issue. I wanted to proactively schedule more meetings at EAG Connect, but I generally found fewer experienced/senior people at key orgs in the Swapcard relative to the bigger EAGs. And some that were there didn't seem responsive ... as it's free and low-cost, there may also be people who sign up and then don't find the time to commit.

Posted and curated some Metaculus questions in our community space here.

This is preliminary: I'm looking for feedback and also hoping to post some of these as 'Metaculus moderated' (not 'community') questions. Also collaborating with Support Metaculus' First Animal-Focused Forecasting Tournament.

Questions like:

Predict experts' beliefs over “If the price of the... hamburger-imitating plant products fell by 10% ... how many more/fewer chickens consumed globally in 2030, as a +/- percent?”

Project Idea: 'Cost to save a life' interactive calculator promotion

What about making and promoting a ‘how much does it cost to save a life’ quiz and calculator?

This could be adjustable/customizable (in my country, around the world, of an infant/child/adult, counting ‘value added life years’ etc.) … and trying to make it go viral (or at least bacterial) as in the ‘how rich am I’ calculator?

The case

GiveWell has a page with a lot of technical details, but it’s not compelling or interactive in the way I suggest above, and I doubt they market it heavily.

GWWC probably doesn't have the design/engineering time for this (not to mention refining this for accuracy and communication). But if someone else (UX design, research support, IT) could do the legwork I think they might be very happy to host it.

It could also mesh well with academic-linked research so I may have some ‘Meta academic support ads’ funds that could work with this.

Tags/backlinks (~testing out this new feature)

@GiveWell @Giving What We Can

Projects I'd like to see

EA Projects I'd Like to See

Idea: Curated database of quick-win tangible, attributable projects